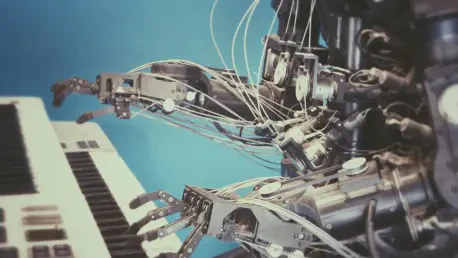

Oscar Vail is a technology expert known for his work in emerging fields such as quantum computing and robotics. Today, we discuss MotionGlot, an AI model that generates motion for robots and avatars from text.

Can you explain what MotionGlot is and how it works?

MotionGlot is a model that generates motion trajectories for different embodiments, such as quadrupeds and humans, from simple text commands. Unlike language-only models, which answer text with text, it turns an instruction into a sequence of movements for the target body. The step from command to action resembles language translation, except that the target “language” is motion rather than text.
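To make the translation analogy concrete, here is a minimal sketch of how a trajectory could be written as a sequence of discrete "motion tokens" and read back, the way text is written as word tokens. It is an illustration only: the interview does not describe MotionGlot's actual tokenizer, and the `CODEBOOK`, `encode`, and `decode` names are hypothetical.

```python
import math

# Hypothetical codebook (an assumption, not MotionGlot's vocabulary):
# each token id stands for one small 2-D displacement (dx, dy) per step.
CODEBOOK = {
    0: (0.0, 0.0),    # stand still
    1: (0.1, 0.0),    # step forward
    2: (-0.1, 0.0),   # step backward
    3: (0.0, 0.1),    # step left
    4: (0.0, -0.1),   # step right
}

def encode(trajectory):
    """Turn a list of (x, y) positions into motion tokens.

    Each step between consecutive positions is replaced by the id of the
    nearest displacement in the codebook.
    """
    tokens = []
    for (x0, y0), (x1, y1) in zip(trajectory, trajectory[1:]):
        step = (x1 - x0, y1 - y0)
        token = min(CODEBOOK, key=lambda t: math.dist(CODEBOOK[t], step))
        tokens.append(token)
    return tokens

def decode(tokens, start=(0.0, 0.0)):
    """Turn motion tokens back into a list of (x, y) positions."""
    x, y = start
    path = [(x, y)]
    for token in tokens:
        dx, dy = CODEBOOK[token]
        x, y = x + dx, y + dy
        path.append((x, y))
    return path

if __name__ == "__main__":
    walk = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.0), (0.2, 0.1)]
    tokens = encode(walk)
    print(tokens)
    print(decode(tokens))
```

Once motion is expressed as tokens like these, a text command and a motion sequence can in principle be handled by the same kind of sequence model, which is the sense in which generating motion resembles translating between languages.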

What are the key advancements that MotionGlot brings to the field of robotics and animation?

MotionGlot’s main advance is converting text commands into appropriate movements for different embodiments. The same model can generate motion for a bipedal humanoid or a quadrupedal robot, which broadens its use in human-robot interaction and virtual worlds. The novelty lies in its adaptability across spatial contexts and in how fluidly it translates motion from one embodiment to another.

How did you train MotionGlot to understand and generate diverse movements?

To train MotionGlot, we used comprehensive datasets of annotated motion sequences for quadrupeds and humans. The QUAD-LOCO and QUES-CAP datasets play a pivotal role here, providing detailed descriptions that guide the model. Because the training data is so broad, the model can also carry out commands it has not encountered before and extrapolate to novel scenarios.

What practical applications do you foresee for MotionGlot?

The potential applications are vast and varied, spanning from enhanced human-robot collaboration to immersive virtual reality environments and advanced digital animations. In collaborative settings, MotionGlot could enable smoother coordination between humans and machines. Meanwhile, in the domains of gaming and digital animation, the ability to generate realistic, responsive movements opens new creative avenues.

Can MotionGlot use motion to answer questions, and if so, how does that process work?

Yes, MotionGlot can respond to questions with motion. It interprets the context of a question and generates a motion that corresponds to the intended meaning. For instance, when asked about a cardio activity, it animates a jogging sequence. Even abstract prompts, such as “a robot walking happily,” produce appropriately expressive movements.

What challenges did you face while developing MotionGlot, and how did you overcome them?

The primary challenge was ensuring accurate motion translation across diverse physical embodiments. Each body type moves differently, so the model has to understand and adapt to those differences. We addressed this through iterative refinement and training on extensive datasets to ensure adaptability and consistency across motion styles.

What are your plans for future developments with MotionGlot?

Looking ahead, the roadmap includes making MotionGlot’s model and source code openly available to researchers. This transparency aims to foster collaboration and spur innovation. Scaling the model with larger, more diverse datasets should further enhance its capabilities.

How do you envision the role of MotionGlot in advancing the field of AI-generated motion?

MotionGlot is poised to make substantial contributions to industries heavily reliant on robotics and animation. By bridging the gap between linguistic commands and physical actions, it not only simplifies the creation process but also enriches the interactivity and realism of robotic systems and animated content. As language models converge with motion technology, we anticipate more intuitive and natural human-robot interfaces, empowering novel applications and user experiences.

Do you have any advice for our readers?

For those interested in the intersection of AI and robotics, I encourage an open mindset towards innovation and collaboration. With technology evolving rapidly, staying informed and actively engaging with the community can yield unexpected insights and opportunities. Above all, don’t hesitate to experiment and iterate within this dynamic field. That is how breakthroughs are made.