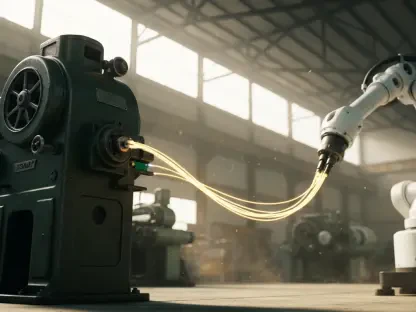

Multimodal Artificial Intelligence (AI) marks a significant leap in technology: it blends several data types into coherent, actionable insights. Unlike traditional AI systems that handle a single form of data, multimodal AI integrates text, images, audio, video, and numerical data, mirroring the human ability to synthesize multiple sensory inputs. This transformation is not merely theoretical; practical applications are already emerging across multiple sectors, showing how multimodal systems can improve decision-making, efficiency, and innovation. One tangible example is a humanoid robot that processes voice commands and visual inputs together to perform interactive tasks. It reflects a broader shift in which AI systems move from reliance on a single data type toward a more nuanced, contextual understanding built from many input streams, improving both machine capability and human collaboration.

Practical Applications and Industry Transformations

Multimodal AI is already reshaping how businesses operate and interact with customers, in sectors ranging from e-commerce and healthcare to autonomous vehicles. In e-commerce, it bridges visual and text data, enabling visual search, augmented reality experiences, and customer support systems that improve user engagement and satisfaction. Google’s Gemini exemplifies these capabilities by combining vision, audio, and text inputs to handle complex tasks, giving consumers a more integrated and intuitive digital experience. Likewise, Amazon’s StyleSnap combines computer vision and natural language processing to suggest personalized fashion choices, showing how multimodal AI can anticipate customer needs rather than merely meet them.

In healthcare, multimodal AI is particularly promising, with applications in medical diagnostics and patient management gaining traction. PathAI, for instance, integrates the interpretation of images and clinical data, offering medical professionals improved diagnostic accuracy, which is critical to patient treatment. By synthesizing diverse data modes, healthcare systems are poised to offer more personalized care and predictive analytics, which can improve patient outcomes and streamline medical research. Autonomous vehicles, in turn, show the real-world utility of multimodal AI: they combine sensors, cameras, and GPS data to navigate complex environments safely, setting a new precedent for transportation innovation and safety standards.

Technical Evolutions and Architectures

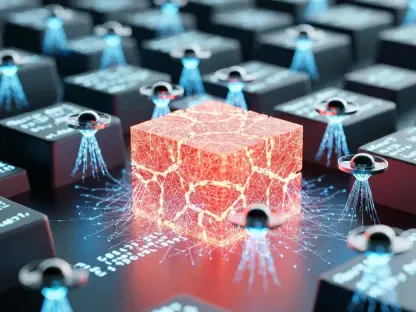

Multimodal AI’s capabilities are rooted in its technical architecture, which enables the processing and synthesis of varied data inputs. This architecture is typically composed of three modules: input, fusion, and output. Each plays a distinct role in converting raw data into actionable intelligence. The input module collects and processes data from disparate sources, using unimodal neural networks tailored to each form of data. This first step matters because it ensures that data of varied origins is accurately captured and pre-processed for the subsequent stages.

Following data input, the fusion module consolidates these diverse data sets into a unified format, typically numerical embeddings that allow cross-modal interaction. This alignment is critical: it makes data from different modalities comparable within the system, so that different data types can be integrated. The success of this process depends heavily on the robustness of the embeddings and on the system’s ability to bridge disparities between forms of data. Finally, the output module uses the integrated data to generate responses. These outputs range from predictive analytics and decision-making aids to generative content such as videos or interactive experiences, reflecting the versatility of multimodal AI systems.
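The three-module flow described above can be illustrated with a small, self-contained Python sketch. Everything in it is an illustrative assumption rather than the design of any particular system: the function names (`encode_text`, `encode_image`, `fuse`, `score`, `run_pipeline`) are hypothetical, the encoders are toy stand-ins for trained unimodal neural networks, fusion is done by simple concatenation (one of several possible strategies), and the output weights are arbitrary rather than learned.

```python
import math
from typing import Dict, List

Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    """Scale a vector to unit length so embeddings from different modalities are comparable."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


# --- Input module: one toy encoder per modality (stand-ins for unimodal networks) ---

def encode_text(text: str, dim: int = 4) -> Vector:
    """Toy text encoder: count characters into `dim` buckets by character code."""
    vec = [0.0] * dim
    for ch in text.lower():
        vec[ord(ch) % dim] += 1.0
    return l2_normalize(vec)


def encode_image(pixels: List[List[float]], dim: int = 4) -> Vector:
    """Toy image encoder: histogram of pixel intensities (expected in [0, 1]) with `dim` bins."""
    vec = [0.0] * dim
    for row in pixels:
        for p in row:
            idx = min(max(int(p * dim), 0), dim - 1)  # clamp to a valid bin
            vec[idx] += 1.0
    return l2_normalize(vec)


# --- Fusion module: combine per-modality embeddings into one vector ---

def fuse(embeddings: Dict[str, Vector]) -> Vector:
    """Fusion by concatenation, in sorted modality order so the layout is deterministic."""
    fused: Vector = []
    for name in sorted(embeddings):
        fused.extend(embeddings[name])
    return fused


# --- Output module: turn the fused vector into a response (here, a single score) ---

def score(fused: Vector, weights: Vector) -> float:
    """Toy output head: linear combination passed through a sigmoid."""
    if len(fused) != len(weights):
        raise ValueError("weights must match the fused vector length")
    z = sum(w * x for w, x in zip(weights, fused))
    return 1.0 / (1.0 + math.exp(-z))


def run_pipeline(text: str, pixels: List[List[float]], weights: Vector) -> float:
    """Input -> fusion -> output, end to end."""
    embeddings = {"text": encode_text(text), "image": encode_image(pixels)}
    return score(fuse(embeddings), weights)


if __name__ == "__main__":
    # Arbitrary illustrative weights; a real system would learn these.
    print(run_pipeline("red shoes", [[0.0, 0.5], [0.9, 1.0]], [0.1] * 8))
```

Concatenation keeps each modality's embedding intact and leaves the weighting to the output stage; real systems may instead fuse earlier or use attention across modalities, which this sketch does not attempt to show.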

Challenges and Future Prospects

Despite its potential, realizing multimodal AI’s full capabilities involves several challenges. One of the primary hurdles is the technical complexity of aligning and combining data from different modalities, which requires advanced methods to ensure the modalities interact and are synthesized correctly. These systems must also interpret the semantics of each data type effectively, which calls for continuous refinement of the underlying algorithms. Another significant challenge is data quality: multimodal AI systems rely heavily on high-quality, ethically sourced data. Ongoing improvements are also needed before these systems can reliably interpret subtleties such as intent and emotion, which are often culturally or contextually nuanced.

Moreover, while these AI systems can process and synthesize vast amounts of data, they also require rigorous testing and fine-tuning to mitigate risks and improve accuracy. Techniques like reinforcement learning and adversarial testing help refine multimodal AI systems so that they perform safely and effectively in real-world scenarios. Despite these obstacles, multimodal AI has considerable potential for innovation. Its capacity to support intelligent, interactive systems that emulate human cognitive processes opens new areas of application across industries, changing not only how businesses operate but also how technology fits into everyday life.

Implications and Conclusion

Multimodal AI represents a significant advance in technology, integrating different types of data into clear and actionable insights. Where traditional AI is restricted to a single form of data, multimodal AI combines text, images, audio, video, and numerical data, much as human cognition synthesizes sensory information from multiple sources. This evolution is no longer theoretical: practical applications are appearing across numerous fields, showing how multimodal systems can improve decision-making, efficiency, and innovation. A humanoid robot that processes voice commands and visual cues to perform interactive tasks illustrates this strength. Overall, the progression marks a major shift from relying on single data streams to forming a more nuanced understanding from diverse inputs, improving machine capabilities and fostering human collaboration.