In this conversation, Oscar Vail, a technology expert who follows emerging fields such as quantum computing, robotics, and open-source projects, discusses the innovations behind the 3D-GRAND dataset. The dataset strengthens embodied AI's ability to connect language with 3D spaces, and it represents a significant step forward in how household robots and other AI systems interact with their environments.

Can you explain the significance of the 3D-GRAND dataset in the development of embodied AI?

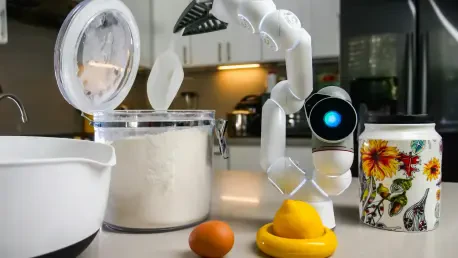

3D-GRAND is an important resource for embodied AI in household robots because it bridges the gap between language and 3D environments. It provides a large collection of synthetically generated rooms paired with annotated 3D structures, which helps robots and AI systems interpret the spatial terms people use, such as "next to," "behind," or "on top of." In short, it gives AI models a robust way to learn how spatial language maps onto the 3D world, which should lead to more intuitive interactions between robots and humans.

What specific challenges does 3D-GRAND address in connecting language to 3D spaces?

One of the major hurdles is the scarcity of 3D data paired with meaningful language. Existing datasets often fail to capture the specific spatial relationships and object orientations found in a 3D environment. 3D-GRAND addresses this with dense annotations that link phrases to objects with known 3D coordinates in each scene, so AI models can learn exactly which space or object a piece of language refers to.
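To make "dense annotation" concrete, here is a minimal Python sketch of how a grounded description might be stored. It assumes a hypothetical schema in which each scene object carries an ID, a category label, and a 3D box, and each noun phrase points at one object ID. The class names, fields, and coordinates are illustrative only and are not the dataset's actual format.

```python
from dataclasses import dataclass


# Hypothetical, simplified record types; not 3D-GRAND's actual schema.
@dataclass(frozen=True)
class SceneObject:
    object_id: str                      # e.g. "lamp-1"
    label: str                          # object category
    center: tuple[float, float, float]  # (x, y, z) box center in meters, z pointing up
    size: tuple[float, float, float]    # box extents along x, y, z


@dataclass(frozen=True)
class GroundedPhrase:
    text: str       # noun phrase as it appears in the sentence
    object_id: str  # ID of the scene object the phrase refers to


def resolve(phrases, objects):
    """Map each grounded noun phrase to the 3D center of the object it names."""
    by_id = {obj.object_id: obj for obj in objects}
    return {p.text: by_id[p.object_id].center for p in phrases}


# Illustrative scene for the sentence "The lamp is on the table."
scene = [
    SceneObject("lamp-1", "lamp", (1.0, 0.5, 1.1), (0.3, 0.3, 0.6)),
    SceneObject("table-2", "table", (1.0, 0.5, 0.4), (1.2, 0.8, 0.8)),
]
phrases = [GroundedPhrase("the lamp", "lamp-1"), GroundedPhrase("the table", "table-2")]
print(resolve(phrases, scene))
# {'the lamp': (1.0, 0.5, 1.1), 'the table': (1.0, 0.5, 0.4)}
```

Because every phrase resolves to coordinates, a model trained on such data can be asked to locate "the lamp" rather than merely name it.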

How does the 3D-GRAND dataset improve the accuracy and performance of models compared to previous 3D datasets?

Compared with prior datasets, 3D-GRAND significantly improves grounding accuracy and reduces hallucinations. Models trained on it reached 38% grounding accuracy, a notable margin above other models, and their hallucination rate fell to about 6.67%, lower than previous baselines. Together, these gains make the decisions and interactions of the resulting AI systems more reliable.

What are some limitations of existing datasets that 3D-GRAND aims to overcome?

Existing datasets are often expensive, slow to build, and hard to scale because they rely heavily on manual annotation, and linking specific objects to language within a 3D environment is tedious work for human annotators. 3D-GRAND sidesteps this by using synthetic data, which allows the annotation process to be automated, drastically cutting time and cost and making it possible to scale to far larger datasets.

Could you discuss the role of generative AI in creating the synthetic rooms within the 3D-GRAND dataset?

Generative AI plays a pivotal role in 3D-GRAND by creating the synthetic room scenes and their accompanying data. Because these detailed 3D environments are generated automatically, there is no need to build each room through manual, photorealistic modeling. The rooms are then annotated automatically using what the AI models know about objects and spatial relationships, so each scene is ready for use in AI training.

How does the automatic annotation process work for these synthetic rooms and what advantages does it offer?

The automatic annotation process uses AI models to recognize and label objects in the synthetically generated rooms as they are created. This removes the need for human intervention and systematically records every object along with its spatial relationships to the others. The result is a large gain in speed, cost, and breadth of data collection, and data at this scale is crucial for training more sophisticated AI systems.
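A rough illustration of why no human labeling is needed: in a synthetic room, every object's category and position are already known, so grounded statements can be derived directly from the scene data. The sketch below is a deliberate simplification under stated assumptions. It substitutes fixed distance thresholds and a sentence template for the AI models the interview describes, and the scene, object IDs, and thresholds are all hypothetical.

```python
import math
from itertools import permutations

# Hypothetical scene: object ID -> (category, (x, y, z) center in meters, z up).
# In a synthetic room these values come from the scene file, not from a human.
SCENE = {
    "sofa-1": ("sofa", (0.0, 2.0, 0.4)),
    "table-2": ("table", (1.5, 2.0, 0.4)),
    "lamp-3": ("lamp", (1.5, 2.0, 1.1)),
}


def relation(a, b):
    """Return a coarse spatial relation of point a relative to point b, or None.

    The 0.3 m and 2.0 m thresholds are arbitrary illustrative choices.
    """
    (ax, ay, az), (bx, by, bz) = a, b
    horizontal = math.hypot(ax - bx, ay - by)
    if horizontal < 0.3 and az > bz:
        return "above"
    if horizontal < 2.0 and abs(az - bz) < 0.3:
        return "next to"
    return None


def annotate(scene):
    """Emit template sentences whose noun phrases are tagged with object IDs."""
    sentences = []
    for id_a, id_b in permutations(scene, 2):
        (label_a, pos_a), (label_b, pos_b) = scene[id_a], scene[id_b]
        rel = relation(pos_a, pos_b)
        if rel:
            sentences.append(f"The {label_a} [{id_a}] is {rel} the {label_b} [{id_b}].")
    return sentences


for sentence in annotate(SCENE):
    print(sentence)
# The sofa [sofa-1] is next to the table [table-2].
# The table [table-2] is next to the sofa [sofa-1].
# The lamp [lamp-3] is above the table [table-2].
```

A language model can produce far richer and more varied descriptions than this template, but the underlying point is the same: the ground truth it describes is read straight from the synthetic scene.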

What techniques are used to ensure that each noun phrase in the dataset is grounded to specific 3D objects?

To ground noun phrases, 3D-GRAND relies on scene graphs, which map how objects in a scene relate to one another, together with natural language processing models. Each noun phrase is linked to the specific 3D object or objects it refers to, so that language descriptions stay closely aligned with their physical representations.
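Grounding is easiest to see in the annotation text itself. The following sketch assumes a hypothetical inline markup in which each noun phrase is wrapped as `<p>...</p>` and immediately followed by its object ID in square brackets; the dataset's actual format may differ. It extracts the phrase-to-object pairs and recovers the plain sentence.

```python
import re

# Hypothetical markup: <p>noun phrase</p>[object-id]
GROUNDED = re.compile(r"<p>(.+?)</p>\[([\w-]+)\]")


def extract_groundings(annotation):
    """Return the (noun_phrase, object_id) pairs and the sentence without markup."""
    pairs = GROUNDED.findall(annotation)
    plain = GROUNDED.sub(lambda m: m.group(1), annotation)
    return pairs, plain


text = "Put <p>the mug</p>[mug-4] on <p>the desk</p>[desk-1]."
pairs, plain = extract_groundings(text)
print(pairs)  # [('the mug', 'mug-4'), ('the desk', 'desk-1')]
print(plain)  # Put the mug on the desk.
```

Keeping the object ID inline with each phrase means a model sees the language and its 3D referent together during training, rather than as separate labels.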

Could you elaborate on how the hallucination filter contributes to improving the quality of the dataset?

The hallucination filter is essential for verifying that the textual descriptions generated by AI are backed by actual 3D structures in the scenes. Every object mentioned in the text must have a corresponding object in the 3D environment, which minimizes inaccuracies and maintains the integrity of the dataset.
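A filter of this kind can be sketched as a consistency check between generated text and the scene graph. This minimal version is a hypothetical illustration, not the dataset's actual filter: it rejects an annotation if any grounded phrase points at an object ID that is absent from the scene, or at an object whose category name does not appear in the phrase (a deliberately crude heuristic).

```python
# Hypothetical scene graph: object ID -> category label.
SCENE = {"chair-1": "chair", "table-2": "table", "lamp-3": "lamp"}


def is_supported(groundings, scene):
    """True if every (phrase, object_id) pair points at a real object
    whose category name appears in the phrase."""
    for phrase, obj_id in groundings:
        label = scene.get(obj_id)
        if label is None or label not in phrase.lower():
            return False
    return True


def hallucination_filter(annotations, scene):
    """Keep only annotations whose every grounded phrase is backed by the scene."""
    return [a for a in annotations if is_supported(a["groundings"], scene)]


candidates = [
    {"text": "The lamp is above the table.",
     "groundings": [("The lamp", "lamp-3"), ("the table", "table-2")]},
    {"text": "The vase is on the table.",   # no vase exists in the scene
     "groundings": [("The vase", "vase-9"), ("the table", "table-2")]},
    {"text": "The chair faces the sofa.",   # ID exists, but it is a table
     "groundings": [("The chair", "chair-1"), ("the sofa", "table-2")]},
]
kept = hallucination_filter(candidates, SCENE)
print([a["text"] for a in kept])  # ['The lamp is above the table.']
```

A production filter would need a more robust way to match phrases to categories (synonyms, attributes, plurals), but the principle of discarding any text the scene cannot support is the same.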

Why is an error rate of 5% to 8% for synthetic annotations considered reliable and comparable to human annotation?

An error rate of only 5% to 8% is considered excellent given the scale and complexity of these annotations. It puts synthetic annotations on par with those produced by professional human annotators while being far faster to obtain, and that combination of speed and reliability is crucial for maintaining the quality that high-level AI model training requires.

Can you compare the time and cost efficiency of LLM-based annotation versus traditional human annotation methods?

LLM-based annotation vastly outperforms traditional methods because it can produce millions of annotations in a remarkably short time: just two days, in this case. That speed substantially reduces both operational costs and time investment while still maintaining high annotation quality.

How does the ScanRefer benchmark evaluate grounding accuracy for models trained on the 3D-GRAND dataset?

ScanRefer assesses grounding accuracy by measuring how well a model's predicted bounding boxes overlap the ground-truth boxes of the objects being described, typically reported as the fraction of predictions whose intersection over union (IoU) with the true box reaches 0.25 or 0.5. This shows how accurately models trained on 3D-GRAND associate spatial descriptions with the correct objects, which is what makes them practical for real interactions in 3D spaces.
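The overlap criterion can be sketched in a few lines of Python. This assumes axis-aligned boxes stored as `(xmin, ymin, zmin, xmax, ymax, zmax)`; the function names and example boxes are hypothetical, and an actual evaluation would use the benchmark's own scripts.

```python
def box_volume(box):
    """Volume of an axis-aligned box (xmin, ymin, zmin, xmax, ymax, zmax)."""
    return (box[3] - box[0]) * (box[4] - box[1]) * (box[5] - box[2])


def iou_3d(a, b):
    """Intersection over union of two axis-aligned 3D boxes."""
    inter = 1.0
    for i in range(3):
        overlap = min(a[i + 3], b[i + 3]) - max(a[i], b[i])
        if overlap <= 0:
            return 0.0  # boxes do not intersect along this axis
        inter *= overlap
    return inter / (box_volume(a) + box_volume(b) - inter)


def accuracy_at(preds, gts, threshold):
    """Fraction of predicted boxes whose IoU with the ground truth reaches the threshold."""
    hits = sum(iou_3d(p, g) >= threshold for p, g in zip(preds, gts))
    return hits / len(gts)


gt = (0.0, 0.0, 0.0, 1.0, 1.0, 1.0)
preds = [
    (0.0, 0.0, 0.0, 1.0, 1.0, 0.5),  # IoU 0.5
    (0.5, 0.0, 0.0, 1.5, 1.0, 1.0),  # IoU 1/3
    (2.0, 2.0, 2.0, 3.0, 3.0, 3.0),  # no overlap
]
gts = [gt] * len(preds)
print(round(accuracy_at(preds, gts, 0.25), 3))  # 0.667
print(round(accuracy_at(preds, gts, 0.5), 3))   # 0.333
```

The stricter 0.5 threshold rewards boxes that fit the object tightly, while 0.25 mostly checks that the model picked the right object.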

What is the purpose of the 3D-POPE benchmark in the evaluation of object hallucinations?

The 3D-POPE benchmark specifically targets hallucination, measuring how often and in what ways a model claims that an object is present when it is not. Using this benchmark helps ensure that models trained on the dataset stay faithful to what is actually in a scene and hold up in real-world settings.
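POPE-style evaluations pose yes/no questions such as "Is there a piano in this room?" for objects that are present and for objects that are not; answering "yes" for an absent object counts as a hallucination. The sketch below computes the usual summary metrics from such answers. It assumes the model's answers and the ground truth have already been reduced to booleans, and the four example probes are made up for illustration.

```python
def pope_metrics(answers, labels):
    """answers, labels: lists of booleans (True = "yes, the object is present")."""
    pairs = list(zip(answers, labels))
    tp = sum(a and l for a, l in pairs)
    fp = sum(a and not l for a, l in pairs)  # hallucinated objects
    fn = sum(l and not a for a, l in pairs)
    tn = sum(not a and not l for a, l in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "yes_ratio": sum(answers) / len(answers),  # a high value hints at a "yes" bias
    }


# Probes: sofa (present), lamp (present), piano (absent), bathtub (absent).
labels = [True, True, False, False]
answers = [True, True, True, False]  # the model hallucinates the piano
metrics = pope_metrics(answers, labels)
print({k: round(v, 3) for k, v in metrics.items()})
# {'accuracy': 0.75, 'precision': 0.667, 'recall': 1.0, 'f1': 0.8, 'yes_ratio': 0.75}
```

Precision is the metric most directly hurt by hallucination, since every invented object adds a false positive.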

How do the grounding accuracy and hallucination rate of models trained on 3D-GRAND compare to other baseline models?

Models trained on 3D-GRAND show higher grounding accuracy and a significantly lower hallucination rate than the baseline models. The dataset pushes models to link language to spatial positions more precisely, and established baselines do not match them on either metric.

In what ways do you expect 3D-GRAND to impact the interaction between household robots and humans?

3D-GRAND is set to improve how household robots understand and interact with the physical world, allowing them to interpret the complex instructions people naturally give. This could transform daily interactions: robots will be better at perceiving and carrying out tasks that involve nuanced language and spatial understanding, making them valuable assistants in our homes.

What future steps are planned to test and validate the effectiveness of 3D-GRAND on actual robotic systems?

The logical next step is to integrate what has been learned from 3D-GRAND into real-world robotic systems. That will require rigorous testing of how well these AI models handle live spatial data and respond accurately to natural language commands. Through continuous iteration and field trials, the dataset's potential can be validated and refined, unlocking new capabilities for embodied AI.