The simple, unconscious act of picking up a fragile glass bottle and placing it gently into a crate represents a monumental challenge for traditional robotics, a task so fraught with variables that it has long remained beyond the reach of automated systems. This barrier, however, is beginning to crumble, not through more powerful motors or more rigid programming, but through a profound shift in philosophy. Researchers are now building robots that learn with the fluidity of a human apprentice, observing our movements and understanding the physical world through experience rather than code. This emerging paradigm is poised to unlock much of robotics’ untapped potential, moving it from the predictable confines of the assembly line into the dynamic, unstructured environments where human dexterity has always been king.

The Dawn of a New Robotic Era

The transition from pre-programmed machines to learning robots marks the beginning of a new chapter in automation. For decades, the archetypal robot has been a powerful, precise, but ultimately unintelligent arm, blindly repeating a single sequence of motions inside a safety cage. The new generation of robots, however, is being designed to perceive, adapt, and generalize. By watching a human perform a task, these machines can infer the underlying goal and develop the skills to replicate it, even under slightly different conditions. This leap from rote repetition to something closer to comprehension is not merely an incremental upgrade; it redefines what a robot can be and what it can do.

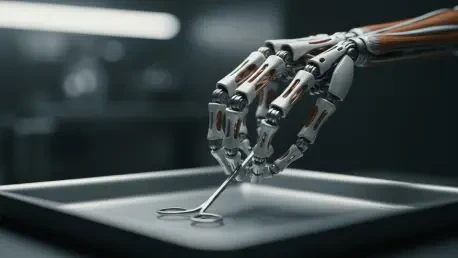

This advancement holds immense significance for industries long considered too complex or delicate for automation. Fields such as logistics, agriculture, and healthcare require machines that can handle a vast variety of objects with care and precision, tasks that are impractical to pre-program because of the sheer number of potential scenarios. Whether it is sorting a bin of mixed vegetables, assisting a surgeon with delicate instruments, or packing a box with fragile goods, the ability to learn by observation allows robots to tackle challenges that demand adaptability and a light touch. As a result, this technology is breaking robotics out of its industrial cage and preparing it for a collaborative future in the human world.

From Rigid Automation to Fluid Learning

Historically, industrial robots have been paragons of reliability within highly structured settings. Their success was built on the principles of control engineering, where every movement is meticulously calculated and encoded based on precise physical models of the robot and its environment. On a car assembly line, where the same part arrives in the exact same orientation every time, this approach is incredibly effective. However, the moment unpredictability is introduced—a slightly different bottle shape, a shifted position, a cluttered workspace—this rigid automation fails spectacularly. The cost and complexity of reprogramming a robot for every minor variation make it impractical for most real-world applications.

Faced with these limitations, the field of robotics has increasingly turned away from classical engineering and toward data-driven machine learning. This pivot represents a move from telling a robot exactly how to perform a task to showing it what the task looks like and allowing it to figure out the how on its own. Instead of relying on a flawless model of the world, a learning-based robot builds its own internal understanding through data and experience. This approach endows the machine with a form of intuition, enabling it to generalize its skills to new objects and situations it has never encountered, thereby overcoming the brittleness that has long defined industrial automation.

The Blueprint for Dexterity: How AI Robots Learn

At the heart of this revolution are research hubs like ETH Zurich, where scientists are engineering the essential components of these new machines: a physically adaptive body and an intelligent, learning brain. The work being done in these labs illustrates a powerful convergence of hardware innovation and software intelligence, where breakthroughs in one domain amplify the potential of the other. By creating systems where a sophisticated physical form is controlled by an equally sophisticated learning algorithm, researchers are laying the groundwork for robots with truly human-like dexterity. The methodologies they employ—drawing inspiration from biology, mimicking human teachers, and learning from virtual experience—collectively form the blueprint for the next generation of robotics.

Bio-Inspired Hardware: The Body

A key part of this blueprint is the radical rethinking of the robot’s physical form, a philosophy championed by Robert Katzschmann at the Soft Robotics Lab. His team is moving away from the conventional design of rigid metal limbs with motors embedded directly in the joints. Instead, they are developing musculoskeletal robotic hands that more closely mirror human anatomy. These hands feature fingers actuated by a network of artificial tendons that run through complex rolling joints, granting them a natural suppleness and range of motion.
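
To make the tendon idea concrete, here is a minimal Python sketch of tendon-driven finger kinematics. It assumes, for simplicity, that a single flexor tendon crosses every joint with a constant moment arm rᵢ, so the tendon excursion is Δl = Σ rᵢθᵢ and a tendon tension T produces a torque rᵢT at each joint. The joint names and moment-arm values are hypothetical, and real rolling joints may have moment arms that change with joint angle.

```python
import math
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Joint:
    name: str
    moment_arm_m: float  # assumed constant tendon moment arm (pulley radius), metres

def tendon_excursion(joints: List[Joint], angles_rad: List[float]) -> float:
    """Tendon length pulled in when the joints flex to the given angles.

    Simplifying assumption: one tendon crosses every joint with a constant
    moment arm, so each joint contributes r_i * theta_i.
    """
    if len(joints) != len(angles_rad):
        raise ValueError("need one angle per joint")
    return sum(j.moment_arm_m * a for j, a in zip(joints, angles_rad))

def joint_torques(joints: List[Joint], tension_n: float) -> Dict[str, float]:
    """Torque (N*m) at each joint produced by one tendon under the given tension (N)."""
    return {j.name: j.moment_arm_m * tension_n for j in joints}

# Hypothetical three-joint finger; values chosen for illustration only
finger = [Joint("MCP", 0.010), Joint("PIP", 0.008), Joint("DIP", 0.006)]

if __name__ == "__main__":
    curl = [math.radians(60)] * 3
    print(f"tendon pulled in: {tendon_excursion(finger, curl) * 1000:.1f} mm")
    print(joint_torques(finger, 20.0))
```

One tendon fixes only the weighted sum of the joint angles, not each angle individually, which is part of why such fingers can passively wrap around objects of different shapes.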

This bio-inspired design is not merely for aesthetic purposes; it is a functional necessity for achieving true dexterity. By constructing robotic bodies from a strategic combination of soft and rigid materials, Katzschmann’s team creates machines with inherent compliance. This allows them to interact with objects and their environment more safely and adaptively than their rigid counterparts. Katzschmann argues that a robot’s physical body is just as critical as its controlling AI. He posits that nature has already perfected the design for versatile and stable systems—using muscles for softness and skeletons for load-bearing—and that emulating these principles is essential for creating robots that can generalize their skills effectively in the real world.

Imitation Learning: The Brain

With an adaptive body in place, the next challenge is to give it an intelligent brain. One of the most effective ways to do this is imitation learning, a technique Katzschmann’s lab has been refining. Here, the robot learns by watching a human demonstrator. An operator wears a specialized glove fitted with motion sensors and a camera, which captures the precise movements of their hand and fingers as they perform a task such as grasping a bottle. External cameras record the same demonstration from multiple angles, creating a rich dataset of successful actions.
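
One way to picture the resulting dataset is as a sequence of time-stamped frames. The Python sketch below uses a hypothetical schema (`DemoFrame`, `Demonstration`, and `to_training_pairs` are illustrative names, not ETH’s or Mimic Robotics’ actual format): each frame stores the glove’s finger-joint angles plus IDs of the images captured at that instant, and consecutive frames are paired into (observation, action) examples for training.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class DemoFrame:
    """One time step of a recorded human demonstration (hypothetical schema)."""
    t: float                    # seconds since the demonstration started
    finger_angles: List[float]  # joint angles from the glove's motion sensors, radians
    image_ids: List[str] = field(default_factory=list)  # glove-camera and external-camera frames

@dataclass
class Demonstration:
    task: str
    frames: List[DemoFrame]

    def to_training_pairs(self) -> List[Tuple[List[float], List[float]]]:
        """Pair each frame with the next one: (current hand pose, next hand pose).

        The next pose serves as the 'action' a policy should predict. A real
        pipeline would also feed the camera images into the observation.
        """
        return [(a.finger_angles, b.finger_angles)
                for a, b in zip(self.frames, self.frames[1:])]

# A tiny made-up demonstration of a two-joint grasp
demo = Demonstration("grasp bottle", [
    DemoFrame(0.0, [0.0, 0.0], ["glove_000", "ext1_000"]),
    DemoFrame(0.1, [0.2, 0.1], ["glove_001", "ext1_001"]),
    DemoFrame(0.2, [0.5, 0.3], ["glove_002", "ext1_002"]),
])
pairs = demo.to_training_pairs()
```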

This collected data is then used to train a type of neural network known as a transformer, the same architecture that underlies large language models. Rather than simply replaying the exact movements it was shown, the transformer learns the underlying structure of the task. This enables the robotic hand to generalize, grasping new objects of different shapes and sizes or adapting if the target destination is moved. The learned approach bypasses the need for laborious manual programming and detailed 3D models of the environment, resulting in a remarkably flexible system. The technology has proven successful enough that it now forms the foundation of Mimic Robotics, an ETH spin-off co-founded by Katzschmann.
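
At its core, imitation learning of this kind (often called behavior cloning) is supervised learning: fit a mapping from what the robot observes to what the demonstrator did, then query it in situations the demonstrations did not cover. The sketch below deliberately swaps the transformer for a one-variable linear regression so the principle fits in a few lines; the bottle widths and hand apertures are made-up numbers, and `fit_linear_policy` is a hypothetical helper.

```python
from typing import Callable, Sequence

def fit_linear_policy(observations: Sequence[float],
                      actions: Sequence[float]) -> Callable[[float], float]:
    """Behavior cloning in miniature: least-squares fit of action = w * obs + b."""
    n = len(observations)
    if n < 2 or n != len(actions):
        raise ValueError("need at least two matching (observation, action) pairs")
    mean_o = sum(observations) / n
    mean_a = sum(actions) / n
    cov = sum((o - mean_o) * (a - mean_a) for o, a in zip(observations, actions))
    var = sum((o - mean_o) ** 2 for o in observations)
    if var == 0:
        raise ValueError("observations must vary to fit a slope")
    w = cov / var
    b = mean_a - w * mean_o
    return lambda obs: w * obs + b

# Made-up demonstrations: bottle width (cm) -> how wide the human opened the hand (cm)
widths = [5.0, 6.5, 8.0]
apertures = [6.0, 7.5, 9.0]
policy = fit_linear_policy(widths, apertures)
print(policy(7.0))  # a width never demonstrated; prints 8.0
```

A transformer plays the same role with far richer inputs (camera images, full hand pose, recent history) and outputs, which is what lets it cope with variation a straight line cannot capture.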

Reinforcement Learning: The Experience

Complementing imitation learning is reinforcement learning (RL), a method that allows a robot to learn through trial and error. Computer scientist Stelian Coros likens this process to a person learning to play tennis: the robot attempts a task, receives a “reward” for successful outcomes, and continuously refines its strategy to maximize that reward. Coros emphasizes that robots, unlike some AI systems that learn from static datasets, must learn by doing. Passive observation is not enough; a physical machine needs to build an understanding of cause and effect through direct interaction with the world.
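
Coros’s tennis analogy maps onto a simple loop: act, observe the reward, update an estimate of how good each action is, and repeat. The sketch below implements tabular Q-learning, one classic reinforcement learning algorithm (not necessarily what the ETH groups use), on a hypothetical five-cell corridor where the only reward comes from reaching the last cell.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal (hypothetical toy task)
ACTIONS = (-1, +1)    # move left or right

def step(state, action):
    """Toy environment: reward 1.0 only when the goal cell is reached."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    return nxt, (1.0 if done else 0.0), done

def train_q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

    def greedy(s):
        best = max(q[(s, a)] for a in ACTIONS)
        return rng.choice([a for a in ACTIONS if q[(s, a)] == best])  # break ties randomly

    for _ in range(episodes):
        s = 0
        for _ in range(100):  # cap episode length
            # Explore with probability epsilon, otherwise exploit current estimates
            a = rng.choice(ACTIONS) if rng.random() < epsilon else greedy(s)
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q[(s2, b)] for b in ACTIONS)
            q[(s, a)] += alpha * (target - q[(s, a)])  # learn from the outcome of the trial
            s = s2
            if done:
                break
    return q

q = train_q_learning()
policy = {s: max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(N_STATES - 1)}
print(policy)
```

After enough trial and error, the greedy policy moves right from every cell. Real robot tasks replace the lookup table with a neural network and the corridor with a physics simulator, but the act, reward, update loop is the same.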

To generate the vast amount of experience required for effective RL, researchers are turning to large-scale virtual simulations. At the Robotic Systems Lab (RSL), senior scientist Cesar Cadena explains that, thanks to advances in graphics processing units (GPUs), his team can now run thousands of virtual robots in parallel in the cloud. This lets them generate as much training data in a single hour as they could in an entire year just a few years ago. While cloud-based training is powerful, it poses a challenge for autonomous robots operating where there is no network connectivity, such as a disaster zone. To address this, researchers are developing hybrid systems that place a portion of the learned models and computing power directly on the robot, enabling it to make rapid, local decisions when disconnected from the cloud.
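
For scale, a year holds 8,760 hours, so producing a year’s worth of data in one hour implies roughly an 8,760-fold increase in throughput. The gain comes from stepping many simulated robots in lockstep. The Python sketch below shows that pattern with a hypothetical batch of one-dimensional point masses and a random placeholder policy; on a GPU, `step_batch` would be a single vectorized operation rather than a Python loop, and a real simulator would model full rigid-body dynamics.

```python
import random
from typing import List, Tuple

def step_batch(positions: List[float], velocities: List[float],
               forces: List[float], dt: float = 0.01) -> Tuple[List[float], List[float]]:
    """Advance every simulated robot by one time step (semi-implicit Euler, unit mass)."""
    new_v = [v + f * dt for v, f in zip(velocities, forces)]
    new_p = [p + v * dt for p, v in zip(positions, new_v)]
    return new_p, new_v

def collect_rollouts(num_envs: int, horizon: int, seed: int = 0):
    """Run num_envs toy robots in lockstep and record (state, action, next_state)."""
    rng = random.Random(seed)
    pos = [rng.uniform(-1.0, 1.0) for _ in range(num_envs)]
    vel = [0.0] * num_envs
    transitions = []
    for _ in range(horizon):
        forces = [rng.uniform(-1.0, 1.0) for _ in range(num_envs)]  # placeholder random policy
        new_pos, vel = step_batch(pos, vel, forces)
        transitions.extend(zip(pos, forces, new_pos))  # one transition per robot per step
        pos = new_pos
    return transitions

data = collect_rollouts(num_envs=4096, horizon=10)
print(len(data), "transitions from one batched rollout")
```

The number of transitions grows with the number of parallel environments, so the same wall-clock time yields thousands of times more experience than a single physical robot could gather.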

A Tale of Two Philosophies: Pure Data vs. Hybrid Models

While the AI boom has been transformative, it has also sparked a debate within the robotics community about the most effective path forward. Some experts, like Stelian Coros, view the current trend as an “evolution” rather than a “revolution,” arguing that the data required for robotics is fundamentally different from the abstract data that fuels language models. A robot has a physical body and must learn to generalize movements through physical interaction, a far more complex and data-intensive process than generalizing from text or images.

Coros is a vocal critic of a purely data-driven approach, which he deems “fundamentally unscalable.” He points to research where a robot required approximately 10,000 hours of human demonstrations to learn the single, specific skill of folding a shirt—and even then, it was prone to errors. Relying solely on learned behaviors for every task would require an astronomical amount of training data, making it impractical for creating general-purpose robots. This bottleneck has led many researchers to question whether simply adding more data is the solution to every problem in robotics.
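
To see why Coros calls this unscalable, take the shirt-folding figure at face value and assume, purely for illustration, that every new skill needed a comparable amount of demonstration data:

```latex
\frac{10\,000\ \text{h}}{24\ \text{h/day} \times 365\ \text{days/yr}}
  = \frac{10\,000}{8\,760}\ \text{yr} \approx 1.14\ \text{yr of nonstop demonstration per skill},
\qquad
100\ \text{skills} \times 1.14\ \text{yr} \approx 114\ \text{yr}.
```

Whether transfer between related skills can bend that curve is exactly what the scalability debate turns on.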

In response, Coros’s group has championed a hybrid model that integrates machine learning with the fundamental laws of physics. In this approach, AI handles the interactions that are too complex to model precisely, while established physical principles cover the situations where demonstration data is sparse. For example, a robot learning to throw a ball does not need to see thousands of throws to different targets. Instead, it can use the physics of projectile motion to calculate the necessary trajectory, relying on machine learning only to fine-tune the physical execution of the throw. This combination forms the basis of another spin-off, Flink Robotics, which enhances standard industrial robots with AI-powered image processing and physical models to perform complex sorting and packaging tasks.
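
The throwing example can be sketched in Python to show the division of labor. Physics supplies the launch speed from the ideal projectile range formula R = v² sin(2θ)/g (no drag, release and landing at the same height), and a single learned gain, fitted from just three practice throws, corrects for whatever the model misses. The 15% shortfall in `real_throw` is a made-up stand-in for drag and release losses, and the whole sketch illustrates the hybrid idea rather than Coros’s or Flink Robotics’ actual method.

```python
import math

G = 9.81                  # gravitational acceleration, m/s^2
ANGLE = math.radians(45)  # fixed release angle for this sketch

def predicted_range(speed: float, angle: float = ANGLE) -> float:
    """Ideal projectile range (no drag, release and landing at the same height)."""
    return speed ** 2 * math.sin(2 * angle) / G

def physics_launch_speed(distance: float, angle: float = ANGLE) -> float:
    """Invert R = v^2 sin(2*theta) / g to get the speed needed for a target distance."""
    return math.sqrt(distance * G / math.sin(2 * angle))

def fit_gain(speeds, observed_distances, angle: float = ANGLE) -> float:
    """The learned part: least-squares ratio (through the origin) of observed
    to predicted landing distances, fitted from a few practice throws."""
    predicted = [predicted_range(v, angle) for v in speeds]
    num = sum(p * o for p, o in zip(predicted, observed_distances))
    den = sum(p * p for p in predicted)
    return num / den

def corrected_launch_speed(distance: float, gain: float, angle: float = ANGLE) -> float:
    # Aim the physics model at distance / gain so that the real throw, which
    # lands at roughly gain * ideal range, ends up at the intended distance.
    return physics_launch_speed(distance / gain, angle)

def real_throw(speed: float) -> float:
    """Hypothetical 'real' robot: lands 15% short of the ideal range."""
    return 0.85 * predicted_range(speed)

practice_speeds = [3.0, 4.0, 5.0]
gain = fit_gain(practice_speeds, [real_throw(v) for v in practice_speeds])
speed = corrected_launch_speed(2.0, gain)
print(f"gain={gain:.3f}, lands at {real_throw(speed):.3f} m")  # prints gain=0.850, lands at 2.000 m
```

Because the physics model already captures how range scales with speed, three throws suffice to calibrate it; a purely learned policy would have to discover that relationship from data.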

From the Lab to the Real World: Current Applications

The advanced concepts being developed in university labs are no longer confined to academic papers and proof-of-concept demonstrations. A new wave of technology transfer is bringing these learning-based robots into the commercial sphere, where they are beginning to solve tangible, real-world problems. The emergence of spin-off companies like Mimic Robotics and Flink Robotics from ETH Zurich is a clear indicator that this technology is reaching a level of maturity where it can deliver genuine value to industry.

These companies are applying the core principles of learned dexterity to challenges in sectors like logistics and manufacturing. Flink Robotics, for instance, uses its hybrid AI and physics models to upgrade existing industrial robot arms, giving them the ability to handle the complex and varied work of sorting and packaging parcels for clients like Swiss Post. Mimic Robotics, meanwhile, is commercializing highly dexterous, bio-inspired hands that learn complex manipulation tasks by observing humans. These ventures bridge cutting-edge research and practical application, suggesting that robots that learn are no longer a distant dream but an emerging reality.

Reflection and Broader Impacts

The development of robots with human-like dexterity and learning capabilities carries profound implications. On one hand, it promises to automate tasks previously thought to be exclusive to humans, boosting efficiency and opening up new possibilities in a variety of industries. On the other hand, it presents significant technical and societal challenges that must be addressed as these systems become more widespread. The journey toward truly general-purpose robots is as much about navigating these complexities as it is about technological innovation.

Reflection

The key strengths of this new robotic paradigm are its adaptability and versatility. By learning from demonstration and experience, these robots can operate in dynamic environments and handle a wide variety of objects, freeing automation from the rigid constraints of the factory floor. However, significant hurdles remain. The immense data requirements for training these models are a major bottleneck, and ensuring their reliability and safety in unstructured, high-stakes environments is a critical challenge. The ongoing debate over purely data-driven versus hybrid models highlights that the question of scalability is far from settled, and finding the right balance between learned intuition and programmed knowledge will be crucial for future progress.

Broader Impact

Looking forward, the potential applications for these dexterous robots are vast. In manufacturing, they could perform intricate assembly tasks. In healthcare, they could assist surgeons with delicate procedures or support caregivers. In logistics, they could manage the complex sorting of goods in warehouses. Beyond these, their ability to operate in unpredictable environments makes them promising candidates for hazardous roles such as disaster response and search-and-rescue missions. Ultimately, the spread of these robots could reshape human-robot collaboration, pointing toward a future where intelligent machines work alongside people not as mere tools but as capable partners in a wide range of complex endeavors.

The Future Is Humanoid: Synthesizing Mind and Body

The journey of modern robotics reveals that creating truly capable machines requires more than just smarter algorithms. As the work at institutions like ETH Zurich has demonstrated, the future lies in the deep and inextricable synthesis of an intelligent, learning “brain” with an adaptive, bio-inspired “body.” The elegant efficiency of a musculoskeletal hand is not a separate component from the AI that controls it; rather, the two evolve together, each enabling the other to reach new heights of capability. This holistic approach, which treats mind and body as a unified system, is what will ultimately distinguish the robots of tomorrow from the automatons of the past.

This integrated vision guides the ongoing quest to build general-purpose robots—machines that can operate as effectively and safely in the complex human world as we do. The path ahead is challenging, demanding further breakthroughs in materials science, AI, and computational power. Yet, the progress made in mimicking the fundamental principles of human anatomy and learning has set a clear and compelling direction. The ultimate goal is no longer just to build a machine that can perform a task, but to create a partner that can learn, adapt, and interact with the world with a semblance of the grace and intuition that comes so naturally to us.