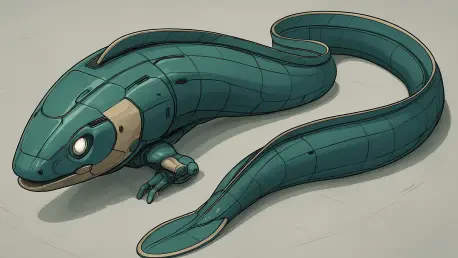

Today we explore the intersection of nature and technology with Oscar Vail, a technology expert whose work spans robotics and bio-inspired engineering, alongside a passion for emerging fields like quantum computing and open-source innovation. Oscar has been instrumental in exploring how natural systems can inspire robotic designs. We discuss his team’s latest research on eels, creatures with remarkable locomotive abilities, and how their biology informed the creation of an adaptive robot. Our conversation covers the intricacies of eel movement, the role of sensory feedback in locomotion, the development of the robot, and the broader implications for robots navigating complex environments.

How did you first become fascinated by eels and their extraordinary ability to move in such diverse environments?

I’ve always been drawn to nature’s problem-solvers, and eels are a prime example. What struck me was their ability to glide through water with elegance and then, almost unbelievably, crawl across land. Unlike most vertebrates, they don’t rely solely on limbs or rigid structures for movement. Their elongated bodies and flexible neural control allow them to adapt seamlessly to different terrains. Even more astonishing is their resilience—eels can keep swimming even after a spinal cord injury, which would paralyze most other animals. That kind of adaptability screamed potential for robotics, and I knew we had to dig deeper into how they achieve it.

What specific characteristics of eels made them stand out as the ideal model for your robotic research over other animals?

We looked at a variety of creatures, like snakes and lampreys, which also have elongated bodies and unique movement patterns. But eels stood out because they master two environments, water and land, and can maintain locomotion even after severe neural disruption. Their reliance on sensory feedback, like stretch and pressure signals from their skin, to adjust movement in real time was particularly intriguing. It’s a decentralized control system, not entirely dependent on brain input, which is a goldmine for designing robots that need to operate in unpredictable settings without constant oversight.

Can you explain the core discoveries your team made about how eels control their movement across different settings?

One of the biggest takeaways was how much eels depend on sensory feedback to navigate their world. Their skin senses stretch and pressure as they move, and these signals feed into a neural circuit in each body segment. Think of it as a built-in rhythm generator that keeps their undulating motion in sync, whether they’re swimming or crawling. This decentralized setup lets them adapt on the fly—speeding up, slowing down, or changing direction based on what their body feels. It’s a beautifully simple yet effective mechanism that doesn’t need constant brain commands, which is why they can keep moving even after injury.
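As an editorial illustration of the "built-in rhythm generator" idea, the per-segment circuits can be sketched as a chain of coupled phase oscillators, a common textbook abstraction for segmental pattern generators. This is a minimal sketch, not the team's model: the function names, the coupling strength, the frequency, and the choice of one full body wave along eight segments are all assumptions made for the example.

```python
import math

def simulate_chain(n_segments=8, freq_hz=1.0, coupling=5.0,
                   dt=0.01, steps=5000):
    """Euler-integrate a chain of phase oscillators, one per body segment.

    All parameter values are hypothetical and chosen only for illustration.
    """
    lag = 2 * math.pi / n_segments   # target phase lag between neighbours
    omega = 2 * math.pi * freq_hz    # intrinsic rhythm of every segment
    phases = [0.0] * n_segments      # start with all segments in phase
    for _ in range(steps):
        rates = []
        for i, theta in enumerate(phases):
            rate = omega
            if i > 0:
                # Pull toward trailing the segment in front by `lag`.
                rate += coupling * math.sin(phases[i - 1] - theta - lag)
            if i < n_segments - 1:
                # Pull toward leading the segment behind by `lag`.
                rate += coupling * math.sin(phases[i + 1] - theta + lag)
            rates.append(rate)
        phases = [p + r * dt for p, r in zip(phases, rates)]
    return phases, lag

def neighbour_lags(phases):
    """Phase difference between each segment and the one behind it."""
    return [phases[i] - phases[i + 1] for i in range(len(phases) - 1)]

phases, lag = simulate_chain()
lags = neighbour_lags(phases)
```

No segment is told what the whole body should do. Each one only compares itself with its neighbours, yet the differences in `lags` settle to the same value along the chain, which corresponds to a wave travelling from head to tail.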

How did you translate the eel’s sensory feedback system into the design of your new robot?

We focused on mimicking those stretch and pressure signals in the robot’s structure. We built a neural circuit model inspired by the eel’s central pattern generator (CPG), embedding sensors along the robot’s body to detect deformation and contact forces. These sensors act like the eel’s skin, feeding data into the control system to adjust movement in real time. For example, if the robot encounters resistance, the stretch feedback helps it push harder, much like an eel would. This setup allowed the robot to swim smoothly in water and crawl on land during our tests, adapting its gait based on the environment.
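To make the "push harder against resistance" behaviour concrete, here is a hedged single-joint sketch. It assumes the rhythm generator supplies a target bending angle and a sensor reports the measured angle; the gap between them stands in for the stretch signal. The function `joint_command` and its gains are hypothetical and do not describe the robot's actual controller.

```python
import math

def joint_command(phase, measured_angle, amplitude=0.5,
                  stiffness=2.0, stretch_gain=3.0):
    """Return a torque command for one joint from its oscillator phase.

    All gains are hypothetical; a real robot would need tuning.
    """
    target_angle = amplitude * math.sin(phase)  # set-point from the rhythm (rad)
    stretch = target_angle - measured_angle     # how far the body lags the set-point
    # Baseline proportional term plus a term that grows with the size of the
    # stretch, so a held-back joint is driven harder than a free one.
    return stiffness * stretch + stretch_gain * stretch * abs(stretch)

phase = math.pi / 2                                   # peak of the bending cycle
free = joint_command(phase, measured_angle=0.45)      # joint nearly reaches target
blocked = joint_command(phase, measured_angle=0.10)   # obstacle holds the joint back
```

In this sketch the blocked joint receives a much larger command than the free one, without any explicit obstacle detection or pre-programmed response.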

What were some of the challenges or obstacles the robot had to navigate in your experiments, and how did it handle them?

We threw a variety of challenges at it—uneven surfaces, barriers in water, and rough terrain on land. One test involved placing physical blocks in its path to see if it could maneuver around or over them. The stretch feedback was key here; it enabled the robot to sense resistance and generate extra thrust to push against or wiggle past the obstacle. It wasn’t perfect—there were moments of trial and error—but watching it adapt without pre-programmed instructions was a huge win. It showed us how powerful bio-inspired sensory feedback can be for autonomous navigation.

Your research also explored spinal cord injuries in eels. Can you share what you learned from those experiments and how they influenced the robot’s design?

Studying real eels with severed spinal cords was eye-opening. Even after such a drastic injury, they could still coordinate swimming movements because their body segments rely on local neural circuits and sensory feedback, not just brain signals. We mirrored this in simulations and robot tests by cutting off centralized control and letting the sensory-driven circuits take over. The robot maintained a surprising level of coordination across its body, syncing movements on either side of the ‘injury’ site. It confirmed that a decentralized, feedback-based system could make robots far more robust against damage or failure.
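The "injury" experiment can be illustrated with a coupled phase-oscillator toy model. In this sketch, which rests on several assumptions, cutting the cord removes the neural link between two adjacent segments, while sensory feedback is modelled as a weaker body-mediated coupling that remains across the cut. The segments behind the cut are also given a slightly slower intrinsic rhythm so that loss of coordination is visible. The function name, gains, and detuning are hypothetical, and treating stretch feedback as a simple phase coupling is a simplification rather than the team's model.

```python
import math

def phase_gap_across_cut(sensory_gain, n_segments=8, cut_after=3,
                         coupling=5.0, detune_hz=0.1, dt=0.01, steps=6000):
    """Return how far the phase lag across the cut ends up from its healthy value."""
    lag = 2 * math.pi / n_segments
    # Assumption: segments behind the cut oscillate slightly slower on their own.
    omegas = [2 * math.pi * (1.0 if i <= cut_after else 1.0 - detune_hz)
              for i in range(n_segments)]
    phases = [-i * lag for i in range(n_segments)]  # start from a healthy body wave
    for _ in range(steps):
        rates = list(omegas)
        for i in range(n_segments - 1):
            # The neural link is lost at the cut; only sensory coupling remains there.
            k = sensory_gain if i == cut_after else coupling + sensory_gain
            pull = k * math.sin(phases[i] - phases[i + 1] - lag)
            rates[i] -= pull
            rates[i + 1] += pull
        phases = [p + r * dt for p, r in zip(phases, rates)]
    return phases[cut_after] - phases[cut_after + 1] - lag

locked_gap = phase_gap_across_cut(sensory_gain=2.0)    # feedback bridges the cut
drifting_gap = phase_gap_across_cut(sensory_gain=0.0)  # no feedback: halves drift apart
```

With feedback, the two halves stay phase-locked at a small, constant offset. Without it, the gap grows steadily as the halves run at their own rhythms. In this toy model the sensory coupling must be strong enough relative to the frequency mismatch for locking to occur.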

Looking ahead, how do you envision this eel-inspired robot impacting real-world applications in robotics?

The potential is massive, especially for environments that are tough to navigate or unpredictable. Think underwater exploration—searching shipwrecks or inspecting pipelines—where a robot needs to swim and maneuver around obstacles autonomously. On land, it could assist in disaster zones, crawling through rubble to locate survivors. The resilience inspired by eels also means these robots could keep functioning even if parts fail, which is critical for long-term missions in remote areas. We’re looking at systems that don’t just mimic nature but solve real human problems with that adaptability.

What is your forecast for the future of bio-inspired robotics, especially in terms of learning from creatures like eels?

I think we’re just scratching the surface of what bio-inspired robotics can achieve. Creatures like eels teach us that simplicity and decentralization can outperform complex, top-down control systems in dynamic environments. Over the next decade, I expect we’ll see a surge in robots that borrow from nature’s playbook—flexible, resilient, and energy-efficient designs that can tackle everything from environmental monitoring to medical applications. The key will be integrating more sophisticated sensory feedback and neural models, pushing us closer to machines that don’t just move like animals but ‘think’ like them in terms of adaptation. I’m excited to see where this journey takes us.