As demand for efficient, accurate data retrieval grows, EraRAG offers a graph-based retrieval system built for corpora that change continuously. It was developed jointly by researchers from Huawei, The Hong Kong University of Science and Technology, and WeBank. Large language models (LLMs) are powerful, but on their own they struggle to access up-to-the-minute information and to handle complex reasoning tasks. Retrieval-Augmented Generation (RAG) helps by supplying external context, yet conventional RAG systems may falter under a continuous influx of data. EraRAG is designed to adapt: it aims to keep retrieval efficient, scalable, and accurate as the corpus changes, so that LLM-backed applications stay accurate and responsive.

Innovative Approach with Graph-Based Retrieval

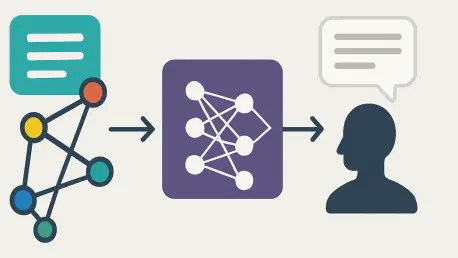

EraRAG’s most distinctive innovation is its use of hyperplane-based Locality-Sensitive Hashing (LSH) to group corpus chunks by semantic similarity. Each text passage is mapped to a binary hash code by projecting its vector representation onto random hyperplanes, and passages with matching codes are grouped together, which streamlines retrieval. On top of these groups, EraRAG builds a hierarchical, multi-layered graph that supports fast, localized updates when new data arrives. Only the affected segments need to be merged, split, or re-summarized, which reduces computational and token costs by up to 95% compared with other systems. Because changes are localized and incremental, EraRAG can sustain performance without the overhead usually associated with frequent data updates. The system also preserves its initial set of hyperplanes, so bucket assignments are reproducible and deterministic, a consistency that matters for ongoing data processing.
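The hashing step can be illustrated with a minimal sketch. It is not EraRAG’s actual code: the function names (`make_hyperplanes`, `hash_code`, `bucketize`), the fixed seed, and the toy four-dimensional vectors are all assumptions made for illustration, and a real system would hash embedding vectors produced by an encoder model.

```python
import random

def make_hyperplanes(num_bits, dim, seed=0):
    """Draw num_bits random hyperplane normals once, from a fixed seed.

    Reusing the same hyperplanes for every later insertion is what makes
    bucket assignments reproducible (hypothetical helper, not EraRAG's API).
    """
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]

def hash_code(vector, hyperplanes):
    """Return one bit per hyperplane: which side of the plane the vector is on."""
    bits = []
    for plane in hyperplanes:
        dot = sum(p * v for p, v in zip(plane, vector))
        bits.append("1" if dot >= 0 else "0")
    return "".join(bits)

def bucketize(embeddings, hyperplanes):
    """Group chunk ids by hash code; chunks with equal codes share a bucket."""
    buckets = {}
    for chunk_id, vector in embeddings.items():
        buckets.setdefault(hash_code(vector, hyperplanes), []).append(chunk_id)
    return buckets

# Toy usage: the vectors stand in for real chunk embeddings.
planes = make_hyperplanes(num_bits=8, dim=4, seed=42)
toy_embeddings = {
    "chunk-a": [0.3, -1.2, 0.7, 2.0],
    "chunk-b": [0.6, -2.4, 1.4, 4.0],   # same direction as chunk-a
    "chunk-c": [-0.3, 1.2, -0.7, -2.0], # opposite direction
}
print(bucketize(toy_embeddings, planes))
```

Because each bit depends only on the sign of a dot product, vectors that point in similar directions tend to agree on most bits, and a vector hashed again with the same stored hyperplanes always lands in the same bucket.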

EraRAG combines these processing capabilities with practical adaptability. In the reported performance studies, it consistently outperformed existing architectures across a range of question-answering benchmarks. Its multi-layer graph structure serves different kinds of queries, retrieving both fine-grained factual details and broader semantic summaries. This balance between retrieval efficiency and adaptability suits settings with a constant influx of data, such as news feeds, research archives, and user-driven platforms. There, it helps LLMs remain factual, responsive, and trustworthy as the underlying datasets evolve.

Reducing Costs and Enhancing Scalability

Beyond retrieval quality, EraRAG also reduces operating costs. Its design minimizes the costs of graph reconstruction and token usage, yielding substantial savings over traditional systems without compromising performance or reliability. This efficiency matters more as data environments become more dynamic and complex and demand solutions that scale without a proportionate increase in operating costs.
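To show where the savings on reconstruction and token usage can come from, here is a rough sketch of a localized update in which only the segments touched by new chunks are re-summarized. It does not reproduce EraRAG’s actual procedure: the size limit `max_size`, the `key.n` naming for split segments, and the `summarize` callback (a stand-in for an LLM call) are illustrative assumptions, and merging of undersized segments is omitted for brevity.

```python
import math

def apply_insertions(segments, new_items, max_size=4):
    """Add (bucket_key, chunk_id) pairs and return the keys that changed.

    segments maps a bucket key to its list of chunk ids. Oversized segments
    are split into near-equal parts (assumed policy, for illustration only).
    """
    touched = set()
    for key, chunk_id in new_items:
        segments.setdefault(key, []).append(chunk_id)
        touched.add(key)

    dirty = set()
    for key in touched:
        members = segments.pop(key)
        if len(members) <= max_size:
            segments[key] = members
            dirty.add(key)
            continue
        n_parts = math.ceil(len(members) / max_size)
        size = math.ceil(len(members) / n_parts)
        for n, start in enumerate(range(0, len(members), size)):
            part_key = f"{key}.{n}"  # hypothetical naming for split segments
            segments[part_key] = members[start:start + size]
            dirty.add(part_key)
    return dirty

def refresh_summaries(segments, summaries, dirty, summarize):
    """Drop summaries of vanished segments and recompute only the dirty ones."""
    for key in list(summaries):
        if key not in segments:
            del summaries[key]
    for key in dirty:
        summaries[key] = summarize(segments[key])

# Toy usage: count how many summarization calls one update triggers.
segments = {"00": ["a", "b"], "01": ["c"], "10": ["d", "e", "f"]}
summaries = {key: "old summary" for key in segments}
calls = []

def fake_summarize(members):
    calls.append(tuple(members))  # stand-in for a token-consuming LLM call
    return "summary of " + ", ".join(members)

dirty = apply_insertions(segments, [("10", "g"), ("10", "h"), ("01", "i")])
refresh_summaries(segments, summaries, dirty, fake_summarize)
print(sorted(dirty), len(calls))
```

In this toy run, segment `00` is never touched, so its summary is reused as-is. The number of summarization calls tracks the number of changed segments rather than the size of the whole corpus, which is the intuition behind the reduced token cost.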

This design gives EraRAG a scalable way to keep language models reliable in rapidly changing scenarios. Because the system scales without a matching rise in cost, organizations can control spending while meeting the demands of modern data retrieval. As the digital ecosystem grows, systems that adapt to new data while keeping output consistent become increasingly important. The architecture supports complex queries and is engineered for large-scale retrieval, which positions EraRAG as a strong option for dynamic data processing.

Future Potential and Considerations

In summary, EraRAG rests on hyperplane-based Locality-Sensitive Hashing (LSH), which efficiently groups corpus chunks by semantic similarity. Text is converted into binary hash codes via random hyperplane projections, and the hierarchical graph built on top accepts fast, localized updates: when new data arrives, only the affected segments need reconfiguration, cutting token and computational costs by up to 95% compared with other systems. Preserving the initial hyperplanes provides the consistency that ongoing data processing applications need. Performance studies indicate that EraRAG outpaces rival architectures on question-answering benchmarks, and its multi-layer graph retrieves both detailed facts and broad semantic summaries. These properties make it well suited to environments with continuous data inflow, such as news and research archives.