Robots used in industrial automation, autonomous vehicles, and many other settings depend on a mix of sensors, and integrating those sensors has long been a hurdle for developers and engineers. The process has traditionally demanded extensive manual calibration and deep expertise, which slows both development and deployment. A research team at the University of Klagenfurt has developed an algorithm that automates the recognition and integration of various sensors into a robot’s navigation framework, without requiring prior knowledge of the specific sensor type. By simplifying a complex and time-consuming task, the work could speed the adoption of robotic technologies across multiple sectors and make it easier for robots to adapt to new hardware configurations.

Breaking Down Barriers in Sensor Integration

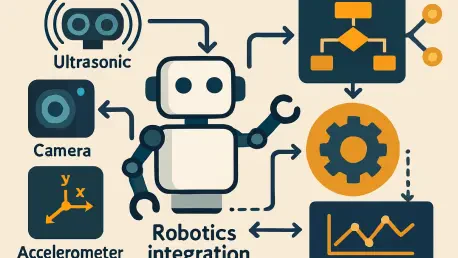

The core of the algorithm is its ability to identify and model a wide range of sensors, such as cameras, accelerometers, and GPS modules, without detailed input from human operators. Historically, integrating these components into a robot’s localization and navigation systems required meticulous calibration in which each sensor’s characteristics were accounted for by hand, a process that often took days or even weeks depending on the complexity of the system. The algorithm instead processes what the researchers describe as a “gray-box” sensor signal alongside a reliable system state, and from these determines the sensor’s type, position, and orientation automatically. This saves time and reduces the dependency on specialized knowledge, so smaller teams and organizations with limited resources can implement sophisticated robotic solutions that were previously out of reach.

The automated approach also addresses a well-documented pain point in the robotics community. Evidence of the need is apparent in the thousands of requests for help with sensor integration on platforms like GitHub, where developers frequently seek guidance on calibration problems. With a streamlined procedure, robots can adapt to new or replacement sensors with minimal downtime. This is particularly valuable in settings such as autonomous vehicle testing or drone operations, where hardware changes are common. Integrating sensors on the fly also makes robotic systems more resilient to hardware variations or failures. Industries that depend on rapid deployment, such as logistics or emergency response, stand to benefit from faster setup times and improved operational efficiency.

Real-World Applications and Movement Requirements

The algorithm applies to a range of robotic platforms, from quadcopters to self-driving cars. Although it does not require prior knowledge of a sensor’s specifics, it does rely on movement for accurate recognition and modeling. The movement can take place in a controlled setting, such as manually moving a device in a lab, or during active operation, such as a drone flight. It generates the data the algorithm needs to analyze the sensor’s behavior and integrate it into the robot’s framework. Because most robotic systems are designed to move, this requirement fits their normal operation, and the algorithm can be used without significant changes to existing workflows or testing protocols.
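Why movement matters can be illustrated with a toy calibration problem. The sketch below is not the Klagenfurt team's method; it assumes a hypothetical velocity sensor rigidly mounted at an unknown offset `r` from the robot's body origin, so that its reading is `v_sensor = v_body + omega x r` in the body frame. The function name `estimate_lever_arm`, the sample layout, and the `min_det` threshold are all assumptions made for illustration. With no rotation, or rotation about a single axis only, the least-squares problem is rank deficient and the offset cannot be recovered.

```python
def skew(w):
    """Cross-product matrix S such that S applied to r equals w x r."""
    x, y, z = w
    return [[0.0, -z, y], [z, 0.0, -x], [-y, x, 0.0]]


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def estimate_lever_arm(samples, min_det=1e-9):
    """Least-squares estimate of the sensor offset r (hypothetical model).

    samples: list of (omega, v_body, v_sensor) 3-vectors in the body frame.
    Returns r as a list, or None when the motion did not excite all three
    axes (the normal matrix is then numerically singular).
    Note: min_det is an illustrative, scale-dependent threshold.
    """
    A = [[0.0] * 3 for _ in range(3)]  # accumulates sum of S^T S
    b = [0.0] * 3                      # accumulates sum of S^T d
    for omega, v_body, v_sensor in samples:
        S = skew(omega)
        d = [v_sensor[i] - v_body[i] for i in range(3)]  # equals omega x r
        for i in range(3):
            for j in range(3):
                A[i][j] += sum(S[k][i] * S[k][j] for k in range(3))
            b[i] += sum(S[k][i] * d[k] for k in range(3))

    D = det3(A)
    if abs(D) < min_det:
        return None  # insufficient movement: r is not observable

    # Solve A r = b with Cramer's rule (fine for a 3x3 system).
    r = []
    for c in range(3):
        M = [row[:] for row in A]
        for i in range(3):
            M[i][c] = b[i]
        r.append(det3(M) / D)
    return r
```

Feeding the function samples recorded while the device rotates about at least two different axes yields an estimate of `r`; samples from a stationary device, or from rotation about one axis only, return `None`. This mirrors, in a much simpler setting, why manually moving a device in a lab or flying a drone provides the data needed for calibration.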

The algorithm is also compatible with a broad spectrum of sensor modalities, including vector measurements such as magnetic field or velocity, as well as measurements with 3 and 6 degrees of freedom (DoF). This versatility makes it useful to developers working on diverse applications, whether in industrial automation or consumer products. Manufacturers, for instance, can iterate on hardware designs more rapidly, knowing that new sensors can be integrated without extensive recalibration. The reduced need for expert intervention also lowers the barrier to entry for emerging markets and startups looking to use robotics, enabling a broader pool of talent to contribute to the development of autonomous systems.
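One way to picture how a sensor's type could be recognized from an unlabeled signal and a known system state is to compare the signal against several candidate measurement models and keep the one with the smallest residual. The following is a minimal sketch of that idea only, not the published algorithm: the three candidate models, the state layout (`R_wb` as a body-to-world rotation matrix, `v_world` as world-frame velocity), and every function name are assumptions. It also assumes the sensor is aligned with the body frame, whereas the algorithm described above estimates position and orientation as well.

```python
import math


def mat_vec(M, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(M[i][j] * v[j] for j in range(3)) for i in range(3)]


def transpose(M):
    return [[M[j][i] for j in range(3)] for i in range(3)]


def rms(errors):
    """Root-mean-square norm of a list of 3-vector errors."""
    total = sum(e[0] ** 2 + e[1] ** 2 + e[2] ** 2 for e in errors)
    return math.sqrt(total / len(errors))


def residual_world_velocity(states, signal):
    # Hypothesis: the sensor reports velocity in the world frame.
    return rms([[z[i] - s["v_world"][i] for i in range(3)]
                for s, z in zip(states, signal)])


def residual_body_velocity(states, signal):
    # Hypothesis: the sensor reports velocity in the body frame.
    errors = []
    for s, z in zip(states, signal):
        predicted = mat_vec(transpose(s["R_wb"]), s["v_world"])
        errors.append([z[i] - predicted[i] for i in range(3)])
    return rms(errors)


def residual_constant_world_vector(states, signal):
    # Hypothesis: the sensor observes a fixed world vector (for example a
    # magnetic field) in the body frame. Rotating each reading back into
    # the world frame should then give the same vector every time.
    rotated = [mat_vec(s["R_wb"], z) for s, z in zip(states, signal)]
    mean = [sum(v[i] for v in rotated) / len(rotated) for i in range(3)]
    return rms([[v[i] - mean[i] for i in range(3)] for v in rotated])


CANDIDATES = {
    "world_velocity": residual_world_velocity,
    "body_velocity": residual_body_velocity,
    "constant_world_vector": residual_constant_world_vector,
}


def classify(states, signal):
    """Return (best_model_name, residuals) for an unlabeled 3-vector signal."""
    scores = {name: f(states, signal) for name, f in CANDIDATES.items()}
    return min(scores, key=scores.get), scores
```

The candidates only become distinguishable when the robot's orientation and velocity change over the recording; for a stationary robot several models explain the data equally well, which is consistent with the movement requirement described earlier.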

Paving the Way for Autonomous Robotics

More broadly, the algorithm is a step toward greater autonomy in robotic systems, in line with the industry’s push for self-configuring technologies. Automating sensor integration minimizes human involvement and lets robots adapt to hardware changes or upgrades. This matters most where time and adaptability are critical, such as disaster response robots that must operate with different sensor setups depending on the mission. Shorter calibration times also translate into cost savings, making robotic solutions more accessible to organizations with constrained budgets and to sectors that previously found robotics too complex or expensive to implement.

The algorithm has been deployed successfully in both controlled and real-world tests, demonstrating its potential to influence how robotic systems are designed. Its ability to handle diverse sensor types through a generalized setup of transformations points toward more flexible and resilient systems, and its reliance on movement for sensor recognition has proven practical and easy to fit into existing operations. The research suggests a clear direction: the robotics field should continue to prioritize solutions that reduce manual effort. Future work could include extending the algorithm to handle more complex sensor interactions or integrating machine learning to predict and optimize sensor performance. Building on this foundation, the industry can move closer to fully autonomous systems that adapt themselves with minimal oversight.