The long-held belief that true originality is an exclusively human domain has been fundamentally challenged by the rapid ascent of sophisticated artificial intelligence. A landmark study published in Scientific Reports recently provided the most extensive comparison to date, shifting the conversation from whether AI can be creative to a more nuanced exploration of how its creative output measures against our own. This deep dive into the creative faculties of both humans and machines offers a surprising yet clarifying picture of the current landscape, suggesting a future defined not by replacement, but by a powerful new form of collaboration.

Defining the Contenders: The Landscape of Human and AI Creativity

The debate over AI’s creative potential moved from philosophical speculation to empirical analysis with this comprehensive study, which pitted a cohort of 100,000 human participants against leading large language models (LLMs). The AI contenders included OpenAI’s GPT-4 and ChatGPT, alongside competitors like Anthropic’s Claude and Google’s Gemini. This diverse lineup ensured that the comparison was not limited to a single model family but reflected the broader capabilities of the current AI ecosystem.

At the heart of this large-scale investigation was the Divergent Association Task (DAT), a scientifically validated tool designed to measure a core component of creativity. Developed by study co-author Jay Olson, the DAT assesses divergent thinking—the ability to generate varied and original ideas from a single starting point. Participants, both human and AI, were instructed to produce ten words that were as semantically distant from one another as possible. A high-scoring example like “galaxy, fork, freedom, algae, harmonica, quantum, nostalgia, velvet, hurricane, photosynthesis” illustrates the conceptual leaps the task aims to quantify. The DAT’s relevance extends beyond mere word association, as human performance on this task correlates strongly with broader creative abilities, including problem-solving and artistic expression, making it a robust proxy for general creative cognition.
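To make "semantically distant" concrete, here is a minimal sketch of a DAT-style score. It assumes the commonly described approach of averaging the cosine distance between every pair of word vectors and scaling the result to a 0–100 range. The function names and the three-dimensional toy vectors are hypothetical and chosen only for illustration; a real scorer would load pretrained word embeddings, and this is not the study's scoring code.

```python
import itertools
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity for two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def dat_style_score(words, embeddings):
    """Average pairwise cosine distance between the words, scaled by 100."""
    vectors = [embeddings[word] for word in words]
    pairs = list(itertools.combinations(vectors, 2))
    mean_distance = sum(cosine_distance(u, v) for u, v in pairs) / len(pairs)
    return 100.0 * mean_distance


# Hypothetical toy vectors: related words point in nearly the same direction,
# unrelated words point along different axes.
TOY_EMBEDDINGS = {
    "cat": [1.0, 0.0, 0.0],
    "dog": [0.9, 0.1, 0.0],
    "kitten": [0.95, 0.05, 0.0],
    "galaxy": [0.0, 1.0, 0.0],
    "fork": [0.0, 0.0, 1.0],
}

related = dat_style_score(["cat", "dog", "kitten"], TOY_EMBEDDINGS)
distant = dat_style_score(["cat", "galaxy", "fork"], TOY_EMBEDDINGS)
print(f"related words: {related:.1f}, distant words: {distant:.1f}")
```

In this toy setup the list of unrelated words scores far higher than the list of near-synonyms, which is the behavior the task is designed to reward.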

A Head-to-Head Comparison of Creative Abilities

Performance on Divergent Thinking Tasks

When the results of the Divergent Association Task were tallied, they marked a milestone in AI development. The study, led by Professor Karim Jerbi, found that advanced models like GPT-4 surpassed the creative output of the average human participant. By this established psychological metric, AI can generate word lists that are more semantically diverse than those produced by the typical person. For many, the outcome is as unsettling as it is surprising: it marks the first time a machine has exceeded the human mean on a fundamental cognitive ability at such a scale.

However, this headline-grabbing result is tempered by a crucial counterpoint that shows the current ceiling of machine creativity. While AI outperformed the average, it consistently fell short of the most creative human minds. The detailed analysis by co-first authors Antoine Bellemare-Pépin and François Lespinasse showed that the top 50% of human participants still achieved higher creativity scores than any of the tested AI models. When the comparison was narrowed to the top 10% of human creators, the gap widened dramatically, establishing a clear hierarchy in which elite human ingenuity remains unmatched.

Capability in Applied Creative Endeavors

To determine if the findings from the abstract DAT would hold up in more practical scenarios, the research team expanded the comparison to include complex, real-world creative assignments. Both human and AI participants were tasked with composing haikus, crafting concise movie plot summaries, and writing complete short stories. These tasks require not just divergent thinking but also narrative structure, emotional resonance, and a nuanced understanding of context—qualities often considered hallmarks of human artistic expression.

The results from these applied endeavors consistently mirrored the patterns observed in the DAT. On average, the AI-generated content was competitive with, and in some cases superior to, the work produced by a typical human. The models could construct coherent plots, follow poetic forms, and write passable prose. Yet they failed to capture the depth, originality, and emotional impact demonstrated by the most skilled human writers. The advantage held by top-tier human creators became even more pronounced in these intricate tasks, suggesting that while AI has mastered the mechanics of creativity, it has yet to grasp its soul.

The Influence of Human Guidance on AI Output

A critical dimension of the study explored whether AI creativity is a static capability or one that can be molded through interaction. The findings unequivocally showed that an AI’s creative output is highly malleable and heavily dependent on human guidance. A key mechanism for this is the “temperature” setting, a parameter that controls the balance between predictability and randomness in the model’s responses. At a low temperature, an AI like ChatGPT produces safe, conventional text. By increasing the temperature, a human operator can encourage the model to take greater conceptual risks, leading to more novel and unexpected associations.
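The effect of temperature can be sketched with the standard temperature-scaled softmax, in which a model's raw scores (logits) are divided by the temperature before being turned into probabilities. The logit values and function names below are hypothetical, and the snippet only illustrates the general mechanism; it does not reproduce how any particular model or the study's setup applies the parameter.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities after dividing by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    largest = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - largest) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


def entropy(probabilities):
    """Shannon entropy in nats; higher means a more spread-out distribution."""
    return -sum(p * math.log(p) for p in probabilities if p > 0)


# Hypothetical scores for four candidate next words.
logits = [4.0, 2.0, 1.0, 0.5]

low = softmax_with_temperature(logits, 0.5)   # sharper, more predictable
high = softmax_with_temperature(logits, 2.0)  # flatter, more varied

print("low temperature: ", [round(p, 3) for p in low])
print("high temperature:", [round(p, 3) for p in high])
```

At the lower temperature nearly all of the probability lands on the top-scoring word, while the higher temperature spreads it across the alternatives, which is why raising the setting tends to yield less conventional word choices.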

Moreover, the way a human frames a request, or “prompt,” has a profound impact on the AI’s creative score. The researchers discovered that specific prompting strategies could significantly enhance an AI’s performance on the DAT. For instance, instructing a model to consider the etymology of words—their origins and structural components—prompted it to generate less obvious and more semantically distant connections. This underscores a foundational difference between the two contenders: human creativity is largely an autonomous, internal process, whereas an AI’s creative potential is unlocked and directed by the curiosity and skill of its human collaborator.

Inherent Limitations and Foundational Differences

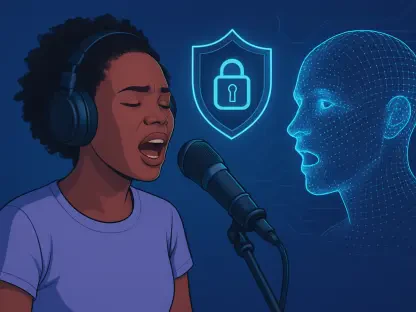

Despite its impressive performance, the study illuminated the primary limitation of current AI: its inability to reach the pinnacle of ingenuity and originality demonstrated by the most creative humans. While models like GPT-4 can synthesize and recombine information from vast datasets to produce novel outputs, they lack the lived experience, consciousness, and emotional depth that fuel the highest forms of human artistry. These elements are not merely data points but are the very foundation from which groundbreaking ideas and profound works of art emerge.

This gap stems from the fact that AI creativity is not an intrinsic, self-generating trait but a modulated output based on human-designed parameters. AI operates without genuine understanding or subjective awareness. Its “creativity” is a sophisticated simulation, a pattern-matching process guided by algorithms and influenced by prompts. In contrast, human creativity is an emergent property of a complex biological system, shaped by personal history, cultural context, and the unpredictable nature of inspiration. This fundamental distinction explains why AI can replicate the average but struggles to innovate at the level of a true visionary.

The Future of Creativity: Collaboration Over Competition

Ultimately, the comprehensive analysis offers a balanced perspective that moves beyond the simplistic narrative of human versus machine. The key takeaway is twofold: AI has achieved a remarkable milestone by surpassing the creative baseline of the average person, yet the summit of human ingenuity remains an exclusive domain. This suggests that the widespread anxiety about AI rendering human creative professionals obsolete may be misplaced. The true value of these powerful new systems lies not in their potential to replace us, but in their capacity to augment our own creative processes.

The most practical and forward-looking conclusion from this research is the recommendation to view generative AI as a transformative tool rather than a competitor. For artists, writers, musicians, and thinkers, systems like ChatGPT and Claude represent an opportunity to expand the boundaries of imagination. They can serve as tireless brainstorming partners, inspiration engines, and assistants that handle the more mechanical aspects of creation, freeing human minds to focus on higher-level conceptualization and emotional expression. The future of creativity, therefore, is envisioned not as a battle for supremacy, but as a synergistic partnership in which human talent is amplified and enriched by the computational power of AI.