Translating the often ambiguous language of human creativity into the precise instructions required for robotic assembly remains one of the harder open problems in automated design. Researchers at the Massachusetts Institute of Technology (MIT), in collaboration with Google DeepMind and Autodesk Research, have developed an AI-driven robotic system that fabricates multicomponent physical objects directly from natural language prompts. The framework aims to make the creation of tangible items, such as custom furniture, as straightforward as issuing a simple command. It departs from traditional design tools, which often present steep learning curves and slow down rapid, iterative development. By integrating generative artificial intelligence with robotics, the research builds an end-to-end pipeline that not only interprets a user’s creative intent but also physically realizes it through a collaborative co-design process.

From Abstract Idea to Physical Reality

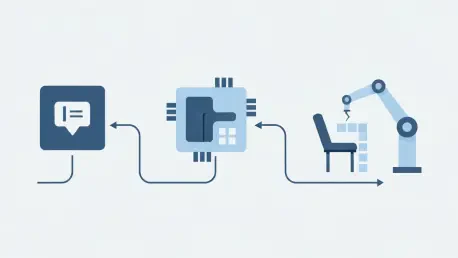

The central focus of this research is a multi-stage system that bridges the gap between language and physical reality. The process begins when a user provides a simple text prompt, which a generative AI model turns into a 3D digital representation of the object, known as a mesh. This initial output, however, is purely geometric and lacks the component-level detail a robot needs to begin assembly. The limitation reflects a common challenge in generative 3D modeling: segmenting a continuous shape into discrete, functional parts requires an understanding that extends beyond form to intended use.

This study directly addresses the profound challenge of translating abstract human ideas into detailed, functional, and physically assembled objects. The system is engineered to overcome the inherent ambiguity of language, where a single prompt like “a chair” can correspond to an almost infinite number of designs. It accomplishes this by not only generating a plausible shape but also imbuing it with a logical structure that can be physically realized. This work represents a critical step toward a future where the barrier between imagination and creation is significantly lowered, allowing for a more direct and intuitive flow from conceptual thought to a tangible product.

Revolutionizing Design and Manufacturing

For decades, the creation of physical objects has been dominated by complex computer-aided design (CAD) software and manufacturing processes that demand specialized expertise. These traditional tools, while powerful, impose a steep learning curve that excludes most non-experts from the design process. Moreover, they often limit the potential for rapid iteration, as each design change requires significant manual effort and technical knowledge, slowing down the creative cycle and increasing development costs. This paradigm has historically confined advanced design and fabrication to professional and industrial settings.

The research’s importance lies in its potential to democratize creation, making design and fabrication more accessible, intuitive, and sustainable for everyone. By enabling users to create with natural language, the system eliminates the need for technical proficiency in complex software, empowering individuals to design and build custom objects tailored to their specific needs and preferences. This points toward a future of decentralized, on-demand manufacturing, where items can be produced locally or even in the home. Such a shift could disrupt traditional supply chains that rely on mass production and global shipping, fostering a more personalized and resource-efficient ecosystem.

Research Methodology, Findings, and Implications

Methodology

The system’s methodology unfolds across a multi-stage pipeline that begins with a generative AI model creating a 3D mesh from a user’s text prompt. To overcome the limitations of this initial geometric model, researchers introduced a Vision-Language Model (VLM), which serves as the intelligent core of the system. Pre-trained on vast quantities of images and text, the VLM possesses a nuanced understanding of how objects are constructed and used. It meticulously analyzes the 3D mesh’s geometry and reasons about its functionality, deciding where to place prefabricated parts like structural beams and surface panels based on its understanding of the object’s purpose.
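The component-placement step described above can be pictured with a minimal Python sketch. Everything in it is a hypothetical illustration rather than the researchers' implementation: the `Region` and `Placement` types, the region names, and especially `toy_reasoner`, a hard-coded lookup that merely stands in for the VLM so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass(frozen=True)
class Region:
    """A named region of the generated mesh (hypothetical representation)."""
    name: str


@dataclass(frozen=True)
class Placement:
    """A decision to put one prefabricated part on one region."""
    region: str
    part: str    # "panel" or "beam"
    reason: str  # functional justification for the choice


# The reasoning step takes the prompt and a region and returns a placement,
# or None if no part is needed. In the described system a VLM plays this role.
Reasoner = Callable[[str, Region], Optional[Placement]]


def toy_reasoner(prompt: str, region: Region) -> Optional[Placement]:
    """Hard-coded stand-in for the VLM; not how the real model reasons."""
    if region.name in {"seat", "backrest"}:
        return Placement(region.name, "panel", "this area supports a person")
    if region.name.startswith("leg"):
        return Placement(region.name, "beam", "legs carry structural load")
    return None  # e.g. open gaps in the mesh receive no part


def plan_components(prompt: str, regions: List[Region],
                    reasoner: Reasoner) -> List[Placement]:
    """Ask the reasoner about every region and keep the non-empty answers."""
    plan = []
    for region in regions:
        placement = reasoner(prompt, region)
        if placement is not None:
            plan.append(placement)
    return plan


chair_regions = [Region("seat"), Region("backrest"),
                 Region("leg_front_left"), Region("armrest_gap")]
chair_plan = plan_components("a chair", chair_regions, toy_reasoner)
for p in chair_plan:
    print(f"{p.part} -> {p.region}: {p.reason}")
```

Passing the reasoner in as a function keeps the planning loop independent of whichever model supplies the decisions, which is an assumption of this sketch and not a documented design choice.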

A key innovation of this system is the integration of a human-AI co-design process. The AI does not operate in isolation; rather, it engages in an iterative dialogue with the user. After the VLM proposes an initial design, the user can provide natural language feedback to refine it, such as “make the backrest taller” or “use fewer panels on the seat.” This collaborative approach navigates the vast design space effectively, empowering users with a sense of control and ownership over the final product.
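The feedback loop described above might be structured roughly as follows. This is a sketch under stated assumptions: the design is reduced to a dictionary of made-up parameters, and `toy_reviser` is a hypothetical stand-in that recognizes only the two example phrases from the text, whereas the actual system would interpret free-form feedback with the VLM.

```python
from typing import Callable, Dict, Iterable, List

Design = Dict[str, float]                  # hypothetical: parameter name -> value
Reviser = Callable[[Design, str], Design]  # stand-in for the model revising a design


def toy_reviser(design: Design, feedback: str) -> Design:
    """Return a revised copy of the design; unknown feedback changes nothing."""
    revised = dict(design)
    if feedback == "make the backrest taller":
        revised["backrest_height_cm"] += 10  # illustrative increment
    elif feedback == "use fewer panels on the seat":
        revised["seat_panels"] = max(1, revised["seat_panels"] - 1)
    return revised


def co_design(initial: Design, feedback_rounds: Iterable[str],
              revise: Reviser) -> List[Design]:
    """Apply each round of user feedback in turn and keep every version."""
    history = [initial]
    for feedback in feedback_rounds:
        history.append(revise(history[-1], feedback))
    return history


history = co_design(
    {"backrest_height_cm": 40, "seat_panels": 4},  # illustrative starting design
    ["make the backrest taller", "use fewer panels on the seat"],
    toy_reviser,
)
final_design = history[-1]
print(final_design)
```

Keeping the full history, rather than only the latest design, would let a user step back to an earlier proposal if a revision goes in the wrong direction.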

Once the digital design is finalized, the system transitions from the digital to the physical realm. A robotic system takes the finalized blueprint and assembles the object using a library of prefabricated, modular, and reusable components. This final stage underscores the system’s commitment to sustainability. Because the components are designed to be easily disassembled and reconfigured, they can be repurposed for new creations, drastically reducing the material waste associated with traditional prototyping and manufacturing methods.
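The reuse idea can be illustrated with a small inventory model: parts are checked out for a build and returned on disassembly, so the same stock serves successive designs. The `PartLibrary` class, the part names, and all quantities are assumptions made for this sketch and do not describe the researchers' actual component library.

```python
from collections import Counter
from typing import Dict


class PartLibrary:
    """Tracks how many reusable parts of each kind are currently free."""

    def __init__(self, stock: Dict[str, int]) -> None:
        self._stock = Counter(stock)

    def available(self, part: str) -> int:
        return self._stock[part]

    def check_out(self, needed: Dict[str, int]) -> None:
        """Reserve parts for a build, or fail without changing the stock."""
        short = {p: n - self._stock[p]
                 for p, n in needed.items() if n > self._stock[p]}
        if short:
            raise ValueError(f"not enough parts, missing: {short}")
        self._stock.subtract(needed)

    def check_in(self, parts: Dict[str, int]) -> None:
        """Return parts to the library after an object is disassembled."""
        self._stock.update(parts)


library = PartLibrary({"beam": 12, "panel": 6})  # illustrative quantities
chair = {"beam": 8, "panel": 2}
table = {"beam": 10, "panel": 4}

library.check_out(chair)   # build the chair
library.check_in(chair)    # disassemble it; every part goes back into stock
library.check_out(table)   # the same parts now cover the table
print(library.available("beam"), library.available("panel"))
```

In this example the table could not be built while the chair is still assembled, because only 4 beams would be free; disassembly is what makes the second build possible.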

Findings

To validate the system’s effectiveness, the researchers conducted a user study. Designs generated by the VLM-powered system were compared against objects created by two simpler alternative algorithms. More than 90% of study participants preferred the objects designed by the VLM-powered system, citing their superior functionality and aesthetic coherence. This result provides quantitative support for the VLM’s design capabilities.

Beyond user preference, the study probed the VLM’s ability to articulate the functional reasoning behind its design choices. When prompted, the model could explain that it placed panels on a chair’s seat and backrest because those areas are intended to support a person. This indicates that its decisions drew on a learned understanding of an object’s purpose, not merely on simple geometric rules or random chance. The research culminated in a successful end-to-end demonstration, showing that the system can interpret language, reason about function, incorporate human feedback, and physically construct a complete object.

Implications

The immediate practical applications of this technology are significant, particularly for rapid prototyping in industries like aerospace and architecture. In these fields, iterating on physical models is often a time-consuming and expensive bottleneck. This system offers a way to produce and test physical designs quickly and affordably, accelerating innovation and shortening development cycles. It lets engineers and designers move from concept to physical prototype much faster.

The broader societal impact of lowering the barrier to fabrication could be transformative. The research envisions a future where such systems enable the in-home creation of custom furniture and other household items, tailored perfectly to an individual’s space and taste. This could disrupt traditional supply chains that depend on shipping bulky, mass-produced goods from centralized factories, paving the way for a more localized, personalized, and responsive manufacturing ecosystem. Furthermore, the emphasis on using reconfigurable, modular parts presents a more sustainable model for production and consumption, as it minimizes material waste by promoting the reuse of components across different designs.

Reflection and Future Directions

Reflection

A primary challenge addressed by the research was the complex task of segmenting a continuous 3D mesh into a set of discrete, functional components that a robot could assemble. The VLM was specifically engineered to solve this problem by leveraging its vast knowledge base to infer function from form. By reasoning about how an object is used, the VLM could intelligently partition the abstract geometry into a logical, buildable structure, a capability that represents a significant leap beyond simple geometric processing.

The integration of a human-in-the-loop co-design process proved to be another critical element of the system’s success. The design space for any given prompt is enormous, and relying solely on AI could lead to results that do not align with a user’s specific intent or aesthetic preferences. By allowing for iterative feedback, the system empowers users, giving them a meaningful sense of agency and ownership over the creative process. This collaborative model ensures the final product is not just functional but also personally satisfying.

Future Directions

Looking ahead, the research team aims to enhance the system’s capabilities to understand more nuanced and complex user prompts. A key goal is to enable the AI to reason about specific materials, allowing a user to request an object like “a table made of glass and metal.” This would require the model to understand not only form and function but also the physical properties and assembly constraints associated with different materials.

Another promising avenue for future work involves expanding the library of prefabricated parts to include dynamic components. By incorporating elements like gears, hinges, and motors, the system could move beyond static objects like chairs and tables to create items with more complex functionality, such as adjustable desks, kinetic sculptures, or simple robotic assistants. This expansion would significantly broaden the range of objects that users could design and fabricate on demand.

The Dawn of Accessible, On-Demand Fabrication

This research represents a foundational step toward a new paradigm in which anyone can turn an idea into a physical reality through simple language commands. The integration of generative AI, functional reasoning, human-AI collaboration, and robotic assembly demonstrates a complete pipeline from abstract thought to tangible object. The work shows that advanced AI models can do more than generate images or text; they can be equipped with the practical reasoning needed for real-world fabrication.

The findings pave the way for a more intuitive, sustainable, and personalized manufacturing ecosystem. By removing the technical barriers that have long defined the field of design, the system offers a glimpse of a future where creativity is the only prerequisite for making. The study’s contribution is a significant step toward making advanced design and fabrication tools accessible to all, pointing to a new era of personal manufacturing and distributed innovation.