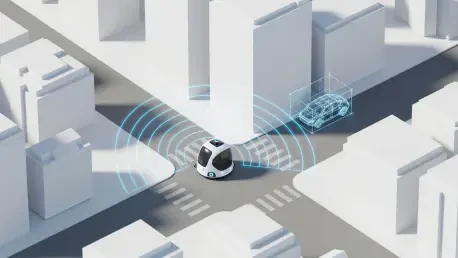

A new radar system developed by researchers at Penn Engineering aims to solve one of the most persistent and dangerous challenges for autonomous vehicles: seeing what is hidden around corners. The technology, named HoloRadar, enables driverless cars and robots to perceive objects and reconstruct entire scenes that lie outside their direct line of sight, a capability known as non-line-of-sight (NLOS) perception. By providing a window into occluded areas, the system addresses a fundamental safety limitation of current autonomous technology and points toward machines that can navigate complex urban environments and industrial settings with far greater proactive awareness and safety.

From Reactive to Proactive Safety

Overcoming Line-of-Sight Limitations

The full-scale deployment of autonomous vehicles has been persistently hampered by their inability to anticipate hazards that are not directly visible, a constraint inherent in their primary sensors. Current sensor suites, dominated by LiDAR and cameras, depend on direct line of sight. LiDAR emits pulses of laser light and times their reflections to build a precise 3D map, while cameras capture visual data; both are blocked by physical obstructions such as buildings, other vehicles, or even dense foliage. This limitation forces an autonomous system into a reactive posture: it can respond to a threat only once the threat becomes visible. A child chasing a ball into the street from between two parked cars, or a vehicle speeding through a blind intersection, leaves very little time to react, even for an advanced AI. HoloRadar is designed to provide the forewarning needed to shift the vehicle’s decision-making from reactive to proactive, giving the system extra time to anticipate, plan, and execute a safe maneuver well before a hazard enters its direct field of view.

The integration of NLOS perception marks a pivotal evolution in a vehicle’s situational awareness, expanding it beyond what is immediate and visible to what is probable and imminent. Rather than replacing existing sensors, HoloRadar augments them, creating a more holistic and resilient perception system. This approach aligns with the industry’s move toward sensor fusion, in which data streams from multiple, diverse sensor types are combined. In such a fused system, LiDAR provides high-resolution geometric detail, cameras offer color, texture, and object classification (such as identifying a pedestrian or a stop sign), and HoloRadar contributes its ability to map hidden spaces. The vehicle’s central processing unit can then synthesize this information into a four-dimensional model of its surroundings (three spatial dimensions plus time), including predictions of how unseen objects will move. This layered input reduces the system’s vulnerability to the failure of any single sensor type and, more importantly, mitigates the blindness to occluded areas that has long been the Achilles’ heel of autonomous navigation.
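The article does not describe how HoloRadar’s output is fused with other sensors. As a hedged illustration of the general idea, one textbook technique is the log-odds occupancy grid: each sensor’s estimate that a map cell is occupied is converted to log-odds and the estimates are summed, assuming the sensors’ errors are independent. The function names and probability values below are hypothetical. A sensor that cannot see a cell reports 0.5 (no information), so for a cell hidden from LiDAR and cameras the radar’s evidence alone decides the result.

```python
import math


def logit(p: float) -> float:
    """Convert a probability in (0, 1) to log-odds."""
    if not 0.0 < p < 1.0:
        raise ValueError("probability must be strictly between 0 and 1")
    return math.log(p / (1.0 - p))


def fuse_occupancy(sensor_probs: list[float], prior: float = 0.5) -> float:
    """Fuse independent per-sensor occupancy probabilities for one grid cell.

    Binary Bayes update in log-odds form: start from the prior's log-odds and
    add (logit(p_i) - logit(prior)) for each sensor reading p_i.
    """
    l0 = logit(prior)
    total = l0
    for p in sensor_probs:
        total += logit(p) - l0
    return 1.0 / (1.0 + math.exp(-total))  # convert log-odds back to probability


# Hypothetical readings for two cells, ordered (lidar, camera, radar).
hidden_cell = fuse_occupancy([0.5, 0.5, 0.8])   # occluded: only radar has evidence
visible_cell = fuse_occupancy([0.7, 0.6, 0.8])  # all three sensors lean "occupied"
print(f"hidden cell: {hidden_cell:.2f}, visible cell: {visible_cell:.2f}")
```

Summing log-odds is why agreeing sensors reinforce one another: several moderately confident readings combine into a more confident estimate than any single one. Real systems must also handle correlated errors and conflicting readings, which this sketch ignores.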

Turning a Weakness into a Strength

The ingenuity behind HoloRadar lies in a counterintuitive use of radio waves: a property long considered a limitation for imaging becomes a distinct advantage for NLOS perception. The research team, led by Assistant Professor Mingmin Zhao, recognized a fundamental difference between light and radio signals. Visible light, with its extremely short wavelength, is ideal for high-resolution imaging, but it travels in straight lines, is easily blocked, and scatters diffusely off most everyday surfaces, whose fine-scale roughness is large compared with its wavelength. Radio signals have much longer wavelengths. While that makes it hard to produce photograph-like detail, it also changes how radio waves interact with large, everyday surfaces. The researchers reasoned that, at radio wavelengths, common structures such as building facades, asphalt roads, and concrete walls are electromagnetically smooth. That smoothness makes them act as large mirrors, reflecting radio signals specularly in predictable directions rather than scattering them chaotically.
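The “smooth versus rough” distinction can be made concrete with the Rayleigh roughness criterion, a common rule of thumb: a surface reflects mostly specularly (like a mirror) when its height variations h satisfy h < λ / (8 cos θᵢ), where λ is the wavelength and θᵢ is the angle of incidence measured from the surface normal. The sketch below compares green light (about 550 nm) with a 77 GHz signal. The 77 GHz carrier is an assumption (a common automotive radar band); the article does not state HoloRadar’s operating frequency.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def wavelength(freq_hz: float) -> float:
    """Free-space wavelength (m) for a given frequency (Hz)."""
    return C / freq_hz


def rayleigh_smooth_limit(wavelength_m: float, incidence_deg: float = 0.0) -> float:
    """Largest surface height variation (m) for which the Rayleigh criterion
    still treats a surface as smooth: h < lambda / (8 * cos(theta_i)).
    theta_i is measured from the surface normal (0 deg = head-on)."""
    return wavelength_m / (8.0 * math.cos(math.radians(incidence_deg)))


light_lambda = 550e-9            # green visible light
radar_lambda = wavelength(77e9)  # assumed 77 GHz radar, about 3.9 mm

for name, lam in [("visible light", light_lambda), ("77 GHz radar", radar_lambda)]:
    limit_um = rayleigh_smooth_limit(lam) * 1e6
    print(f"{name}: surface looks smooth if roughness < {limit_um:.2f} µm")

ratio = radar_lambda / light_lambda
print(f"radar tolerates ~{ratio:,.0f}x rougher surfaces than visible light")
```

At normal incidence the limit is well under a tenth of a micrometre for light but roughly half a millimetre for the assumed radar signal, several thousand times larger, and it grows further at the shallow angles typical of a vehicle looking along a street. This is why many surfaces that scatter light in all directions can behave much more like mirrors for radio waves.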

This principle effectively turns the surrounding environment into a network of passive reflectors for the HoloRadar system. Unlike approaches that would require specialized mirrors or repeaters installed throughout a city, the technology uses existing infrastructure as-is. As the autonomous vehicle moves, it emits radio signals that propagate outward and bounce off nearby surfaces. Some of these signals reflect off a wall into an occluded area, strike hidden objects, and return to the vehicle’s receiver along a similar multi-bounce path. In effect, the surrounding architecture does the work of peering around the corner: a wall at an intersection is no longer just an obstacle but a reflective surface that can relay information about oncoming traffic. Because it requires no modifications to the environment, the approach is cost-effective and broadly applicable, allowing any vehicle equipped with HoloRadar to benefit from NLOS perception wherever suitable reflecting surfaces exist, from a structured warehouse to an unpredictable city street.
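Geometrically, a wall that behaves like a mirror lets a textbook image-method argument apply: an echo that bounced once off the wall appears to come from a “ghost” point, the mirror image of the hidden object across the wall, at a distance equal to the total one-way path length. The sketch below recovers the hidden object’s true position by reflecting that ghost point back across the wall. It is a simplified 2D illustration, not HoloRadar’s actual algorithm, and it assumes a single perfectly specular bounce, a radar at the origin, and a wall whose position is already known (for example from LiDAR). The function names and coordinates are hypothetical.

```python
import math

Point = tuple[float, float]


def reflect_across_line(p: Point, a: Point, b: Point) -> Point:
    """Mirror point p across the infinite line through a and b (the wall)."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    # Project p onto the wall line to find the foot of the perpendicular.
    t = ((px - ax) * dx + (py - ay) * dy) / (dx * dx + dy * dy)
    fx, fy = ax + t * dx, ay + t * dy
    # The mirror image lies as far beyond the wall as p lies in front of it.
    return (2.0 * fx - px, 2.0 * fy - py)


def unfold_single_bounce(path_len_m: float, bearing_rad: float,
                         wall_a: Point, wall_b: Point) -> Point:
    """Estimate the true position of a hidden object seen via one wall bounce.

    The radar sits at the origin. The echo arrives from `bearing_rad` with a
    one-way path length `path_len_m` (radar -> wall -> object), so it appears
    to come from a ghost point along that bearing. Reflecting the ghost back
    across the wall gives the object's real position.
    """
    ghost = (path_len_m * math.cos(bearing_rad), path_len_m * math.sin(bearing_rad))
    return reflect_across_line(ghost, wall_a, wall_b)


# Hypothetical intersection: the far facade of a cross street lies along y = 8 m,
# and an object at (-10, 5) is hidden from direct view by a building corner
# (the corner itself is not modeled here).
true_obj = (-10.0, 5.0)
wall = ((-30.0, 8.0), (10.0, 8.0))
ghost = reflect_across_line(true_obj, *wall)   # mirror image at (-10, 11)
path_len = math.hypot(*ghost)                  # one-way path length, about 14.9 m
bearing = math.atan2(ghost[1], ghost[0])       # direction the echo seems to come from
print(unfold_single_bounce(path_len, bearing, *wall))  # approximately (-10.0, 5.0)
```

With several walls or multiple bounces, the same unfolding can be applied repeatedly; deciding which surface produced each echo is part of the harder inverse problem the system must solve.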

The Mechanics of Seeing the Unseen

AI-Powered Environmental Reconstruction

The operational core of HoloRadar combines hardware that captures faint radio echoes with artificial intelligence that deciphers their meaning. The system transmits radio waves and then listens for the returning signals, many of which have traveled complex paths around obstructions and are extremely weak by the time they are received. These raw signals are a jumble of scattered, time-delayed wave information containing no obvious image, which is where the AI algorithms become critical. Trained on large datasets, the AI analyzes the characteristics of the returning waves (their timing, Doppler shift, and intensity) to solve a complex inverse problem: working backward from the scattered data to reconstruct the most probable three-dimensional geometry of the hidden scene that could have produced those specific reflections. The task is analogous to inferring the shape and layout of an unseen room from the echoes of a single clap.
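Two textbook radar relations underlie the timing and Doppler cues mentioned above. A round-trip delay τ gives a one-way path length c·τ/2, and a Doppler shift f_d at carrier frequency f_c gives the rate at which that path is shortening, f_d·c / (2·f_c). For a multi-bounce echo, both quantities describe the bent path rather than the straight-line distance to the object, which is one reason reconstruction is an inverse problem. The sketch below is illustrative only: the 77 GHz carrier and the example numbers are assumptions, not HoloRadar parameters.

```python
C = 299_792_458.0  # speed of light, m/s


def path_length_from_delay(round_trip_s: float) -> float:
    """One-way propagation path length (m) from a round-trip echo delay (s).

    For a multi-bounce echo this is the length of the whole bent path
    (e.g. radar -> wall -> object), not the straight-line distance.
    """
    return C * round_trip_s / 2.0


def closing_speed_from_doppler(doppler_hz: float, carrier_hz: float) -> float:
    """Rate (m/s) at which the one-way path length is shrinking.

    From f_d = 2 * v * f_c / c, so v = f_d * c / (2 * f_c).
    Positive values mean the path is getting shorter (object approaching).
    """
    return doppler_hz * C / (2.0 * carrier_hz)


# Hypothetical echo: 100 ns round trip, +2 kHz Doppler on an assumed 77 GHz carrier.
print(f"path length: {path_length_from_delay(100e-9):.2f} m")
print(f"closing speed: {closing_speed_from_doppler(2_000.0, 77e9):.2f} m/s")
```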

The output of this process is not a photorealistic image but a functional 3D map of the occluded space, highlighting the presence, location, and even the general motion of objects within it. The system can distinguish between the static geometry of a hidden corridor and the dynamic presence of a person walking within it. This is far more powerful than a simple binary alert; it provides the autonomous vehicle with a spatial understanding of the unseen environment. For instance, the system would not only detect a car approaching a blind intersection but could also estimate its trajectory and speed based on the changing radio wave reflections over time. This reconstructed “ghost” image of the hidden scene is then fed into the vehicle’s primary decision-making engine, where it is integrated with line-of-sight data from other sensors. The result is a vastly enriched and more complete perception of the world, enabling the vehicle to make smarter, safer, and more human-like decisions when navigating complex environments where what you cannot see is often more important than what you can.
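Estimating a hidden object’s speed and trajectory from reflections that change over time can be illustrated with a generic constant-velocity least-squares fit to its reconstructed positions. This is a hedged sketch of a standard tracking building block, not HoloRadar’s method; the positions, sampling interval, and function name are hypothetical, and practical trackers typically use filters (such as a Kalman filter) that also model measurement noise and acceleration.

```python
import math
from collections.abc import Sequence


def fit_constant_velocity(times: Sequence[float], xs: Sequence[float],
                          ys: Sequence[float]) -> tuple[float, float, float, float]:
    """Least-squares fit of x(t) = x0 + vx*t and y(t) = y0 + vy*t.

    Returns (x0, y0, vx, vy). Needs at least two distinct timestamps.
    """
    n = len(times)
    if n < 2 or len(xs) != n or len(ys) != n:
        raise ValueError("need at least two observations of equal length")
    t_mean = sum(times) / n
    var_t = sum((t - t_mean) ** 2 for t in times)
    if var_t == 0.0:
        raise ValueError("timestamps must not all be equal")

    def fit_axis(vals: Sequence[float]) -> tuple[float, float]:
        # Slope = cov(t, v) / var(t); the fitted line passes through the means.
        v_mean = sum(vals) / n
        slope = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, vals)) / var_t
        return v_mean - slope * t_mean, slope

    x0, vx = fit_axis(xs)
    y0, vy = fit_axis(ys)
    return x0, y0, vx, vy


# Hypothetical reconstructed positions (m) of a hidden car, one every 0.1 s.
times = [0.0, 0.1, 0.2, 0.3, 0.4]
xs = [-20.0, -19.0, -18.1, -17.0, -16.0]
ys = [12.0, 12.0, 12.1, 11.9, 12.0]

x0, y0, vx, vy = fit_constant_velocity(times, xs, ys)
speed = math.hypot(vx, vy)
t_ahead = times[-1] + 1.0  # predict one second past the last observation
print(f"speed ~ {speed:.1f} m/s; predicted position "
      f"({x0 + vx * t_ahead:.1f}, {y0 + vy * t_ahead:.1f}) m")
```

With these made-up samples the fit gives a speed of about 10 m/s in the +x direction, which a planner could compare against the vehicle’s own path before the hidden car ever becomes visible.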

All-Weather and All-Lighting Capability

One of the most significant practical advantages of HoloRadar’s radio-based approach is its robustness in adverse conditions, where existing optical sensors frequently falter. Standard cameras, which rely on visible light, are severely degraded by darkness, fog, heavy rain, or snow, because these conditions either obscure light or introduce visual noise that confuses image-processing algorithms. Even LiDAR, which supplies its own infrared light, is affected by absorption and scattering from particles such as fog droplets and snowflakes, which reduce its effective range and accuracy. HoloRadar, by contrast, operates at radio frequencies that are far less affected by such conditions. As an active sensor that provides its own illumination, it is indifferent to darkness, and fog, rain, and snow attenuate its signals much less than they attenuate light, allowing the system to maintain its perceptual capabilities around the clock and in nearly any weather. This reliability is not merely a convenience; it is a critical safety requirement for any autonomous system intended for widespread, real-world deployment.

This resilience makes HoloRadar a more practical and dependable route to consistent NLOS perception than earlier experimental methods based on scattered visible light. Those light-based systems required highly sensitive cameras capable of detecting single photons and were extremely susceptible to ambient light, such as stray headlights or street lamps, which could easily overwhelm the faint signal. Because HoloRadar avoids these problems, an autonomous vehicle keeps its ability to see around corners whether it is driving on a clear, sunny day or through dense fog at night. The enhanced safety provided by NLOS perception is therefore not a fair-weather feature but a constant, reliable layer of protection, and the vehicle’s proactive safety systems remain functional precisely when they are needed most: when conditions make driving most hazardous for humans and machines alike.

From Lab to Road

Successful Real-World Demonstrations

The viability of HoloRadar was validated through a series of real-world tests that moved the technology beyond theoretical models and into practical application. For these trials, the research team integrated the complete system onto a mobile robotic platform, effectively creating a miniature autonomous vehicle. The robot was then deployed in a variety of complex indoor environments, such as office buildings with long hallways and sharp, blind corners, which served as proxies for challenging urban intersections. In these experiments, the system navigated while simultaneously mapping its surroundings, including areas outside its direct line of sight. HoloRadar successfully reconstructed the geometry of hidden corridors with a high degree of accuracy. More critically, it consistently detected and mapped human subjects positioned in these occluded areas, giving the robot a clear “image” of a person waiting just around the corner.

These trials served as an essential proof of concept, confirming that the underlying principles, using environmental surfaces to reflect radio waves and AI to interpret the signals, hold up in practice. The ability not only to detect an object but to locate it accurately within a reconstructed 3D map of a hidden space was a significant milestone. It showed that the technology can provide actionable spatial intelligence rather than a simple proximity alert. This level of detail is crucial for an autonomous vehicle’s path-planning and decision-making algorithms, because it lets the system understand the context of a hidden object, for example whether a pedestrian is standing still or moving toward the vehicle’s path. The initial demonstrations provided strong empirical evidence that HoloRadar could fundamentally enhance the perceptual capabilities of autonomous machines, and they established a foundation for the next phase of development: scaling the technology to the greater complexity of the outdoor world.

Bridging the Gap to Commercial Viability

The research team’s initial successes with HoloRadar represent a foundational breakthrough, establishing that processing environmental radio reflections with AI is an effective method for achieving non-line-of-sight perception. While the system has proven its capabilities in controlled indoor settings, the focus of future work has shifted toward adapting and scaling the technology for more dynamic and expansive outdoor scenarios. Outdoor environments pose substantially greater challenges. Longer distances between the vehicle and reflective surfaces weaken the returning radio signals, potentially reducing the clarity and range of the NLOS “vision.” The urban landscape is also a far more complex and chaotic radio environment, filled with moving objects such as other cars, cyclists, and pedestrians, each creating its own reflections that the AI must learn to disentangle and interpret correctly.
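The distance penalty can be quantified with the textbook monostatic radar equation, P_r = P_t·G²·λ²·σ / ((4π)³·R⁴), in which received echo power falls with the fourth power of range R. The sketch below assumes each relay wall acts as an ideal mirror, so spreading loss follows the unfolded path length, and charges a fixed fraction of power per reflection. The reflectivity value and ranges are hypothetical; the article gives no link budget for HoloRadar.

```python
import math


def range_change_db(r_near_m: float, r_far_m: float) -> float:
    """Change in echo power (dB) when range grows from r_near to r_far,
    using only the R**-4 term of the monostatic radar equation."""
    return -40.0 * math.log10(r_far_m / r_near_m)


def reflection_loss_db(reflectivity: float, reflections: int) -> float:
    """Loss (dB) from `reflections` wall bounces that each keep a fraction
    `reflectivity` of the incident power (0 < reflectivity <= 1)."""
    if not 0.0 < reflectivity <= 1.0:
        raise ValueError("reflectivity must be in (0, 1]")
    return reflections * 10.0 * math.log10(reflectivity)


# Hypothetical comparison: a 5 m indoor hallway path vs a 20 m street intersection path.
spreading = range_change_db(5.0, 20.0)
# One wall bounce on the way out and one on the way back = 2 reflections.
bounces = reflection_loss_db(0.5, 2)
print(f"extra spreading loss: {spreading:.1f} dB; wall losses per NLOS echo: {bounces:.1f} dB")
```

Under these assumptions every doubling of the path costs about 12 dB (a factor of 16 in power), so quadrupling it costs about 24 dB that more powerful transmitters, more sensitive receivers, or both must make up; the wall losses apply to every NLOS echo regardless of distance.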

Overcoming these hurdles is the central objective in preparing HoloRadar for integration into commercial driverless cars and other outdoor autonomous systems. Research efforts are now concentrated on developing more powerful transmitters and more sensitive receivers to compensate for signal attenuation over greater distances. Concurrently, the AI algorithms are being refined and trained on more complex datasets captured on real-world city streets to improve their ability to filter out noise and accurately identify multiple moving targets in a cluttered scene. The technology’s transition from the laboratory to the open road will hinge on solving these engineering and computational challenges. If it succeeds, the work could lay the groundwork for a new generation of autonomous vehicles that are significantly safer and more aware of their surroundings because they can, at last, see the unseen.