Today I’m thrilled to sit down with Oscar Vail, a technologist with a deep focus on quantum computing, robotics, and open-source innovation, to talk about robotics and artificial intelligence. We’re exploring his insights on Physical AI, particularly the TactileAloha system, which integrates sight and touch to change how robots interact with the world. Our conversation touches on the challenges of mimicking human sensory capabilities, the practical applications of this technology, and the future of multimodal AI systems in everyday life.

Can you explain what Physical AI is and how it stands apart from other forms of AI that people might already know about?

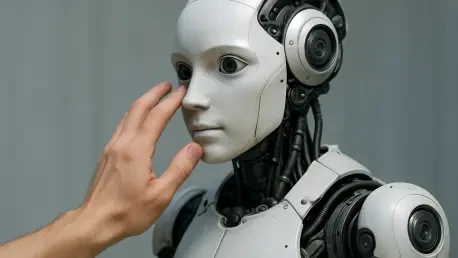

Physical AI is all about enabling machines to interact with the physical world in a way that mirrors human capabilities. Unlike traditional AI, which often focuses on data processing or digital tasks like image recognition or language generation, Physical AI is geared toward real-world manipulation. It combines sensory inputs like sight and touch to let robots handle objects with precision and adaptability. Think of it as giving robots a sense of awareness and dexterity that goes beyond just crunching numbers or following pre-programmed steps.

What sparked the idea to integrate both sight and touch in a system like TactileAloha?

The inspiration came from observing how humans naturally rely on multiple senses to perform even simple tasks, like picking up a coffee cup. We noticed that robots, when limited to just visual data, often struggled with tasks requiring fine motor skills or material differentiation. Combining sight and touch in TactileAloha was a way to bridge that gap, allowing robots to not just see an object but also feel its texture or orientation, making their actions much more intuitive and effective.

Were there specific real-world problems or tasks that made this combination of senses feel essential?

Absolutely. Tasks like handling Velcro or zip ties were perfect examples. These objects have subtle differences in texture or alignment that are hard to detect through vision alone. A robot might see the Velcro but not know which side is the hook or loop without feeling it. In real-world scenarios, like manufacturing or even household chores, these small details matter, and relying on just one sense often led to errors or inefficiencies.

How does TactileAloha actually work when tackling a complex task like fastening Velcro?

TactileAloha works in stages. It starts with visual input from cameras to locate and grip the Velcro ends. Then tactile sensors come into play, feeling the texture to determine which side is facing out, the hook or the loop. Based on that feedback, the system adjusts the robot’s posture and angle to align the pieces correctly. Finally, it presses them together to ensure a secure connection, using touch to confirm the firmness. It’s a dynamic interplay of seeing and feeling that adapts to the situation in real time.
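To make those stages concrete, here is a minimal Python sketch of the touch-driven part of the sequence, picking up after vision has located and gripped both ends. It is not the TactileAloha implementation: the function names, the roughness score, the assumption that the hook side feels rougher than the loop side, and every threshold are hypothetical stand-ins chosen for illustration.

```python
from enum import Enum


class Side(Enum):
    HOOK = "hook"
    LOOP = "loop"


def classify_side(roughness: float, threshold: float = 0.5) -> Side:
    """Assumed rule: a roughness score at or above the threshold means hook."""
    return Side.HOOK if roughness >= threshold else Side.LOOP


def wrist_rotation_deg(felt: Side, wanted: Side) -> float:
    """Flip the gripper by 180 degrees when the wrong side faces its mate."""
    return 0.0 if felt is wanted else 180.0


def fasten(left_roughness: float, right_roughness: float,
           press_samples: list[float], firm: float = 0.8) -> dict:
    """Feel both strips, plan the alignment, then check the press by touch."""
    left = classify_side(left_roughness)
    right = classify_side(right_roughness)
    # The strips mate only when a hook side meets a loop side, so the
    # right gripper should present the opposite of what the left one feels.
    wanted_right = Side.LOOP if left is Side.HOOK else Side.HOOK
    rotation = wrist_rotation_deg(right, wanted_right)
    # The connection counts as secure once any (simulated) contact
    # pressure sample reaches the firmness threshold.
    secured = any(p >= firm for p in press_samples)
    return {"left": left, "right": right,
            "rotation_deg": rotation, "secured": secured}


# Hypothetical readings: both grippers feel a rough (hook) surface.
result = fasten(0.9, 0.8, [0.2, 0.5, 0.85])
print(result["rotation_deg"], result["secured"])
```

In this toy run both strips feel like hook sides, so the sketch plans a 180 degree flip of the right gripper before pressing. A real system would more plausibly learn these decisions from sensor data than apply fixed thresholds.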

Why do robots find it so challenging to rely solely on visual data, and how does adding touch change the game?

Vision alone can mislead a robot because an image carries little information about physical properties like texture or resistance. A camera might capture an image of Velcro, but it can’t tell which side is which or how much pressure to apply. Touch adds a layer of feedback that lets the robot make real-time adjustments, much like how we can feel when something is slipping from our grasp. It fills in the gaps that vision can’t cover, making the robot’s actions more precise and reliable.
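The slipping-grasp analogy can be illustrated with a very small feedback loop. This is only a sketch under assumed conditions: the slip signal, the gain, the force cap, and the units are all hypothetical, and nothing here is taken from TactileAloha itself.

```python
def adjust_grip(force: float, slip: float,
                gain: float = 2.0, max_force: float = 10.0) -> float:
    """Raise grip force in proportion to measured slip, capped at max_force."""
    return min(max_force, force + gain * max(0.0, slip))


force = 1.0
history = []
for slip in [0.0, 0.5, 0.2, 0.0]:  # simulated slip readings, one per control tick
    force = adjust_grip(force, slip)
    history.append(force)

print(history)
```

The grip tightens only on ticks where slip is detected and holds steady otherwise, which is the kind of correction a camera alone cannot trigger.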

Can you share an example of a task where touch proves more valuable than sight for a robot?

Sure, consider something like buttoning a shirt. Visually, a robot might locate the button and hole, but without touch, it can’t sense if the button is properly aligned or if it’s caught on fabric. Tactile feedback lets the robot feel the resistance or smoothness as it pushes the button through, ensuring the task is completed correctly. It’s those subtle cues that sight alone often misses.

You built TactileAloha on the foundation of Stanford’s ALOHA system. Can you tell us what that original system was designed for and how you’ve enhanced it?

The ALOHA system was a fantastic open-source platform for bimanual teleoperation, meaning it allowed remote control of dual-arm robots for various tasks at a low cost. It was versatile and accessible, but it relied heavily on visual input. With TactileAloha, we enhanced it by integrating tactile sensing, enabling the robot to make decisions based on texture and physical feedback, not just what it sees. This addition made the system far more adaptive, especially for intricate tasks requiring both hands to coordinate.

How did working with an open-source platform influence the development of TactileAloha?

Open-source platforms like ALOHA are game-changers because they provide a solid starting point and foster collaboration. We didn’t have to build everything from scratch, which saved time and resources. Plus, being part of a community meant we could tap into shared knowledge and iterate quickly based on feedback. It shaped our research by keeping us focused on the new piece, adding tactile capabilities, rather than reinventing the wheel.

What kinds of everyday tasks or scenarios do you envision TactileAloha being used for?

I see TactileAloha being incredibly useful in tasks that require precision and adaptability. In everyday life, it could assist with household chores like folding laundry, cooking, or assembling furniture—tasks where feeling the material is just as important as seeing it. Beyond the home, industries like healthcare could benefit from robots aiding in delicate procedures, or manufacturing could use them for assembling small, complex components. The potential is vast.

Looking ahead, what’s your forecast for the future of multimodal Physical AI systems like TactileAloha?

I’m really optimistic about where this is headed. In the next decade, I think we’ll see multimodal Physical AI become more integrated into our lives, with systems that not only combine sight and touch but also incorporate hearing and other senses for even richer interactions. As the technology gets more affordable and accessible, we could have robots seamlessly assisting in homes, hospitals, and factories. The challenge will be ensuring these systems are safe and intuitive, but I believe we’re on the cusp of a major shift where robots truly become partners in our daily routines.