A landmark development in artificial intelligence has been achieved, moving beyond the confines of theoretical calculations and classical simulations to demonstrate the generative power of Quantum Language Models (QLMs) on real, operational quantum hardware. This achievement signifies a critical transition for quantum AI, establishing a tangible engineering foundation for the burgeoning field of quantum natural language processing (QNLP). For years, the promise of quantum computing in AI has been a distant vision, but this validation on actual quantum processors represents a significant leap forward. It signals the beginning of a new era where AI systems could possess capabilities far exceeding those of today’s most advanced classical models, potentially redefining the landscape of computation and intelligence. The successful execution of these complex models on noisy, early-stage quantum devices is not just a proof of concept; it is the first concrete step toward unlocking unprecedented power and efficiency, setting the stage for the next paradigm shift in how machines understand and generate human language. This move from abstract theory to applied reality has captured the attention of the global tech community, heralding both immense opportunities and formidable new challenges on the path to true quantum advantage.

The Technical Milestone: From Theory to Tangible Reality

Demonstrating a New Class of AI on NISQ Devices

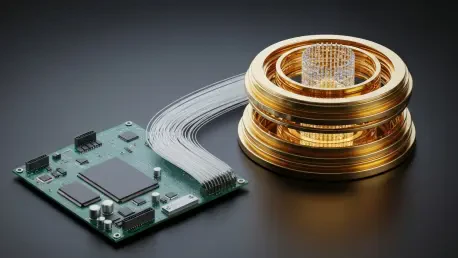

The core of this advancement lies in the successful end-to-end training and operation of complex sequence models, such as Quantum Recurrent Neural Networks (QRNNs) and Quantum Convolutional Neural Networks (QCNNs), directly on today’s Noisy Intermediate-Scale Quantum (NISQ) devices. Researchers have shown that these quantum models can learn sequential patterns from data and perform generative tasks, a crucial proof of concept for the practical application of quantum natural language processing. These demonstrations were not confined to idealized simulations but were conducted on leading-edge quantum hardware, including several of IBM Quantum’s processors, such as ibm_kingston and the Heron r2, as well as on Quantinuum’s trapped-ion quantum computer. By showing that these models can function despite the inherent noise and limitations of current quantum systems, these experiments have established a baseline for future development. This validation is strong evidence that quantum circuits can be architected and trained to handle the sequential nature of language, moving QNLP from a theoretical discipline into an active, experimental field of engineering.

The Rise of Hybrid Quantum-Classical Architectures

This new era of practical quantum AI is being defined by the development of sophisticated hybrid quantum-classical language models, which strategically combine the strengths of both computational paradigms. Notable examples include innovative quantum transformer models, such as Quantinuum’s “Quixer,” which has been specifically tailored for quantum architectures and has demonstrated competitive results on realistic language modeling tasks while optimizing for qubit efficiency. Another significant development is Chronos-1.5B, a large language model whose quantum component was meticulously trained on IBM’s Heron r2 processor to perform specific NLP tasks like sentiment analysis with enhanced accuracy. Further research has shown that quantum-enhanced attention mechanisms, a core component of modern language models, can significantly reduce the computational complexity inherent in processing long sequences of text, enabling more nuanced and contextually aware machine comprehension. Furthermore, emerging quantum diffusion models are ingeniously exploiting the intrinsic noise of real IBM quantum hardware—a factor typically seen as a hindrance—to perform generative tasks like image generation, paving the way for large-scale quantum generative AI that turns a system’s weakness into a functional asset.
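To make the hybrid division of labor concrete, here is a minimal sketch of a quantum-classical training loop. It is not the method used by Quixer, Chronos-1.5B, or any other model named above. It assumes a single-qubit circuit `RY(theta)|0>` simulated in plain Python, and the names `expectation_z`, `parameter_shift_gradient`, and `train` are hypothetical. On real hardware, `expectation_z` would be replaced by a job submitted to a quantum processor, while the parameter update would stay on the classical side.

```python
import math


def expectation_z(theta):
    """Simulate RY(theta)|0> and return <Z>; stands in for a hardware call."""
    amp0 = math.cos(theta / 2.0)  # amplitude of |0>
    amp1 = math.sin(theta / 2.0)  # amplitude of |1>
    return amp0 ** 2 - amp1 ** 2  # P(0) - P(1)


def parameter_shift_gradient(theta):
    """Gradient of <Z> from two extra circuit evaluations (parameter-shift rule)."""
    shift = math.pi / 2.0
    return 0.5 * (expectation_z(theta + shift) - expectation_z(theta - shift))


def train(target, theta=0.1, learning_rate=0.2, steps=100):
    """Classical gradient descent on the squared error (<Z> - target)^2."""
    for _ in range(steps):
        error = expectation_z(theta) - target
        grad_loss = 2.0 * error * parameter_shift_gradient(theta)
        theta -= learning_rate * grad_loss  # classical update step
    return theta


trained_theta = train(target=-0.5)
final_expectation = expectation_z(trained_theta)
print(round(final_expectation, 4))
```

The parameter-shift rule is used here because it only needs expectation values, which is the quantity a quantum device can actually return. A simulator could differentiate the circuit directly, but that shortcut is not available on hardware.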

Leveraging Unique Quantum Phenomena for Language

This quantum approach differs fundamentally from classical AI, which operates on the principles of classical probability distributions and vast matrix multiplications. In contrast, QLMs on hardware leverage quantum phenomena such as superposition, entanglement, and quantum interference to process information. Superposition allows a qubit to exist in a weighted combination of 0 and 1, so a register of n qubits is described by 2^n amplitudes, and interference between those amplitudes is what lets a circuit favor some outcomes over others. Entanglement creates correlations between qubits that cannot be described qubit by qubit, which can be used to model the complex, long-range dependencies found in human language that classical models often struggle to capture. These properties allow for potentially more expressive and compact representations of intricate linguistic structures, drawing on state spaces whose dimension grows exponentially with the number of qubits. While current implementations often use a surprisingly small number of qubits, with some studies showing competitive performance with as few as four, the appeal lies in this exponential scaling of quantum information. The immediate practicality on NISQ hardware necessitates a focus on hybrid designs, where computationally intensive or specific parts of a task are strategically offloaded to quantum processors to seek a “quantum advantage,” while robust classical systems handle the rest of the workflow.
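The following sketch illustrates superposition, entanglement, and the exponential growth of the state space with a small statevector simulation in plain Python. It is an illustrative toy, not code from any of the studies mentioned. The helper names are hypothetical, and qubit 0 is assumed to be the least significant bit of the basis-state index.

```python
import math


def apply_single_qubit_gate(state, gate, target):
    """Apply a 2x2 gate to the `target` qubit of a statevector (list of amplitudes)."""
    new_state = [0j] * len(state)
    mask = 1 << target
    for index, amplitude in enumerate(state):
        bit = (index >> target) & 1
        for new_bit in (0, 1):
            new_index = (index & ~mask) | (new_bit << target)
            new_state[new_index] += gate[new_bit][bit] * amplitude
    return new_state


def apply_cnot(state, control, target):
    """Flip the `target` qubit on every basis state where `control` is 1."""
    new_state = [0j] * len(state)
    for index, amplitude in enumerate(state):
        if (index >> control) & 1:
            new_state[index ^ (1 << target)] += amplitude
        else:
            new_state[index] += amplitude
    return new_state


def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]


s = 1.0 / math.sqrt(2.0)
HADAMARD = [[s, s], [s, -s]]

# Start in |00>, put qubit 0 into superposition, then entangle it with qubit 1.
bell = [1 + 0j, 0j, 0j, 0j]
bell = apply_single_qubit_gate(bell, HADAMARD, target=0)
bell = apply_cnot(bell, control=0, target=1)
bell_probs = probabilities(bell)

# A four-qubit register, the size cited above, already needs 2**4 amplitudes.
four_qubit_amplitudes = 2 ** 4
print(bell_probs, four_qubit_amplitudes)
```

Only the outcomes 00 and 11 carry probability in the resulting state, so measuring one qubit fixes the other. That is the kind of correlation the paragraph above refers to.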

Industry Reaction and the Pragmatic Path Forward

A Compelling Blend of Excitement and Caution

The initial reaction from the global AI research community and industry experts has been a compelling blend of palpable excitement and cautious optimism. There is widespread enthusiasm for the successful transition of quantum algorithms from theoretical equations and simulations to operational performance on actual quantum hardware, with natural language processing emerging as a primary and highly promising application area. This achievement is seen as a critical validation that the long-term vision of quantum AI is not merely speculative but is beginning to yield concrete, measurable results. The ability to run generative models on real machines, however noisy and limited, is a watershed moment that invigorates researchers and investors alike. It suggests that the immense theoretical potential of quantum mechanics to revolutionize computation is finally starting to manifest in one of the most challenging and impactful domains of artificial intelligence. This progress provides a powerful incentive for increased investment in both quantum hardware and the software ecosystem required to support it.

Acknowledging the Limitations of the NISQ Era

This excitement, however, is tempered by a pragmatic recognition of the significant limitations of current NISQ-era devices. Experts widely acknowledge that today’s quantum computers suffer from high error rates, short qubit coherence times, limited total qubit counts, and restricted connectivity between qubits. These hardware constraints mean that while recent demonstrations show real potential, achieving “generative performance” that is comparable to, let alone superior to, large-scale classical LLMs like GPT-4 for complex, open-ended tasks remains a distant goal. The noise inherent in current systems can easily corrupt delicate quantum computations, and the small number of available high-quality qubits restricts the complexity of the problems that can be addressed. Consequently, there is a strong consensus that the hybrid quantum-classical model represents the most promising and practical frontier for the near to medium term. This approach is viewed as an essential bridge, allowing the field to harness the nascent power of quantum processors for specific, well-defined tasks while relying on mature classical infrastructure for the bulk of the computational workload, thereby paving a viable path toward true quantum advantage as the underlying hardware continues to mature.
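A rough back-of-the-envelope sketch shows why noise limits circuit depth. It assumes a deliberately simplified model: a single-qubit depolarizing channel, ρ → (1 − p)ρ + p·I/2, applied after every circuit layer, which scales any Pauli expectation value by (1 − p) per layer. The function name and the 1% error probability are illustrative assumptions, not measurements from any device mentioned in this article.

```python
def attenuated_expectation(ideal_value, error_probability, depth):
    """Expectation value after `depth` layers of depolarizing noise.

    Assumes each layer multiplies the signal by (1 - error_probability).
    """
    return ideal_value * (1.0 - error_probability) ** depth


# Illustrative numbers only: a 1% depolarizing probability per layer.
shallow = attenuated_expectation(1.0, 0.01, depth=10)
deep = attenuated_expectation(1.0, 0.01, depth=100)
print(f"{shallow:.3f} {deep:.3f}")
```

Under this assumption a 10-layer circuit keeps roughly 90% of its signal, while a 100-layer circuit keeps under 40%. This is one reason near-term designs favor shallow quantum components inside a classical workflow.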

Reshaping the AI Industry: Corporate and Competitive Implications

A Transformative Shift in the Competitive Landscape

The advent of functional QLMs is poised to instigate a transformative shift across the artificial intelligence industry, redrawing competitive landscapes and creating unprecedented strategic opportunities. This breakthrough is expected to fundamentally alter the operational and developmental paradigms for AI companies by accelerating research and development, enhancing model performance in specific domains, and potentially reducing the immense energy consumption and associated costs of training complex classical models. This technological shift will create distinct opportunities for various players in the ecosystem. Quantum hardware providers—including established giants like IBM, Google, and Microsoft, and specialized firms like IonQ, Quantinuum, Rigetti Computing, and D-Wave—are positioned to benefit directly from the increased demand for their machines and cloud-based quantum computing services. Concurrently, quantum software and algorithm developers such as Multiverse Computing, SandboxAQ, and SECQAI will thrive by creating the specialized platforms, tools, and algorithms required to build and deploy these novel QLMs. Hybrid solution providers like QpiAI are seen as particularly crucial in the near term, as they will supply the essential technologies that bridge classical and quantum systems, making this new power accessible to a broader market.

Strategic Imperatives for AI Leaders and Startups

This new technological frontier creates distinct strategic imperatives for both established AI leaders and agile startups. Traditional AI powerhouses like Google, Microsoft, Amazon, and IBM, which have already made substantial investments in both AI and quantum computing, are in a prime position to rapidly adopt, scale, and integrate QLMs into their extensive portfolios of cloud services, search engines, and enterprise solutions. Their vast resources and existing infrastructure give them a significant advantage in pioneering this integration. Meanwhile, AI-native startups like OpenAI and Anthropic will face immense pressure to adapt quickly. They must either invest heavily in their own quantum R&D programs, a costly and long-term endeavor, or more likely, form strategic partnerships with quantum specialists and aggressively acquire talent to avoid being technologically outpaced. For vertical AI specialists in sectors like healthcare, finance, and materials science, specialized QLMs offer immense potential for solving domain-specific challenges in molecular modeling, financial fraud detection, and complex risk assessment that are intractable for classical computers. The competitive landscape will inevitably transform, with companies that successfully deploy generative QLMs gaining a substantial first-mover advantage. This emerging “quantum advantage” could also widen the technological gap between nations, elevating quantum R&D from a commercial pursuit to a matter of strategic national importance.

A New Paradigm: The Broader Significance for Artificial Intelligence

Redefining the Future of AI Development

This milestone solidifies the move toward hybrid quantum-classical computing as a major, defining trend in the AI landscape. It represents a fundamental rethinking of how machines process and interpret human knowledge, offering a potential path to overcome the escalating computational demands, high costs, and significant environmental impact associated with training massive classical LLMs. Many experts consider this development a “game-changer,” potentially driving the “next paradigm shift” in AI, with a significance comparable to the “ChatGPT moment” that redefined public and commercial perceptions of AI capabilities. The successful performance of QLMs promises a range of transformative impacts. It could lead to dramatically accelerated training times for LLMs, potentially reducing cycles that currently take weeks down to mere hours, while also making the entire process more energy-efficient and sustainable. This is not merely an incremental improvement but a foundational change in the economics and feasibility of developing next-generation AI.

Navigating the Inherent Challenges and Risks

Enhanced Natural Language Processing (NLP) is a key expectation, with QLMs poised to excel in tasks like sentiment analysis, language translation, and deep context-aware understanding by uncovering subtle, nonlocal linguistic patterns that are exceptionally difficult for classical models to capture. Furthermore, this development could inspire entirely novel AI architectures that require significantly fewer parameters to solve complex problems, contributing to a future of more sustainable and efficient AI. The architectural evolution inherent in quantum AI is considered by some to be as significant as the adoption of GPUs that fueled the deep learning revolution, promising orders-of-magnitude improvements in performance. Despite this promise, the rise of QLMs is accompanied by significant challenges and concerns. Quantum computers remain in their nascent stages, and designing algorithms that fully leverage their capabilities for complex NLP tasks is an ongoing area of intensive research. The high cost and limited accessibility of quantum systems will likely restrict widespread adoption in the short term. Furthermore, as AI becomes more powerful, ethical concerns regarding employment displacement, data privacy, and machine autonomy will require careful consideration and proactive governance. The broader development of fault-tolerant quantum computers also poses a “quantum threat” to current encryption standards, necessitating an urgent global transition to quantum-resilient cybersecurity protocols to protect digital infrastructure.

The Road Ahead: Future Trajectory and Long-Term Vision

Projections for a Quantum-Powered Future

The integration of quantum computing with language models has charted a course toward both pragmatic near-term advancements and transformative long-term capabilities. In the coming one to five years, progress will largely be driven by hybrid quantum-classical approaches on NISQ devices. The focus will remain on performing small-scale NLP tasks, such as topic classification and sentiment analysis, and exploring quantum-enhanced training methods to optimize specific layers or parameters within larger classical models. The long-term vision, extending beyond five years, hinges on the development of more robust, fault-tolerant quantum computers (FTQC). The eventual arrival of FTQC is expected to overcome current hardware limitations, enabling fully quantum algorithms that may offer substantial, in some cases exponential, speedups for core LLM operations involving large-scale linear algebra, optimization, and sampling. Future generations of QLMs are expected to evolve beyond hybrid models into fully quantum architectures capable of processing information in high-dimensional quantum spaces. This could lead to a far deeper comprehension of language and improved semantic representation, all while being significantly more sustainable. Experts have offered tentative timelines, with early glimpses of quantum advantage anticipated in the coming year, significant progress in QNLP expected within five to ten years, and the reality of truly fault-tolerant quantum computers projected for 2030, with widespread industry adoption following throughout the 2030s.

Charting the Course of a Revolution

The breakthrough of quantum language models achieving generative performance on real hardware marked the dawn of a quantum AI revolution, heralding an era of more powerful, efficient, and interpretable artificial intelligence systems. This achievement set in motion a series of critical next steps for the industry. Key areas that demanded immediate and sustained focus included continued advancements in quantum hardware, particularly improvements in qubit stability, coherence, and count. The refinement of hybrid architectures and the development of robust, scalable quantum machine learning algorithms became essential research priorities. Furthermore, rigorous performance benchmarking against state-of-the-art classical models was established as a critical practice to validate the real-world utility of QLMs beyond academic proofs of concept. Early commercial applications, such as private betas from pioneering companies like SECQAI, signaled the initial stages of market readiness and provided valuable feedback for iterating on the technology. This foundational work established a clear trajectory, transforming a theoretical possibility into an engineered reality and initiating the long and complex journey toward fully realizing the profound potential of quantum artificial intelligence.