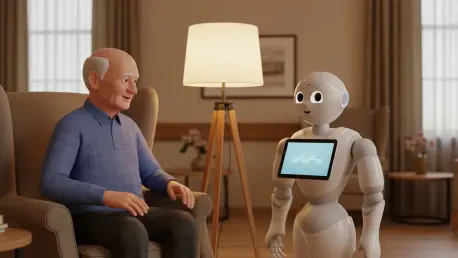

The next frontier in artificial intelligence is not about processing power or task efficiency, but about the nuanced, intricate art of listening, a shift that promises to transform robots from sterile tools into supportive, empathetic companions. Pioneering this evolution is Sooyeon Jeong, an assistant professor at Purdue University, whose research lab is dedicated to moving beyond the functional limitations of today’s AI. Her work aims to infuse robotic systems with the social and emotional intelligence necessary to forge genuine connections with humans, drawing inspiration from the beloved androids of fiction to create real-world counterparts. The ultimate objective is to develop nonfictional robots capable of providing tangible, positive impacts on human well-being across diverse settings, from healthcare to education, by making their interactions feel natural and supportive rather than purely transactional.

A New Philosophy for Human-Robot Interaction

Designing for Meaningful Connection

The foundational principle guiding this advanced research is a human-centric design philosophy that shifts the focus from a robot’s technical capabilities to the quality of the human-robot interaction itself. This approach involves engineering sophisticated software that can accurately perceive and appropriately respond to the complex tapestry of human social and emotional cues. The work is explicitly application-driven, having already contributed to projects aiding a wide range of populations, including cancer patients, hospitalized children, and geriatric adults. A significant consensus emerging from these efforts is that for robots to succeed in supportive roles within healthcare or therapy, they must first establish a genuine rapport. This requires a level of emotional intelligence far exceeding the command-response protocols of current consumer-grade AI, making the development of these nuanced abilities a top priority for creating truly collaborative partnerships between humans and machines.

This research highlights a crucial distinction between functional AI and socially intelligent AI, a gap that current commercial systems are ill-equipped to bridge. Mainstream voice assistants operate on a discrete, turn-based model that is effective for executing simple commands but fails to replicate the fluid, dynamic nature of human conversation and support. The goal is to evolve beyond this rigid structure toward a more collaborative partnership in which the robot can understand context, anticipate needs, and adapt its behavior in real time. This requires a paradigm shift from programming for task completion to designing for long-term, meaningful relationships. Achieving this level of sophistication means creating AI that can build trust and make users feel genuinely comfortable, a prerequisite for any effective application in sensitive fields where emotional connection is paramount to success. This evolution is not just an upgrade; it represents a fundamental re-imagining of what a robot can and should be.

Case Study: The Robot Study Buddy

A compelling demonstration of this philosophy is the “robot study buddy” project, conceived to address the persistent challenges students face with productivity and maintaining focus. While human study groups offer valuable peer accountability, they can be difficult to coordinate or may trigger social anxiety for some individuals. Meanwhile, existing technological solutions, such as productivity applications that block distracting websites, are primarily prohibitory and lack the supportive, encouraging element that a real peer can provide. To address this gap, Jeong’s research team developed a unique robotic companion designed to serve as both a motivator and a partner in the learning process. The robot was created to fill a specific niche by offering the benefits of accountability without the social pressures of a human group, providing a novel tool for academic support that is both accessible and encouraging.

The experiment was meticulously designed to explore several different interaction strategies to determine the most effective methods for motivation and support. In one condition, the robot functioned as a silent partner, simply working on its own task alongside the human participant to foster a sense of parallel productivity and shared effort. A second strategy positioned the robot as a taskmaster, periodically issuing reminders of the student’s self-stated goals, such as, “Remember! You said you wanted to complete the exam study guide by 2 p.m.” The third and most socially complex iteration involved the robot providing active emotional support, acting much like a personal trainer or a coach. It would offer encouraging phrases like, “You’re doing a great job! We can do this together!” and suggest beneficial actions such as taking short breaks to stretch and refocus, thereby testing a more interactive and empathetic approach.
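The three conditions can be pictured as three policies for deciding what the robot says at a given moment. The following is only an illustrative sketch, not the research team's implementation: the names `Strategy`, `StudyGoal`, and `robot_utterance`, the 20-minute reminder interval, and the rule for when a break is suggested are all assumptions introduced here for clarity. The spoken phrases are the ones quoted above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Strategy(Enum):
    """The three interaction conditions described in the study (names are hypothetical)."""
    SILENT_PARTNER = "silent_partner"
    TASKMASTER = "taskmaster"
    COACH = "coach"


@dataclass
class StudyGoal:
    description: str  # e.g. "complete the exam study guide"
    deadline: str     # e.g. "2 p.m."


def robot_utterance(strategy: Strategy, goal: StudyGoal, minutes_elapsed: int,
                    interval_minutes: int = 20) -> Optional[str]:
    """Return what the robot says at this minute, or None if it stays quiet.

    The check-in interval is an assumed parameter, not a value from the study.
    """
    # The silent partner never speaks; it only works alongside the student.
    if strategy is Strategy.SILENT_PARTNER:
        return None
    # The other two conditions speak only at periodic check-ins.
    if minutes_elapsed == 0 or minutes_elapsed % interval_minutes != 0:
        return None
    if strategy is Strategy.TASKMASTER:
        return f"Remember! You said you wanted to {goal.description} by {goal.deadline}"
    # Coach: encouragement at every check-in, plus a break suggestion at every second one.
    message = "You're doing a great job! We can do this together!"
    if minutes_elapsed % (2 * interval_minutes) == 0:
        message += " How about a short break to stretch and refocus?"
    return message
```

For example, `robot_utterance(Strategy.TASKMASTER, StudyGoal("complete the exam study guide", "2 p.m."), 20)` produces the reminder quoted above, while the same call with `Strategy.SILENT_PARTNER` returns `None`.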

The Science of Empathetic Listening

Overcoming the Machine Barrier

A central challenge in Jeong’s research is the creation of robots that can function as effective “active listeners,” a task that requires overcoming the inherent social barrier that often makes people reluctant to confide in a machine. The objective is to design a robot that feels less like a household appliance and more like a trusted confidant, akin to an empathetic friend or even a beloved pet. Achieving this requires a significant departure from the discrete, turn-based interaction models that characterize commercial voice assistants. Instead of simply processing and responding to direct queries, the robot must learn to engage in a fluid, continuous conversational flow that feels natural and collaborative. This shift is fundamental to building the trust necessary for a user to feel comfortable sharing personal thoughts and feelings, which in turn allows the AI to provide more meaningful and personalized support.

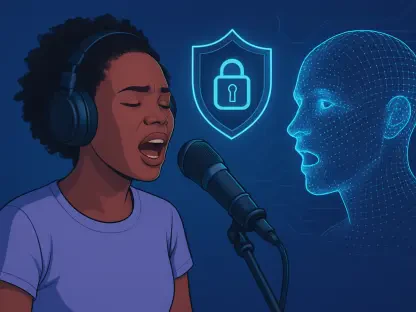

This advanced form of interaction hinges on the robot’s ability not only to process linguistic data but also to interpret the subtle, non-verbal signals that constitute a large part of human communication. For a person to feel truly heard, the listener must demonstrate engagement and understanding through more than just words. This is where the limitations of current AI systems become most apparent: their inability to perceive and react to cues like tone of voice, facial expressions, and body language prevents them from establishing a deeper connection. The research, therefore, focuses on developing multi-modal systems that can synthesize information from various sources (audio, visual, and textual) to form a more holistic understanding of the user’s emotional and cognitive state. This capability is the cornerstone of creating robots that can participate in conversations in a way that is not just reactive but genuinely interactive and empathetic.

The Power of Backchannels

A crucial component for enabling robots to become active listeners is the concept of “backchannels,” which are the subtle, often non-verbal, cues that listeners provide during a conversation to signal engagement and understanding. These include actions like nodding, maintaining eye contact, and uttering affirmations such as “right,” “uh-huh,” or “I see.” In human dialogue, these signals are fundamental to building trust and rapport, as they assure the speaker that they are being heard and encourage them to continue sharing. Without effective backchannels, a conversation can feel interrogative or one-sided, undermining the potential for a supportive connection. Consequently, programming a robot to generate appropriate backchannels is a staggeringly complex but essential technical challenge for developing socially adept AI.

To master this skill, the AI must do more than just process the literal meaning of words; it must analyze the emotional and contextual undercurrents of the conversation in real time. To achieve this, Jeong and her team are leveraging large language models and training them on vast datasets of recorded human conversations. By analyzing subtle shifts in rhythm, pitch, tone, and word choice, the AI learns to identify a speaker’s emotional state and to generate backchannels that are both timed correctly and emotionally congruent with the dialogue. The research has consistently shown that the more a robot exhibits these empathetic listening behaviors, the more comfortable a person feels, leading to greater self-disclosure. This increased openness allows the robot to gather the information needed to provide more effective, personalized support, a capability that is non-negotiable for success in sensitive fields like therapy and healthcare.
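To make the two requirements concrete (correct timing and emotional congruence), here is a deliberately simple, rule-based sketch. It is not the team's method, which the article describes as relying on large language models trained on recorded conversations; it swaps in a hand-written heuristic purely for illustration. The `SpeechFrame` fields, the thresholds, and the function name `plan_backchannels` are all assumptions, and the voice-activity and sentiment values are presumed to come from some upstream model.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class SpeechFrame:
    """One analysis window of the speaker's audio (all fields are assumed inputs)."""
    time_s: float     # timestamp of the window, in seconds
    is_speech: bool   # whether the speaker is talking in this window
    sentiment: float  # -1.0 (negative) to 1.0 (positive), from an upstream model


def plan_backchannels(frames: List[SpeechFrame],
                      min_pause_s: float = 0.5,
                      min_gap_s: float = 3.0) -> List[Tuple[float, str]]:
    """Return (time, cue) pairs: at most one cue per pause, spaced at least min_gap_s apart."""
    events: List[Tuple[float, str]] = []
    pause_start = None            # time the current pause began
    last_speech = None            # most recent frame that contained speech
    last_event_t = float("-inf")  # time of the previous backchannel
    responded = False             # has the current pause already received a cue?

    for frame in frames:
        if frame.is_speech:
            # Speaker is talking: remember the frame and reset pause tracking.
            last_speech = frame
            pause_start = None
            responded = False
            continue
        if last_speech is None:
            continue  # silence before anyone has spoken; nothing to acknowledge
        if pause_start is None:
            pause_start = frame.time_s

        long_enough = frame.time_s - pause_start >= min_pause_s   # timing (rhythm)
        spaced_out = frame.time_s - last_event_t >= min_gap_s     # avoid over-responding
        if long_enough and spaced_out and not responded:
            # Congruence: a softer acknowledgement after negative-toned speech.
            cue = "I see" if last_speech.sentiment < 0 else "uh-huh"
            events.append((frame.time_s, cue))
            last_event_t = frame.time_s
            responded = True
    return events
```

Even this toy version shows why the problem is hard: the thresholds that feel natural vary by speaker and context, which is one reason a learned model is a more plausible fit than fixed rules.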

A Future Forged in Empathy

Taken together, this research paints a clear picture of the next evolutionary step for robotics. For robots to transition from mere functional tools to integrated social companions, the development of sophisticated social and emotional intelligence is not just beneficial but essential. The work on projects like the robot study buddy suggests that effective support has to be deeply personalized and context-aware, adapting in real time to a user’s unique personality and fluctuating needs. Furthermore, the deep dive into active listening and backchannels provides a technical foundation for creating machines that can build genuine rapport, moving beyond simple data processing to achieve a form of artificial empathy. The overarching trend is a definitive shift away from programming for task completion and toward designing for meaningful, positive, and long-term human-robot relationships in the real world.