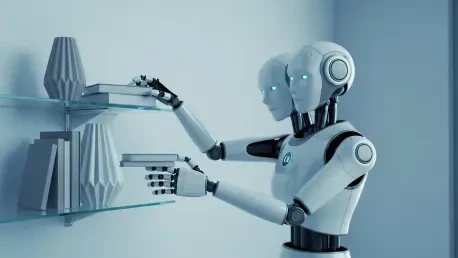

Everyday two-handed tasks, from folding laundry to preparing a meal, have long been a formidable barrier for robotic assistants, but a new methodology teaches machines this coordination through observation. Researchers at the Universidad Carlos III de Madrid (UC3M) have developed an approach centered on the ADAM (Autonomous Domestic Ambidextrous Manipulator) robot, which is designed to learn and execute complex two-armed tasks by watching human demonstrations. The work aims to make service robots for domestic environments more intuitive and easier to teach. The ultimate vision is to deploy these robots to assist people, particularly the elderly, with daily chores such as setting the table, tidying living spaces, and fetching requested items like medication or a glass of water, helping them keep their autonomy and quality of life in their own homes.

A New Paradigm for Dual-Arm Coordination

One of the most persistent challenges in robotics is programming two robotic arms to work together in a fluid, safe, and coordinated manner. Traditionally, this has meant treating the two arms as a single, centrally controlled unit, which is computationally expensive and notoriously difficult to program. The research, featured at the 2025 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), introduces a two-stage process that sidesteps this complexity. In the first stage, each of the robot’s arms is taught to perform its part of a task independently. This phase uses “imitation learning,” in which the robot acquires skills by observing and replicating human actions. A human operator can physically guide the robot’s arm through the desired motions or simply perform the action for the robot to record, which makes the teaching process intuitive and accessible.

The core innovation lies in the second stage, in which the two independently trained arms communicate and coordinate with each other. This is achieved with Gaussian Belief Propagation, a probabilistic message-passing technique that functions as a continuous dialogue between the arms. Through it, the arms share information about their position, trajectory, and intent in real time. This exchange lets them adjust their movements on the fly, avoiding collisions with each other and with other obstacles in their shared workspace. A significant advantage is that the robot does not have to pause to recalculate paths, so its motions remain fluid, efficient, and natural-looking. The combination of imitation learning with this communication framework has been validated both in computer simulation and on real robotic platforms.
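
To give a feel for the kind of message exchange involved, here is a minimal Python sketch of Gaussian Belief Propagation on a toy problem. It is not the UC3M implementation: the one-dimensional setup, the names `Gaussian`, `prior`, `spacing_message`, and `coordinate`, and all numeric values are assumptions made for illustration. Each arm holds a Gaussian belief about where it wants to be along a shared axis, and a single "spacing" factor asks the two arms to stay a given gap apart.

```python
from dataclasses import dataclass


@dataclass
class Gaussian:
    """1-D Gaussian in information form: precision lam, information eta = lam * mean."""
    lam: float
    eta: float

    @property
    def mean(self) -> float:
        return self.eta / self.lam


def prior(mean: float, std: float) -> Gaussian:
    """Belief an arm holds from its own learned motion (hypothetical values)."""
    lam = 1.0 / std ** 2
    return Gaussian(lam, lam * mean)


def spacing_message(other: Gaussian, weight: float, gap: float,
                    j_target: float, j_other: float) -> Gaussian:
    """Message from the spacing factor (right - left = gap) to one arm.

    The factor is combined with the other arm's belief, and the other arm
    is then marginalised out, which is the standard GBP factor-to-variable step.
    """
    denom = weight * j_other ** 2 + other.lam
    cross = weight * j_target * j_other
    lam = weight * j_target ** 2 - cross ** 2 / denom
    eta = weight * gap * j_target - cross * (weight * gap * j_other + other.eta) / denom
    return Gaussian(lam, eta)


def coordinate(left: Gaussian, right: Gaussian, gap: float, gap_std: float):
    """Exchange one round of messages and return the updated mean positions."""
    weight = 1.0 / gap_std ** 2
    # Residual is (right - left - gap): Jacobian is -1 for left, +1 for right.
    to_left = spacing_message(right, weight, gap, j_target=-1.0, j_other=1.0)
    to_right = spacing_message(left, weight, gap, j_target=1.0, j_other=-1.0)
    new_left = Gaussian(left.lam + to_left.lam, left.eta + to_left.eta)
    new_right = Gaussian(right.lam + to_right.lam, right.eta + to_right.eta)
    return new_left.mean, new_right.mean


# The arms would like to sit at 0.0 m and 0.1 m, but must keep about 0.3 m apart.
left_pos, right_pos = coordinate(prior(0.0, 0.1), prior(0.1, 0.1), gap=0.3, gap_std=0.01)
print(left_pos, right_pos)
```

With these assumed numbers, the two arms each give way by roughly the same amount and end up close to 0.3 m apart. Because the toy graph has only two variables and one factor, a single exchange already yields the exact answer; a real system would repeat such exchanges over many variables and time steps.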

From Rigid Repetition to Intelligent Adaptation

This methodology addresses the limitations of both traditional robot programming and earlier forms of imitation learning. Historically, programming a robot to perform even a simple task required engineers to write thousands of lines of meticulous code defining every movement, contingency, and potential interaction. The process was time-consuming and laborious, and it produced rigid behaviors that could not adapt to slight changes in the environment. Imitation learning offered a more intuitive alternative by letting robots learn from demonstration, but early implementations had a critical flaw: they could only repeat a learned movement exactly. If an object’s position changed, for instance if a bottle was moved a few inches from its original spot, a robot relying on simple imitation would repeat the original gesture and fail the task.

The UC3M researchers overcome this limitation with a more adaptable form of learning. Their system treats learned movements as elastic, much like a “rubber band” that can stretch and deform while remaining connected to its anchor points. When a target object’s position changes, the robot’s learned trajectory deforms smoothly to reach the new location. Crucially, this adaptation preserves the constraints of the original action. For example, when picking up a bottle to pour its contents, the robot keeps the bottle upright during transport to avoid spilling, and it maintains this constraint even as it adjusts its path to a new target. This focus on adaptation and context, rather than mechanical repetition, is a cornerstone of the research and a step toward turning robots from simple movement recorders into collaborative partners.
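
The rubber-band idea can be illustrated with a short Python sketch. The article does not describe the researchers' actual algorithm, so this is a stand-in under stated assumptions: a demonstrated path is a list of 3-D waypoints, the function name `deform_trajectory` is hypothetical, and the deformation is a simple smooth blend that keeps the start point fixed and moves the end point to the new target. Orientation constraints such as keeping a bottle upright are not modelled here.

```python
def deform_trajectory(demo, new_goal):
    """Stretch a demonstrated path so it ends at new_goal while its start stays fixed.

    demo: list of [x, y, z] waypoints recorded from a demonstration (assumed format).
    new_goal: [x, y, z] position the path should now end at.
    """
    n = len(demo)
    if n < 2:
        raise ValueError("need at least two waypoints")
    # How far the goal has moved relative to the demonstrated end point.
    shift = [g - p for g, p in zip(new_goal, demo[-1])]
    deformed = []
    for i, point in enumerate(demo):
        s = i / (n - 1)                    # progress along the path, 0 to 1
        w = 3 * s ** 2 - 2 * s ** 3        # smoothstep: 0 at start, 1 at end
        deformed.append([p + w * d for p, d in zip(point, shift)])
    return deformed


# A demonstrated reach, then the same reach with the target moved 10 cm sideways.
demo = [[0.0, 0.0, 0.0], [0.1, 0.0, 0.1], [0.2, 0.0, 0.1], [0.3, 0.0, 0.0]]
adapted = deform_trajectory(demo, [0.3, 0.1, 0.0])
for waypoint in adapted:
    print(waypoint)
```

The first waypoint is unchanged, the last lands on the new target, and the waypoints in between shift progressively, so the overall shape of the demonstrated motion is retained.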

The Architecture of an Autonomous Assistant

The operational architecture of the ADAM robot is logically structured into a three-phase cycle: perception, reasoning, and action. The perception phase is dedicated to gathering comprehensive data about the environment through an array of advanced sensors. ADAM is equipped with 2D and 3D laser sensors, which it uses to measure distances with high precision, detect and map static and dynamic obstacles, and accurately locate objects within its workspace. Complementing these are RGB cameras integrated with depth-sensing capabilities. This fusion of sensory inputs allows the robot to generate detailed and continuously updated three-dimensional models of its surroundings, providing it with the rich environmental awareness necessary to perform complex tasks safely and effectively in cluttered, real-world settings like a home kitchen or living room. This robust perception is the foundation upon which all subsequent decision-making and physical actions are built.

Following perception is the reasoning phase, where the robot processes the vast amount of sensory data to extract relevant, actionable information. This is where a significant and ongoing research challenge lies: moving beyond simply “seeing” objects to truly “understanding” their function, purpose, and contextual relevance to the user’s current needs and goals. While traditional approaches often relied on static, pre-programmed common-sense databases, the UC3M team is actively working to incorporate more dynamic artificial intelligence. A key focus is the integration of generative models, which will empower the robot to adapt its behavior based on the specific, unfolding situation rather than on generalized, inflexible rules. Finally, the action phase is when the robot, having perceived its environment and reasoned about the best course of action, executes a decision. This could involve moving its mobile base to a new location, coordinating its ambidextrous arms to manipulate an object, or performing a specific user-requested task.
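
The three-phase cycle can be pictured as a simple loop. The following Python sketch is purely illustrative: the function names, the dictionary-based "world", and the string outputs are hypothetical placeholders, not ADAM's software, and the reasoning step is reduced to a lookup rather than any generative model.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """What the robot currently knows: object name -> (x, y) position in metres."""
    objects: dict


def perceive(world: dict) -> Observation:
    """Perception: stand-in for fusing laser and RGB-D data into a scene model."""
    return Observation(objects=dict(world))


def reason(obs: Observation, request: str):
    """Reasoning: decide what to do about the user's request given the scene."""
    if request not in obs.objects:
        return ("report_missing", request)
    return ("fetch", obs.objects[request])


def act(decision) -> str:
    """Action: stand-in for moving the base and coordinating the arms."""
    kind, payload = decision
    if kind == "fetch":
        return f"moving to {payload} and grasping"
    return f"cannot find {payload}"


def run_cycle(world: dict, request: str) -> str:
    """One pass through perception, reasoning, and action."""
    return act(reason(perceive(world), request))


print(run_cycle({"glass": (1.0, 2.0)}, "glass"))
print(run_cycle({"glass": (1.0, 2.0)}, "medication"))
```

Keeping the phases as separate functions mirrors the separation described above: the reasoning step could be swapped for a richer model without touching perception or action.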

Envisioning a Future of Home Assistance

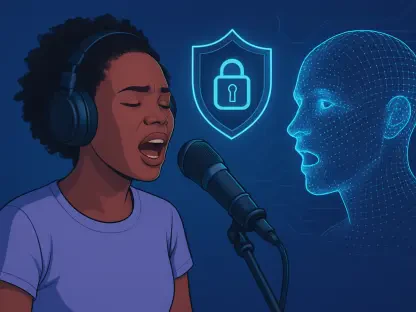

From a practical and societal perspective, the project acknowledges both the current state and the future potential of assistive robotics. At present, ADAM is an advanced experimental platform with a substantial cost, estimated at between 80,000 and 100,000 euros, which limits its immediate widespread adoption. However, the underlying technology is considered mature, and the researchers project that within 10 to 15 years robots with these capabilities could become a common presence in homes at a significantly more affordable price. The work is framed within a major global demographic shift: population aging. As the number of elderly people continues to increase and the number of available human caregivers declines, technology is seen as a critical means of filling the gap. Assistive robots therefore emerge as a key tool for improving the quality of life, independence, and autonomy of people in their later years.