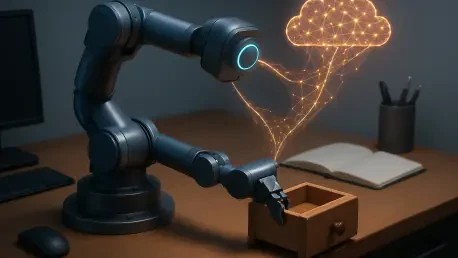

Teaching a robot a new skill has long been a bottleneck in artificial intelligence, often requiring extensive human supervision even for simple tasks. This reliance on manual feedback has historically limited the pace and scale of robotics development. Recent work from Stanford University proposes a way to automate this step, using a vision-language model as a tireless, automated tutor for robots. The work takes on the cost of human labor directly by asking whether an AI model can reliably evaluate a robot’s performance, which would make robot learning more autonomous and efficient.

Introducing RoboReward: An AI Ecosystem for Autonomous Robot Training

At the heart of this research is RoboReward, an integrated ecosystem for automating the training and evaluation of robotic systems. It targets a core challenge in modern robotics: costly, labor-intensive dependence on human intervention. Traditionally, training a robot involves a person watching many attempts and labeling each one a “success” or a “failure.” RoboReward aims to replace this manual step with an automated one.

The central question is whether a specialized vision-language model (VLM) can function as an automated “reward model.” Instead of a human, the model watches a video of the robot’s attempt, reads the task description, and outputs a graded score for how well the robot performed. If this works, it would shorten the training cycle, make feedback more consistent and scalable, and free researchers to focus on higher-level problems.
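To make the difference between a binary label and a graded score concrete, here is a minimal sketch. It assumes, purely for illustration, that the reward model emits an integer progress score from 1 (no progress) to 5 (task complete); the 1-to-5 scale and the function names `progress_to_reward` and `binary_label` are hypothetical, not RoboReward’s published interface.

```python
# Hypothetical sketch: the 1-5 progress scale is an illustrative assumption,
# not RoboReward's documented output format.
MIN_SCORE, MAX_SCORE = 1, 5


def progress_to_reward(score: int) -> float:
    """Map a discrete progress score onto a graded reward in [0.0, 1.0]."""
    if not MIN_SCORE <= score <= MAX_SCORE:
        raise ValueError(f"score must be in [{MIN_SCORE}, {MAX_SCORE}], got {score}")
    return (score - MIN_SCORE) / (MAX_SCORE - MIN_SCORE)


def binary_label(score: int) -> float:
    """Collapse the same score into the success/failure label a human might give."""
    return 1.0 if score == MAX_SCORE else 0.0


# A near-miss (score 4) earns partial credit under the graded reward
# but looks exactly like a total failure under the binary label.
for score in (1, 4, 5):
    print(f"score={score} graded={progress_to_reward(score):.2f} binary={binary_label(score)}")
```

The graded version gives a learning robot a signal even on attempts that fall just short, which a plain success/failure label throws away.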

The High Cost of Human Supervision in Robotics

This work builds on the conventional approach to robot training, which relies heavily on a machine learning paradigm called reinforcement learning. In reinforcement learning, a robot learns by trial and error, gradually improving its strategy based on the feedback, or “rewards,” it receives for its actions. The approach is powerful, but on real robots someone has to supply those rewards, and in practice that usually means human supervision. This is its primary bottleneck.

This process is inefficient and expensive: human operators may have to review thousands, or even tens of thousands, of robot trials to provide the necessary feedback. That cost is a significant barrier for smaller research labs and slows progress across the field. A scalable, efficient, and accessible way to train capable robots is therefore a practical requirement, not just an academic exercise, for deploying autonomous systems in the real world.
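To see why the reward source becomes the bottleneck, here is a minimal sketch of trial-and-error learning written as an epsilon-greedy multi-armed bandit, a deliberately simplified stand-in for full reinforcement learning. The three “strategies,” their success rates, and the `judge` function are hypothetical. The point is the counter: every trial triggers one call to the reward source, which in conventional setups means one human review.

```python
import random
from typing import Callable

# Hypothetical toy setup: three candidate strategies with hidden success rates.
TRUE_SUCCESS_RATES = [0.2, 0.5, 0.8]


def make_judge(rng: random.Random) -> Callable[[int], float]:
    """Hypothetical reward source: a human reviewer today, a reward model tomorrow."""
    def judge(strategy: int) -> float:
        return 1.0 if rng.random() < TRUE_SUCCESS_RATES[strategy] else 0.0
    return judge


def train(judge: Callable[[int], float], trials: int, epsilon: float,
          rng: random.Random) -> tuple[list[float], int]:
    n = len(TRUE_SUCCESS_RATES)
    counts = [0] * n
    values = [0.0] * n  # running mean reward observed for each strategy
    reward_queries = 0
    for _ in range(trials):
        # Explore a random strategy with probability epsilon, else exploit the best estimate.
        if rng.random() < epsilon:
            choice = rng.randrange(n)
        else:
            choice = max(range(n), key=lambda i: values[i])
        reward = judge(choice)  # every single trial needs a judgement
        reward_queries += 1
        counts[choice] += 1
        values[choice] += (reward - values[choice]) / counts[choice]
    return values, reward_queries


rng = random.Random(0)
values, queries = train(make_judge(rng), trials=5000, epsilon=0.1, rng=rng)
best = max(range(len(values)), key=lambda i: values[i])
print(f"best strategy: {best}, reward queries: {queries}")
```

Swapping `judge` for an automated scorer leaves the learning loop unchanged; only the cost of each of those thousands of queries changes.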

Research Methodology, Findings, and Implications

Methodology

To build an effective AI tutor, the researchers first constructed a dedicated training resource: the RoboReward dataset. This collection of real-robot videos goes beyond demonstrations of success. It includes complete failures and near-misses, which a model needs in order to learn the difference between a full execution and a partial one. Each video is paired with a natural-language task description and a corresponding progress score.
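A single record in such a dataset might look like the sketch below. The field names, file paths, and the 1-to-5 progress scale are hypothetical illustrations, not the published RoboReward schema, and the example rows are invented to show how one instruction can appear with a success, a near-miss, and a failure.

```python
from dataclasses import dataclass

MIN_PROGRESS, MAX_PROGRESS = 1, 5  # hypothetical scale: 1 = no progress, 5 = task complete


@dataclass(frozen=True)
class RewardExample:
    """One hypothetical training record: a robot video, its instruction, and a progress label."""
    video_path: str  # placeholder path to a real-robot clip
    task: str        # natural-language task description
    progress: int    # progress score on the assumed 1-5 scale

    def __post_init__(self) -> None:
        # Reject labels outside the assumed scale so bad data fails early.
        if not MIN_PROGRESS <= self.progress <= MAX_PROGRESS:
            raise ValueError(
                f"progress must be in [{MIN_PROGRESS}, {MAX_PROGRESS}], got {self.progress}"
            )


# Invented rows: the same instruction with a success, a near-miss, and a failure.
examples = [
    RewardExample("clips/drawer_success.mp4", "open the top drawer", 5),
    RewardExample("clips/drawer_near_miss.mp4", "open the top drawer", 4),
    RewardExample("clips/drawer_failure.mp4", "open the top drawer", 1),
]

# A success-only dataset would keep just the first row; the other two give
# the model contrasting cases for the same instruction.
contrastive = [e for e in examples if e.progress < MAX_PROGRESS]
print(len(contrastive), "of", len(examples), "examples are not full successes")
```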

Using this dataset, the team trained two general-purpose vision-language reward models, RoboReward 4B and RoboReward 8B (about 4 billion and 8 billion parameters, respectively). The models take video as input and produce reward signals that can directly guide a robot’s learning. To make their results testable and comparable with other approaches, the researchers also built RoboRewardBench, a human-verified evaluation suite that provides a standardized way to measure the accuracy of any automated reward model.
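At its core, a benchmark like RoboRewardBench compares a model’s scores against human-verified labels. The sketch below shows one simple way to do that, assuming the same hypothetical 1-to-5 progress scale; the metrics (accuracy within a tolerance and mean absolute error) and the toy predictions are illustrative choices, not necessarily what RoboRewardBench reports.

```python
from statistics import mean


def evaluate_reward_model(predicted: list[int], reference: list[int],
                          tolerance: int = 0) -> dict[str, float]:
    """Score a reward model against human-verified progress labels.

    Assumes both lists hold hypothetical 1-5 progress scores for the same clips.
    """
    if len(predicted) != len(reference):
        raise ValueError("predicted and reference must have the same length")
    if not predicted:
        raise ValueError("need at least one labeled clip")
    errors = [abs(p - r) for p, r in zip(predicted, reference)]
    return {
        # Fraction of clips where the model is within `tolerance` of the human label
        "accuracy": mean(1.0 if e <= tolerance else 0.0 for e in errors),
        # Average distance from the human label, in score units
        "mae": mean(errors),
    }


# Toy example: human labels for four clips and two hypothetical models' predictions.
reference = [5, 3, 1, 5]
model_a = [5, 3, 2, 4]
model_b = [3, 1, 3, 5]
results_a = evaluate_reward_model(model_a, reference)
results_b = evaluate_reward_model(model_b, reference)
print(results_a)
print(results_b)
```

Because every model is scored against the same fixed, human-checked labels, the numbers are directly comparable across models, which is the point of a standardized benchmark.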

Findings

The experiments produced encouraging results. Robots trained with reward signals from the RoboReward models acquired new skills in real-world training, such as opening a drawer or picking and placing an object. This shows that an AI-driven feedback loop can serve as a viable substitute for continuous human oversight in these scenarios.

On RoboRewardBench, RoboReward 8B and 4B also significantly outperformed larger, general-purpose VLMs considered state-of-the-art. This suggests that a smaller model trained on targeted, high-quality, domain-specific data can beat larger models on specialized tasks. The RoboReward models also substantially narrowed the performance gap between fully automated reward systems and the gold standard of human-provided rewards, a significant step toward autonomous robot learning.
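The phrase “closing the gap” can be made precise with a normalized score. One common formulation, offered here as an assumption rather than the metric the researchers necessarily report, measures what fraction of the distance between a baseline reward source and human-provided rewards the automated rewards recover. Here $S$ stands for a task metric, for example the final success rate of a policy trained with each reward source:

$$\text{gap closed} = \frac{S_{\text{auto}} - S_{\text{base}}}{S_{\text{human}} - S_{\text{base}}}$$

A value of 0 means the automated rewards do no better than the baseline, and 1 means they match human rewards. The ratio is only defined when $S_{\text{human}} \neq S_{\text{base}}$.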

Implications

To speed up progress across the field, the research team has open-sourced the entire RoboReward project, including the dataset, models, and benchmark. This gives the research community a complete toolset and lowers the barrier to developing and testing new reward models. Open access to these resources should encourage collaboration and accelerate the development of AI for robotics.

This work provides a practical framework for automating one of the most challenging parts of the robot training pipeline. By demonstrating that automated reward generation is viable, it points toward more scalable and efficient development cycles. In turn, this could make robotics research more accessible, letting more teams contribute to building versatile autonomous systems.

Reflection and Future Directions

Reflection

A key takeaway from this research is that smaller models can outperform much larger ones when trained on high-quality, domain-specific data. This challenges the common assumption that bigger is always better for AI models and suggests that tailored datasets can yield more efficient and effective solutions within specific problem domains.

The results support the project’s core hypothesis: the RoboReward models provide reward signals accurate and useful enough to take on much of the role of a human labeler. By open-sourcing the complete toolset, the project also lays a foundation for collaborative progress and for standardized evaluation of robotic learning systems.

Future Directions

The immediate goal is to extend reward modeling to longer-horizon, multi-step tasks that better reflect real-world challenges. This requires models that understand sequences of actions and intermediate goals, going beyond the short-horizon tasks demonstrated so far. Improving the reliability and calibration of automated reward models is another priority, so that they can be deployed robustly and safely.

The researchers also expect this work to motivate broader improvements in large vision-language models. The challenges RoboReward exposes should push VLMs toward stronger physical reasoning and a finer understanding of spatial and temporal detail. These capabilities are needed to interpret and evaluate complex physical interactions, in robotics and in the wider field of artificial intelligence.

Paving the Way for More Capable and Autonomous Robots

This research’s primary contribution is a complete, open-source ecosystem that automates a critical component of robot training. By releasing the dataset, models, and benchmark, the work gives the community the tools to build on its results. The findings demonstrate a more efficient and scalable way to teach robots new skills, addressing one of the most significant hurdles in the field.

Ultimately, this study moves the field toward more versatile, autonomous, and accessible robotics. By automating tedious supervision, it frees researchers to tackle harder problems and could shorten the path to robots that operate safely and effectively alongside people in dynamic, unstructured environments. RoboReward is a significant, practical step toward more capable robot learning.