The rapid evolution of artificial intelligence (AI) has brought about significant advancements in various fields, including surveillance systems. However, the rise of deepfake technology poses a serious threat to the integrity and reliability of security footage. Deepfakes, which are synthetically generated media that appear remarkably real, can deceive the human eye and undermine trust in modern surveillance technologies. This article explores the risks associated with deepfakes, the impact on surveillance systems, and the innovative AI solutions being developed to mitigate these threats.

The Threat to Surveillance Systems

Deepfake techniques can be used to alter or fabricate security footage, which puts the reliability of surveillance systems at risk. A primary concern is manipulated evidence: forged footage can support false narratives, exonerate the guilty, or implicate the innocent. This creates serious legal and ethical problems for criminal investigations and courtroom proceedings, where the authenticity of evidence is paramount.

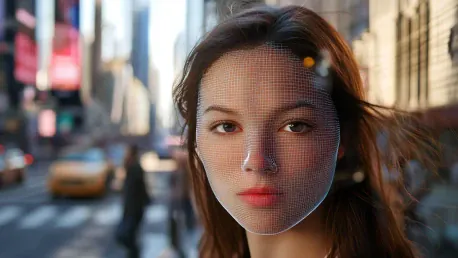

Additionally, deepfakes can be used to defeat facial recognition systems. A synthetic face that the system accepts as genuine can give an attacker unauthorized access to secure areas or accounts, which compromises physical security and exposes sensitive information and assets. Moreover, deepfakes can feed disinformation campaigns: convincing but false footage spreads misinformation and erodes public trust in surveillance technology.

Furthermore, generative AI can be used to craft inputs that mislead the machine learning models surveillance systems rely on for threat assessment, producing false positives or false negatives. The result is either unnecessary alarms or missed threats, both of which undermine the effectiveness of surveillance systems. Taken together, these risks underscore the urgent need for robust mitigation strategies to safeguard surveillance systems against deepfake threats.

AI-Based Detection Tools

To combat the growing threat of deepfakes, AI-based detection tools are being developed to identify manipulated media. These tools look for inconsistencies in lighting, shadows, or pixel patterns that deepfake content often contains. Microsoft’s Video Authenticator and Deepware Scanner are two examples of tools built to flag manipulated video using AI models.

These detection tools work by examining the subtle details that are often overlooked by the human eye. For example, inconsistencies in the way light reflects off a person’s face or the unnatural movement of facial features can be indicators of deepfake manipulation. By training AI models on large datasets of both genuine and fake media, these tools can learn to identify patterns and anomalies that suggest tampering.
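The idea of flagging lighting inconsistencies can be illustrated with a deliberately simple sketch. It does not train a model and is not how Video Authenticator or Deepware Scanner work; it only checks for abrupt frame-to-frame brightness changes using a median-based outlier test. The function names, the grayscale list-of-rows frame format, and the threshold values are all assumptions made for this example.

```python
from statistics import median


def frame_brightness(frame):
    """Mean pixel intensity of a grayscale frame given as a list of rows."""
    total = sum(sum(row) for row in frame)
    count = sum(len(row) for row in frame)
    return total / count


def flag_inconsistent_frames(frames, threshold=5.0, min_scale=1.0):
    """Return indices of frames that follow an abnormally large brightness jump.

    Each frame-to-frame brightness change is compared with the median change,
    scaled by the median absolute deviation (MAD). min_scale (hypothetical
    default: one intensity unit) keeps near-constant footage from producing
    a zero scale and flagging trivial fluctuations.
    """
    levels = [frame_brightness(f) for f in frames]
    jumps = [abs(b - a) for a, b in zip(levels, levels[1:])]
    if not jumps:
        return []
    med = median(jumps)
    mad = median(abs(j - med) for j in jumps)
    scale = max(mad, min_scale)
    # jumps[i] is the change between frame i and frame i + 1
    return [i + 1 for i, j in enumerate(jumps) if (j - med) / scale > threshold]


if __name__ == "__main__":
    # Synthetic 4x4 frames: steady brightness with one much brighter frame at index 5.
    values = [100, 101, 100, 101, 100, 140, 101, 100, 101, 100]
    frames = [[[v] * 4 for _ in range(4)] for v in values]
    print(flag_inconsistent_frames(frames))  # [5, 6]
```

Both the jump into the altered frame and the jump back out are flagged, which is why two indices are reported. A production detector would learn far subtler cues from labeled genuine and fake media, as described above.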

While AI-based detection tools serve as a crucial component of the defense against deepfakes, they are not foolproof. As deepfake technology continues to evolve, so too must the detection methods. Continuous research and development are necessary to stay ahead of the increasingly sophisticated techniques used to create deepfakes. Collaboration between AI researchers, cybersecurity experts, and the tech industry is essential to ensure the effectiveness of these detection tools.

Blockchain for Data Integrity

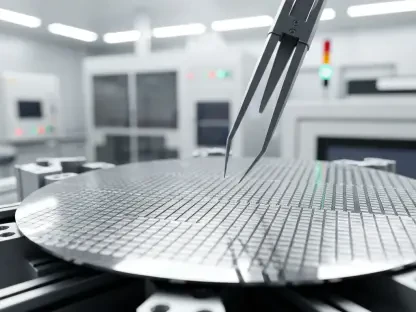

Blockchain technology offers a promising solution for preserving the integrity of security footage. By recording a cryptographic hash of each piece of footage in a tamper-evident ledger, a blockchain makes later alterations to the data detectable. The hash serves as a digital fingerprint: recomputing it from the footage and comparing it with the recorded value verifies the footage’s authenticity.
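The fingerprinting idea can be sketched with a minimal hash chain. This is a single-process illustration under stated assumptions, not a distributed blockchain: there is no consensus, networking, or signing. The names `append_segment`, `verify_footage`, and `GENESIS_HASH`, along with the ledger entry layout, are hypothetical; only `hashlib.sha256` and `json.dumps` come from the Python standard library.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def segment_fingerprint(segment_bytes):
    """SHA-256 digest of a footage segment, used as its digital fingerprint."""
    return hashlib.sha256(segment_bytes).hexdigest()


def _entry_hash(index, fingerprint, prev_hash):
    """Hash of an entry's contents, which binds it to the previous entry."""
    payload = json.dumps(
        {"index": index, "fingerprint": fingerprint, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_segment(ledger, segment_bytes):
    """Append a ledger entry for one footage segment and return it."""
    index = len(ledger)
    prev_hash = ledger[-1]["entry_hash"] if ledger else GENESIS_HASH
    fingerprint = segment_fingerprint(segment_bytes)
    entry = {
        "index": index,
        "fingerprint": fingerprint,
        "prev_hash": prev_hash,
        "entry_hash": _entry_hash(index, fingerprint, prev_hash),
    }
    ledger.append(entry)
    return entry


def verify_footage(ledger, segments):
    """Return the index of the first entry or segment that fails, else None."""
    if len(ledger) != len(segments):
        return min(len(ledger), len(segments))
    prev_hash = GENESIS_HASH
    for i, (entry, segment) in enumerate(zip(ledger, segments)):
        expected = _entry_hash(entry["index"], entry["fingerprint"], entry["prev_hash"])
        if entry["index"] != i or entry["prev_hash"] != prev_hash:
            return i  # chain linkage was broken
        if entry["entry_hash"] != expected:
            return i  # ledger entry itself was edited
        if entry["fingerprint"] != segment_fingerprint(segment):
            return i  # footage no longer matches its recorded fingerprint
        prev_hash = entry["entry_hash"]
    return None


if __name__ == "__main__":
    ledger = []
    footage = [b"segment-0", b"segment-1", b"segment-2"]
    for seg in footage:
        append_segment(ledger, seg)
    print(verify_footage(ledger, footage))  # None
    print(verify_footage(ledger, [b"segment-0", b"forged", b"segment-2"]))  # 1
```

Only the hashes go into the ledger; the footage itself stays in ordinary storage. That choice keeps ledger entries small regardless of video size, which matters for the data volumes surveillance systems produce.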

The use of blockchain in surveillance systems can provide a transparent and immutable record of security footage. This can be particularly valuable in legal contexts, where the chain of custody and the integrity of evidence are critical. Leveraging blockchain enables organizations to create a secure and verifiable record of surveillance data, reducing the risk of tampering and manipulation.

However, the implementation of blockchain technology in surveillance systems is not without challenges. The cost and complexity of integrating blockchain with existing infrastructure can be significant, particularly for smaller organizations with limited resources. Additionally, the scalability of blockchain solutions must be addressed to ensure they can handle the large volumes of data generated by surveillance systems. Despite these challenges, the potential benefits of blockchain for data integrity make it a valuable tool in the fight against deepfakes.

Watermarking and Metadata Verification

The rapid evolution of AI has advanced many fields, including surveillance, but it has also brought new challenges, most notably deepfake technology. Because this synthetically generated media looks real enough to deceive even careful viewers, it threatens the integrity and trustworthiness of security footage and undermines confidence in modern surveillance technologies.

Deepfakes can compromise the effectiveness of surveillance systems by causing false alarms, misidentifications, and a general distrust of video evidence. To counter these threats, researchers and tech companies are developing AI solutions that detect deepfakes and mitigate their impact, with the aim of keeping surveillance systems reliable as synthetic media grows more sophisticated.