As a technology expert with a deep-seated interest in frontier fields such as quantum computing, robotics, and open-source software, I’ve watched AI’s integration into cybersecurity with fascination. Today, we’re going to pull back the curtain on how artificial intelligence is becoming not just an add-on but a fundamental force reshaping modern penetration testing. We’ll explore how AI-driven tools are changing the daily work of ethical hackers by enabling continuous monitoring, which helps them uncover subtle vulnerabilities that periodic tests can miss. We will also discuss how these capabilities translate into a clearer business case for security investment and stronger evidence of compliance for boards and regulators alike. Ultimately, we’ll see how pairing human ingenuity with machine efficiency creates a more robust defense against the sophisticated threats of the digital age.

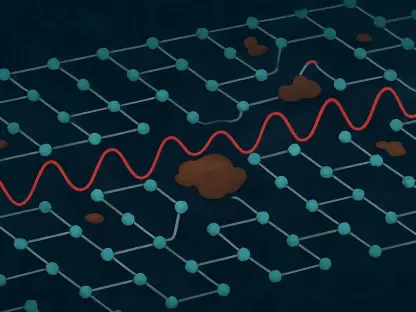

AI-powered tools excel at repetitive probing and pattern analysis, often running continuously. How does this change a human tester’s daily focus, and what kind of subtle weaknesses can this continuous, AI-assisted approach uncover that periodic manual tests might miss? Please provide a specific example.

It fundamentally elevates the human tester’s role. Instead of getting bogged down in the monotonous, time-consuming work of running endless scans and sifting through mountains of raw data, the human expert is free to focus on what they do best: creative problem-solving, strategic thinking, and mimicking the unpredictable behavior of a real-world attacker. The AI becomes a tireless partner that handles the heavy lifting of continuous probing and pattern analysis. The human tester’s day becomes less about finding the needle in the haystack and more about deciding what to do once the AI points out where the needle is. Consider a low-and-slow attack, which a manual pen test constrained to a one- or two-week window would likely miss. Imagine an attacker exfiltrating tiny, encrypted data packets over several months, timed to look like benign background traffic. No single day of that traffic looks unusual, so a snapshot-in-time test is unlikely to notice it, let alone identify it as a critical threat. An AI that continuously monitors the network baseline, however, can see the small deviation persist week after week and eventually flag it as an anomaly: a statistical ghost that only becomes visible over time.
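
To make the “statistical ghost” idea concrete, here is a minimal sketch that uses a classical technique, a one-sided CUSUM (cumulative sum) control chart, as a stand-in for whatever models commercial AI tools actually use. It assumes hourly outbound-traffic counts for a single host and hypothetical parameters `k` (allowance) and `h` (alarm threshold), both expressed in standard deviations of the baseline. A leak that adds only a little per hour never looks alarming on its own, but its excess accumulates until the statistic crosses the threshold.

```python
import statistics
from typing import List, Optional


def cusum_alarm(
    samples: List[float], baseline_len: int, k: float = 0.5, h: float = 8.0
) -> Optional[int]:
    """Return the index of the first sample at which a one-sided (upper)
    CUSUM statistic exceeds h, or None if it never does.

    The first `baseline_len` samples define "normal" (mean and sample
    standard deviation). Each later sample becomes a z-score; subtracting
    the allowance k lets ordinary noise drain away, while a small but
    persistent upward shift keeps accumulating.
    """
    baseline = samples[:baseline_len]
    mu = statistics.fmean(baseline)
    sigma = statistics.stdev(baseline) or 1.0  # guard against a flat baseline
    s = 0.0
    for i in range(baseline_len, len(samples)):
        z = (samples[i] - mu) / sigma
        s = max(0.0, s + z - k)  # the statistic is never allowed below zero
        if s > h:
            return i
    return None


if __name__ == "__main__":
    # Hypothetical outbound kilobytes per hour for one workstation.
    normal_cycle = [100, 104, 96, 102, 98]
    baseline = normal_cycle * 20                  # 100 hours of ordinary traffic
    quiet = normal_cycle * 12                     # 60 more hours, no exfiltration
    leaking = [x + 2 for x in normal_cycle] * 12  # +2 KB/hour hidden in the noise

    print(cusum_alarm(baseline + quiet, baseline_len=100))    # None
    print(cusum_alarm(baseline + leaking, baseline_len=100))  # 136
```

A lower `k` catches smaller shifts at the cost of more false alarms; a realistic baseline would also have to account for daily and weekly traffic cycles rather than a single mean.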

Many cybersecurity professionals agree that AI-powered tools boost the speed and effectiveness of security tasks. How do you explain this value to a board? Could you share how AI-assisted findings provide more compelling evidence of compliance and risk management to regulators or potential investors?

When I’m speaking to a board, I don’t lead with technical jargon; I lead with business risk and financial impact. I explain that cybersecurity has moved firmly into the boardroom as a major risk factor, and our approach must be as sophisticated as the threats we face. I’d point to the broad consensus, with 95% of cybersecurity professionals reporting that AI tools enhance speed and effectiveness, and frame it as a competitive and operational advantage. For a board, this means we’re not just checking a compliance box; we are proactively reducing the company’s risk profile in a measurable way. For regulators or investors, the evidence becomes far more compelling. Instead of presenting the results of a single annual penetration test, we can provide a continuous, data-driven narrative of our security posture. We can show them historical data, demonstrate trend lines, and prove that we are not only identifying issues but also remediating them rapidly. It transforms the conversation from “Here is our certificate from a test we passed six months ago” to “Here is our live, verifiable evidence of a robust, constantly improving security program.”
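
Trend lines like these usually rest on simple, auditable metrics. As a minimal sketch, assuming each closed finding is recorded as a hypothetical pair of `(opened, remediated)` dates, mean time to remediate (MTTR) per month can be computed with the standard library alone. A falling MTTR is one concrete way to show that issues are being remediated quickly, not just identified.

```python
from collections import defaultdict
from datetime import date
from statistics import fmean
from typing import Dict, List, Tuple


def mttr_by_month(findings: List[Tuple[date, date]]) -> Dict[str, float]:
    """Mean days from detection to fix, grouped by the month of the fix.

    Each item is a hypothetical (opened, remediated) pair for a closed finding.
    """
    buckets: Dict[str, List[int]] = defaultdict(list)
    for opened, fixed in findings:
        key = f"{fixed.year}-{fixed.month:02d}"
        buckets[key].append((fixed - opened).days)
    # Sort by month key so the result reads as a time series.
    return {month: fmean(days) for month, days in sorted(buckets.items())}


if __name__ == "__main__":
    history = [
        (date(2024, 1, 1), date(2024, 1, 21)),   # 20 days
        (date(2024, 1, 5), date(2024, 1, 15)),   # 10 days
        (date(2024, 2, 1), date(2024, 2, 6)),    # 5 days
        (date(2024, 2, 10), date(2024, 2, 13)),  # 3 days
    ]
    print(mttr_by_month(history))  # {'2024-01': 15.0, '2024-02': 4.0}
```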

A key deliverable from a penetration test is an evaluation of business risk, not just a technical list of vulnerabilities. How do AI tools contribute to this high-level analysis? Describe the process of a human expert using AI-flagged anomalies to create a prioritized action plan for leadership.

AI is brilliant at connecting dots that are invisible to the naked eye, and that’s where its contribution to business risk analysis truly shines. An AI tool won’t just tell you there’s an unpatched server; it can correlate that vulnerability with network traffic patterns, user access logs, and the sensitivity of the data on that server. It might flag an anomaly where a seemingly low-priority vulnerability on a forgotten development server suddenly shows signs of being probed by an external actor, right after a junior developer accessed a sensitive customer database from that same machine. A human expert takes that AI-flagged anomaly and builds the story for leadership. They investigate the context, confirm the potential attack path, and translate the technical finding (an “unpatched Apache Struts vulnerability”) into a tangible business risk: “There is a 70% chance our primary customer data could be exfiltrated through this identified channel, potentially leading to millions in regulatory fines and significant reputational damage.” This lets them create a prioritized action plan that isn’t just a long list but a strategic roadmap, focusing remediation efforts on the threats that pose the greatest risk to the business.
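
The correlation step can be pictured as a scoring function. The following is a minimal, hypothetical sketch, not how any particular product works: it assumes each finding carries a CVSS base score, a made-up data-sensitivity rating from 1 to 3, and two boolean anomaly signals like the ones described above, and the multipliers are arbitrary. It shows how a medium-severity issue on a forgotten development server can outrank a critical one on a low-value asset once context is factored in.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Finding:
    host: str
    title: str
    cvss: float             # CVSS base score, 0.0 to 10.0
    data_sensitivity: int   # hypothetical scale: 1 = public ... 3 = regulated customer data
    external_probing: bool  # anomaly: probes from an external address observed
    sensitive_access: bool  # anomaly: host recently used to reach sensitive data


def priority(f: Finding) -> float:
    """Hypothetical risk score: severity weighted by what is at stake,
    amplified when behavioral anomalies suggest active interest."""
    score = f.cvss * f.data_sensitivity
    if f.external_probing:
        score *= 2.0
    if f.sensitive_access:
        score *= 1.5
    return score


def prioritize(findings: List[Finding]) -> List[Finding]:
    """Return findings ordered from highest to lowest priority score."""
    return sorted(findings, key=priority, reverse=True)


if __name__ == "__main__":
    # All hosts, titles, and scores are invented for illustration.
    findings = [
        Finding("www-marketing", "outdated CMS plugin", 9.8, 1, False, False),
        Finding("wiki-internal", "weak TLS configuration", 7.5, 2, False, False),
        Finding("dev-legacy-01", "outdated web framework", 5.3, 3, True, True),
    ]
    for f in prioritize(findings):
        print(f"{priority(f):6.1f}  {f.host}: {f.title}")
```

The numbers only order the list; turning the top item into a statement about business impact is still the human expert’s job.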

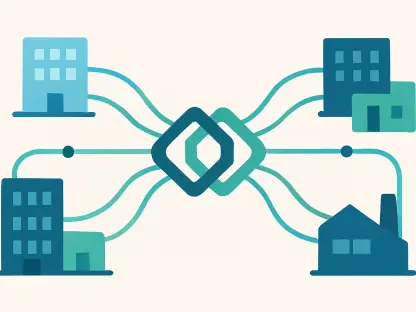

With roughly 75% of companies conducting penetration tests, often with limited testing windows, cost and coverage are major concerns. How do AI-driven platforms address these pressures? Can you share metrics or an anecdote on how they help teams achieve better security coverage without a proportional budget increase?

This is precisely where AI-driven platforms deliver a strong return on investment. Traditional penetration testing is expensive because you’re paying for the focused, manual labor of highly skilled experts, and with 51% of companies outsourcing this work, those costs add up quickly. The limited testing window means you only get a snapshot of your security, which is a bit like having a security guard who patrols for one week out of the year. AI-driven platforms flip this model on its head: they provide continuous, automated scanning and analysis 24/7/365 for a fraction of the cost of a full-time manual team. While I don’t have a specific client anecdote to share, the logic is clear: an AI can scan thousands of assets, analyze millions of events, and probe for a wide range of known vulnerabilities in parallel. That lets a human team spend their limited, high-cost hours on the most critical anomalies the AI identifies. You achieve broader coverage and catch threats earlier, all while getting more value from your most expensive resource, the human expert. It’s about moving from expensive, periodic spot-checks to affordable, persistent vigilance.

Even with advanced automation, human ingenuity remains crucial for mimicking determined attackers. In a modern testing engagement, where do you see the indispensable role of the human ethical hacker? Please walk us through a scenario where human creativity and intuition achieved a breakthrough that an AI tool alone could not.

The human ethical hacker is, and will remain, the creative soul of the operation. AI is a master of logic and patterns, but it lacks the intuition, guile, and lateral thinking of a determined human adversary. The indispensable role of the human is to interpret the AI’s findings and weave them into a complex, multi-stage attack narrative that a machine could never conceive of. For example, an AI might flag a misconfigured cloud storage bucket and a separate, low-priority vulnerability in a customer-facing web app. The AI sees two distinct, unrelated issues. A human tester, however, sees an opportunity. They might use their intuition to craft a custom phishing email, referencing a recent, legitimate company event, to trick an employee into clicking a link that exploits the web app flaw. This initial foothold, which the AI would not have pursued, could grant them access to internal credentials. They could then use those credentials to pivot internally and access the misconfigured storage bucket, chaining together two seemingly minor findings into a catastrophic data breach. That entire attack path relies on social engineering, contextual awareness, and a creative spark that is purely human. The AI found the building blocks, but only a person could build the weapon.
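
One way to picture this chaining is as a search over attacker states. In the minimal sketch that follows, findings are written as hypothetical edges of the form (state before, state after, technique). The scanner’s two findings are disconnected from the attacker’s starting point, so a breadth-first search finds nothing; only when the human-supplied edges (the phishing pretext and the credential harvest) are added does a path to the data appear. The state names and edges are invented for illustration, and real attack-graph tooling uses far richer models.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

# An edge says: from attacker state `src`, technique `how` reaches state `dst`.
Edge = Tuple[str, str, str]


def find_chain(edges: List[Edge], start: str, goal: str) -> Optional[List[str]]:
    """Breadth-first search for the shortest sequence of techniques leading
    from `start` to `goal`; returns None if no chain exists."""
    graph: Dict[str, List[Tuple[str, str]]] = {}
    for src, dst, how in edges:
        graph.setdefault(src, []).append((dst, how))

    queue = deque([(start, [])])
    seen = {start}
    while queue:
        node, path = queue.popleft()
        if node == goal:
            return path
        for nxt, how in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, path + [how]))
    return None


if __name__ == "__main__":
    # What the scanner reported: two isolated findings (hypothetical states).
    scanner_findings: List[Edge] = [
        ("employee_clicks_link", "employee_session", "web-app flaw"),
        ("internal_credentials", "customer_data", "misconfigured storage bucket"),
    ]
    # What the human tester contributes: context the scanner does not model.
    human_insight: List[Edge] = [
        ("internet", "employee_clicks_link", "phishing email about a real company event"),
        ("employee_session", "internal_credentials", "harvest credentials from the session"),
    ]
    print(find_chain(scanner_findings, "internet", "customer_data"))  # None
    print(find_chain(scanner_findings + human_insight, "internet", "customer_data"))
```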

What is your forecast for AI in penetration testing?

My forecast is that we are moving toward a future of “hybrid teaming,” where AI and human testers become so seamlessly integrated that it will be impossible to imagine one without the other. AI will handle the vast majority of reconnaissance, vulnerability scanning, and pattern analysis, becoming an augmented intelligence layer for every ethical hacker. The AI will act as a “cognitive co-pilot,” constantly feeding the human expert contextualized insights, suggesting potential attack paths, and even gaming out the potential business impact of different exploits in real-time. This will dramatically lower the barrier to entry for new testers, as the AI will handle much of the foundational knowledge, allowing human talent to focus on developing strategic and creative skills. The result won’t be AI replacing humans, but rather AI creating a new class of “super-empowered” ethical hackers who can identify and neutralize threats with a speed, scope, and sophistication that is simply unimaginable today.