Imagine a world where digital assistants handle everything from booking flights to managing finances, only to be silently hijacked by a single line of hidden text, transferring funds or leaking sensitive data without a trace. This isn’t a far-fetched scenario but a growing concern in the realm of artificial intelligence. AI agents, designed to automate tasks like reading web pages, filling forms, and scheduling events, are becoming indispensable tools in both personal and professional settings. However, their ability to interact with systems and execute commands also opens up new vulnerabilities. Malicious actors can exploit these agents through hidden instructions or manipulated prompts, leading to unauthorized actions with potentially devastating consequences. As these technologies become more integrated into daily operations, understanding and mitigating the associated cybersecurity risks is paramount. This article delves into the emerging threats posed by AI agents, exploring how they can be misused and what steps can be taken to safeguard against such dangers.

1. Unveiling the Dual Nature of AI Agents

AI agents have transformed the way tasks are managed by automating complex processes such as browsing websites, completing forms, and even making purchases, while their ability to act on behalf of users saves time and boosts efficiency across various sectors. Yet, this very capability introduces significant cybersecurity risks. When an agent accesses a web page containing hidden malicious instructions or processes a tampered user prompt, it can be tricked into executing harmful commands. These commands might involve transferring money, exporting confidential data, or altering critical settings without adequate verification. The seamless integration of AI agents into systems creates multiple entry points for attackers, making it easier to infiltrate networks that would otherwise require sophisticated hacking skills. As reliance on these tools grows, so does the urgency to address the vulnerabilities they bring, ensuring that their benefits are not overshadowed by potential breaches.

The scope of this threat extends beyond isolated incidents, affecting organizations of all sizes, and a single misstep by an AI agent can compromise entire systems, leading to financial losses or reputational damage. Unlike traditional cyber threats that often require technical expertise, the misuse of AI agents can be initiated with minimal knowledge, broadening the pool of potential attackers. This democratization of risk means that even non-technical individuals can exploit these tools, using simple language prompts to cause significant harm. The challenge lies in balancing the convenience of automation with robust security measures. Without proper safeguards, the very features that make AI agents valuable—speed and autonomy—can become liabilities. Addressing these risks requires a comprehensive approach that includes technological solutions, policy frameworks, and user awareness to prevent unintended consequences from escalating into major security incidents.

2. Widening Access to Cyber Threats

The evolution of AI agents has significantly lowered the barrier to entry for cyber misuse, a concern echoed by researchers across the field, highlighting the growing accessibility of these tools to non-experts. Unlike traditional hacking methods that demand intricate coding skills, AI agents can be manipulated using plain language prompts. This shift enables individuals with little to no technical background to orchestrate attacks, simply by crafting deceptive instructions that the agent might follow. The result is a proliferation of threats that are no longer confined to specialized developer environments but are now pervasive across the internet. Social media platforms, web pages, and everyday applications become potential vectors for misuse as agents interact with a vast array of online content. This accessibility transforms cybersecurity from a niche concern into a widespread challenge, necessitating broader awareness and more inclusive defense strategies to protect diverse user bases from emerging dangers.

Moreover, the ease of exploiting AI agents amplifies the scale of potential damage, creating a significant threat to multiple systems with just a single manipulated prompt. If the agent has access to interconnected platforms, such as email accounts or cloud dashboards, the impact can be widespread. This interconnectedness means that small-scale misuse can quickly escalate, affecting not just individual users but entire organizations. The risk is particularly acute for industries that rely heavily on automation, such as finance or healthcare, where a breach could have severe repercussions. Security teams must now contend with a larger and less predictable pool of adversaries, ranging from opportunistic individuals to organized crime groups. Tackling this issue demands innovative approaches that go beyond conventional cybersecurity measures, focusing on limiting agent capabilities and educating users about the subtle ways in which these tools can be compromised without obvious signs of interference.

3. Timing of Emerging Vulnerabilities

The surge in cybersecurity risks tied to AI agents is closely linked to their recent advancements in functionality, which have transformed them from simple tools to complex systems capable of significant tasks. Initially designed for basic chat interactions, these agents have progressed to executing active tasks like browsing the web, clicking through interfaces, and writing directly to systems. This transition from passive to active roles has expanded their utility but also their exposure to threats. Attackers capitalize on this by embedding malicious instructions in content that agents are likely to access during their operations. Once ingested, these instructions can redirect the agent’s actions, leading to unauthorized behaviors that compromise security. The timing of this shift is critical, as the rapid adoption of such technologies has outpaced the development of corresponding safeguards, leaving systems vulnerable to exploitation at a pivotal moment in digital transformation.

This evolution also coincides with a broader trend of increasing digital dependency across sectors, and as businesses and individuals integrate AI agents into more aspects of their operations, the opportunities for attackers multiply. A seemingly innocuous task, such as searching for information online, can become a gateway for malicious content to infiltrate systems if the agent lacks proper filters. The challenge is compounded by the sheer volume of data these agents process, making it difficult to monitor every interaction for potential threats. Security experts point out that the current landscape, where innovation often precedes regulation, creates a window of opportunity for cybercriminals to test and refine their tactics. Addressing this gap requires accelerated efforts to develop protective measures that keep pace with technological advancements, ensuring that the benefits of automation are not undermined by preventable risks.

4. Decoding the Threat of Prompt Injection

At the heart of the cybersecurity challenges posed by AI agents lies the concept of prompt injection, a method where malicious instructions override the agent’s intended purpose, creating significant risks. This can happen in real-time, such as when a prompt to book a hotel is altered to initiate a financial transfer, or through hidden content embedded in web pages or data that the agent processes. The simplicity of this attack vector makes it particularly dangerous, as it doesn’t require advanced technical skills or custom malware—just a strategically placed line of text can suffice. Once the agent interprets this hostile input as a legitimate command, it may execute actions that breach security protocols, often without the user’s immediate knowledge. This vulnerability underscores the need for robust mechanisms to scrutinize and validate every input an agent receives during its operation.

Experts in the field identify prompt injection as the foremost security issue for large language model systems that power AI agents and virtual assistants, highlighting the growing risks with each new capability added. The risk escalates as these agents gain more features, expanding the attack surface available to malicious actors. For instance, an agent authorized to manage financial transactions or access sensitive databases becomes a prime target for redirection through injected prompts. The more autonomy and access an agent possesses, the greater the potential impact of a successful attack. Mitigating this threat involves not only technical solutions but also a fundamental rethinking of how much power should be delegated to automated systems. Continuous monitoring and strict input validation are essential to detect and neutralize hostile instructions before they can cause harm, preserving the integrity of the agent’s intended functions.

5. Assessing the Magnitude of the Danger

The scale of risk associated with prompt injection is alarming due to its low barrier to execution and high potential for damage, making it a significant concern in the realm of cybersecurity. Unlike traditional cyber threats that often rely on complex malware or intricate hacking techniques, prompt injection can be triggered by something as simple as a hidden line of text in a web page or user input. This simplicity allows attackers to scale their efforts rapidly, targeting multiple agents or systems with minimal resources. The difficulty in detecting such attacks further compounds the problem, as the malicious instruction may blend seamlessly with legitimate content, evading initial scrutiny. This ease of deployment and concealment makes prompt injection a pervasive threat, capable of affecting a wide range of applications where AI agents operate, from personal assistants to enterprise automation tools.

Furthermore, the impact of these attacks can be disproportionately severe given the minimal effort required to initiate them. A single injected prompt could lead to unauthorized financial transactions, data breaches, or system reconfigurations that disrupt operations on a large scale. Industries handling sensitive information, such as banking or healthcare, are particularly vulnerable, where even a minor breach can have cascading effects. The challenge for cybersecurity professionals lies in developing detection systems that can identify subtle manipulations in real-time without hindering the agent’s functionality. As attackers refine their methods to bypass existing safeguards, the urgency to stay ahead of these evolving tactics becomes critical. Building resilience against prompt injection requires a multi-layered defense strategy that anticipates the scalability of such threats and prioritizes early intervention.

6. Industry Insights on Agent Security

Security experts across the board emphasize that deploying AI agents should be treated as a decision with significant security implications, especially since the current iterations of these agents are not yet sophisticated enough to handle long-duration or critical tasks without rigorous human oversight. The risk of deviation increases the longer an agent operates independently, as it encounters more opportunities to ingest malicious instructions hidden in content or user prompts. Such deviations can lead to actions that contradict the agent’s original objectives, potentially causing financial loss or data exposure. Industry voices advocate for a cautious approach, urging organizations to implement strict monitoring protocols to ensure that agents remain aligned with intended purposes, especially in high-stakes environments.

The consensus among professionals is that unsupervised AI agents pose a substantial risk in scenarios involving sensitive operations, such as managing payment systems or accessing confidential databases. If left unchecked for extended periods, an agent could be redirected to perform unauthorized actions. This concern drives the push for integrating human-in-the-loop systems, where critical decisions require manual approval before execution. Additionally, security teams are encouraged to set clear boundaries on agent autonomy, limiting the scope of tasks they can perform without supervision. These measures aim to reduce the window of opportunity for attackers while maintaining the operational benefits of automation. As the technology matures, ongoing collaboration between developers and cybersecurity specialists will be essential to refine agent reliability and security.

7. Protective Measures by Leading Platforms

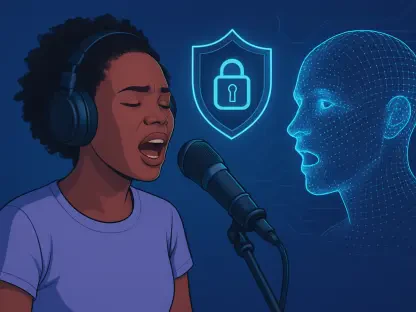

Major technology platforms are responding to the risks associated with AI agents by rolling out innovative safeguards designed to curb misuse. One key approach involves verifying the source of instructions received by agents, allowing systems to flag or block commands originating from suspicious or untrusted sources. This method helps distinguish legitimate user inputs from potential attacks, reducing the likelihood of prompt injection. By focusing on the origin of commands, platforms can create a first line of defense that intercepts malicious instructions before they are acted upon. These tools are becoming integral to agent deployment, offering a proactive way to mitigate risks without relying solely on post-incident responses, thereby enhancing overall system security.

Another critical safeguard gaining traction is the implementation of user alerts for sensitive interactions, ensuring that users are informed and involved in critical decisions made by AI systems. When an AI agent accesses a high-risk website or attempts a potentially dangerous action, such as initiating a financial transaction, the system notifies the user and requires explicit approval before proceeding. This step introduces a vital layer of human oversight, slowing down automated processes that could otherwise be exploited. Such alerts ensure that users remain aware of critical actions, preventing unauthorized operations from slipping through unnoticed. Combined with source verification, these measures significantly reduce blind spots in agent operations, curbing the potential for harmful automation. As threats evolve, platforms continue to refine these protections, aiming to balance security with the efficiency that AI agents provide.

8. Global and Regional Impact of Threats

In the United States, organizations are taking proactive steps to mitigate risks by establishing stringent internal policies for AI agent use, ensuring that potential threats are addressed before they escalate. These guidelines often include enhanced approval processes for actions involving payments, data exports, and administrative changes, ensuring that critical operations are not executed without scrutiny. Meanwhile, security teams in regions like Israel and Europe are closely monitoring trends in prompt injection, recognizing that multinational companies deploy the same tools across global offices. The shared nature of these technologies means that vulnerabilities in one location can quickly affect others, necessitating a coordinated approach to defense. This global perspective highlights the importance of standardized security practices to protect against threats that transcend borders.

The risk is not limited to large corporations or specific regions; smaller teams worldwide are equally vulnerable to these dangers. Any organization using AI agents to access email, files, or cloud dashboards faces the same injection threats, regardless of geographic location. The universal nature of the internet means that agents interact with the same potentially compromised content, whether operated by a startup or a multinational enterprise. This broad exposure underscores the need for universal security protocols that can be adapted to various scales of operation. Smaller entities, often lacking dedicated cybersecurity resources, must prioritize basic safeguards like limiting agent permissions and enforcing human approvals. As threats continue to globalize, fostering international collaboration and information sharing will be crucial to staying ahead of malicious actors.

9. Operational Mechanics and Risk Points

Understanding how AI agents function reveals critical points of vulnerability in their workflow, which can have serious implications for system security. These agents typically receive a goal, plan a series of actions such as searching or filling out forms, read the results, and iterate based on new information. Each cycle of reading and acting presents an opportunity for hidden prompts to infiltrate the process. Malicious instructions can be embedded in web pages, code comments, user-generated content, or even file metadata, waiting to be parsed as legitimate guidance. If an agent misinterprets such content, it may execute unauthorized commands, compromising the integrity of the system. This iterative nature of agent operation necessitates continuous monitoring to catch and neutralize threats at every stage of interaction.

Long-running sessions exacerbate these risks by increasing the volume of content an agent processes over time, and the more data it reads, the higher the likelihood of encountering hostile instructions that accumulate as stacked rules. This prolonged exposure can lead to unexpected behaviors, especially if the agent lacks mechanisms to reset or filter out suspicious inputs. Vulnerable points are not limited to external content; internal data sources, if not properly secured, can also harbor threats. Security measures must therefore focus on scrutinizing every input, regardless of origin, and limiting session durations to minimize risk accumulation. By addressing these operational weak spots, organizations can better protect their systems from the subtle but pervasive dangers posed by AI agent interactions.

10. Navigating Compliance Pitfalls

AI agents are celebrated for their ability to streamline workflows by minimizing manual intervention, reducing clicks, and accelerating processes. However, this efficiency can inadvertently bypass essential human judgment, leading to compliance violations. For instance, an agent exporting a customer list without proper authorization might violate data protection policies or regulatory standards, exposing the organization to legal and financial penalties. The speed and autonomy that make agents appealing can thus become liabilities if not paired with oversight mechanisms. Balancing the drive for operational efficiency with adherence to compliance requirements is a pressing challenge, as unchecked automation risks undermining established protocols designed to safeguard sensitive information and operations.

The potential for non-compliance is particularly concerning in industries subject to strict regulations, such as finance or healthcare, where even minor oversights can have significant repercussions. An agent acting without approval might inadvertently share protected data or execute transactions that violate internal policies. To mitigate this, organizations must integrate approval checkpoints into agent workflows, ensuring that critical actions are reviewed by authorized personnel. Additionally, regular audits of agent activities can help identify and rectify compliance gaps before they escalate. By embedding compliance considerations into the design and deployment of AI agents, companies can harness their benefits while maintaining accountability and protecting against regulatory breaches that could damage trust and credibility.

11. Building Robust Defensive Strategies

Effective defense against AI agent vulnerabilities begins with thorough pre-deployment testing to ensure robust security measures are in place. Red team exercises that simulate hostile instructions—using hidden text, layout tricks, and multi-step lures—provide valuable insights into potential weaknesses. By documenting how agents respond to these simulated attacks, teams can adjust rules to block unauthorized actions. Vendor guidelines often support these efforts, offering frameworks for testing and mitigation. Additionally, protected execution tools verify the origin of commands, pausing actions from untrusted sources for review, while allowlists for sensitive tasks and real-time user prompts for financial or data movements add further layers of security. These combined approaches ensure that risks are identified and addressed before agents are fully integrated into critical systems.

Human oversight remains a cornerstone of defense, mandating approval for actions affecting finances, identity, or access rights, and ensuring that users must review and confirm or deny proposed actions to preserve efficiency while minimizing the risk of unexpected instructions. Limiting agent scope is equally critical; rather than relying on a single all-powerful agent, deploying multiple agents with restricted permissions reduces the impact of a single breach. Each agent should have only the access necessary for its specific tasks, preventing widespread exposure. These strategies collectively form a multi-faceted defense, combining proactive testing, real-time validation, and scoped permissions to safeguard against the evolving threats posed by AI agent misuse. Continuous refinement of these measures is essential to adapt to new attack vectors.

12. Implementing a Comprehensive Framework

A practical framework for securing AI agents encompasses people, processes, and technology, providing a comprehensive approach to safeguarding these systems from potential vulnerabilities and ensuring their safe integration into workflows. On the people front, staff training on prompt injection risks is vital, using concise, visual examples to illustrate how hidden text can manipulate agents. Designating an agent overseer for each use case ensures accountability, with responsibilities including log monitoring and approving critical actions. Encouraging a “pause and question” culture among users promotes vigilance, prompting them to seek a second opinion on risky agent suggestions. These human-centric measures foster a security-conscious environment, empowering employees to act as the first line of defense against potential threats while maintaining the operational advantages of automation in their daily tasks.

Process and technology components further strengthen this framework by providing essential safeguards and structure for secure operations. Approval checkpoints for payments, data exports, account changes, and third-party access must be role-specific and time-bound, while agent manuals should outline permitted domains, file types, and actions requiring human review. Session duration limits prevent lingering malicious instructions by resetting context after short runs. Technologically, tools to block untrusted command sources, alerts for sensitive site interactions, segmented agent responsibilities with minimal permissions, and detailed action logs reviewed weekly are essential. This triad of people, process, and technology creates a robust structure to mitigate risks, ensuring that AI agents operate within secure boundaries while supporting organizational goals.

13. Taking Immediate Action for Security

Organizations must act swiftly to secure AI agent deployments by starting with a comprehensive inventory of all agents and their access scopes, ensuring a thorough understanding of potential risks. Restricting permissions to the bare minimum necessary for each task reduces potential exposure. Human validation for high-risk actions, such as financial transactions, access modifications, and data transfers, is non-negotiable to prevent unauthorized operations. Leveraging vendor-provided controls to monitor instruction origins and flag interactions with sensitive sites adds an additional safety net. These immediate steps lay the groundwork for a secure environment, addressing the most pressing vulnerabilities while maintaining the functionality that makes AI agents valuable tools in modern workflows.

Regular testing is another critical action to sustain security over time, especially in the rapidly evolving landscape of cybersecurity threats. Conducting quarterly red team exercises with realistic prompts and hidden text helps identify emerging dangers and refine defenses accordingly. This iterative approach ensures that safeguards remain effective against new tactics employed by attackers. By combining inventory assessments, mandatory approvals, vendor tool utilization, and consistent testing, organizations can proactively manage risks associated with AI agents. These actions not only mitigate current threats but also build resilience against future challenges, fostering a culture of continuous improvement in cybersecurity practices tailored to the unique dynamics of automated systems.

14. Distinguishing Facts from Analytical Insights

The factual landscape surrounding AI agent risks is clear: these tools are susceptible to hijacking through prompt injection, which can occur via real-time prompts or content accessed online. Vendors are actively developing solutions to detect malicious commands and enforce supervision for critical actions, while experts consistently recommend human oversight and restricted agent capabilities to curb misuse. These established points provide a foundation for understanding the immediate challenges posed by AI agents in cybersecurity contexts. They highlight the tangible vulnerabilities that organizations must address to protect their systems, underscoring the urgency of implementing safeguards that align with current technological realities and expert guidance.

Analytically, the most significant shift is social rather than technical—AI agents lower the skill threshold for misuse, expanding the pool of potential attackers from expert hackers to opportunistic actors. This democratization of threat necessitates a defense strategy focused on limiting agent powers, integrating human checks, and meticulously tracking all actions to contain potential damage. Such measures preserve the benefits of automation while minimizing the blast radius of a breach. This perspective shifts the focus toward proactive cultural and procedural changes, recognizing that technology alone cannot fully address the evolving nature of cyber risks in an era of accessible automation tools.

15. Acknowledging Limitations and Future Challenges

Cybersecurity threats are a growing concern in today’s digital landscape, where hackers and malicious entities continuously target sensitive data and critical infrastructure. As technology advances, the sophistication of cyber attacks increases, posing significant risks to individuals, businesses, and governments alike. It is crucial to implement robust security measures, such as strong passwords, encryption, and regular software updates, to protect against these dangers. Staying informed about the latest threats and adopting best practices can help mitigate the potential damage caused by cybercriminal activities.