The rapid adoption of generative artificial intelligence (AI) across diverse industries such as healthcare, technology, and financial services has brought significant concerns to light. One pressing issue is the discrepancy in risk management among companies that are racing to implement AI solutions. A recent PwC survey found that only 58% of executives have conducted preliminary evaluations of AI-related risks, underscoring a potentially dangerous oversight.

The Urgency for Comprehensive AI Risk Evaluations

Functional Focus at the Expense of Risk Management

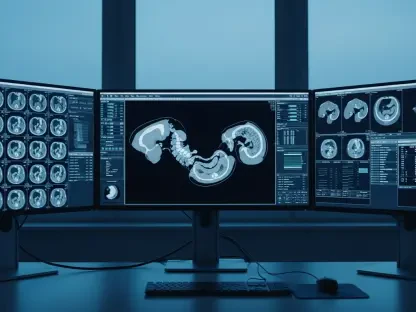

A report based on a survey of 1,001 executives conducted in April found that 73% of these leaders either already use AI or plan to implement it. Despite this high adoption rate, many organizations appear to concentrate solely on functional applications and, in doing so, risk overlooking critical hazards that could affect their operations. PwC also warns that businesses that delay comprehensive AI integration may struggle to stay competitive as the technology landscape continues to evolve.

In July 2023, the World Economic Forum (WEF) echoed these concerns with research showing that three out of four Chief Risk Officers (CROs) see AI as a threat to corporate reputations. Some 90% of these officers support stricter regulation of AI development and deployment, and nearly half of the WEF survey respondents favor pausing AI advancements until the potential risks are fully understood. These findings point to widespread recognition that risk evaluation practices need to be more robust.

The ‘Opaque’ Nature of AI Algorithms

One of the primary concerns about AI is the “opaque” nature of its algorithms, which can produce flawed decisions and lead to unintended data sharing. Because organizations may struggle to understand how an AI system arrives at a given conclusion, risk management becomes harder, and thorough oversight is needed across all stages of AI development and application. Without such measures, businesses may unknowingly expose themselves to severe vulnerabilities.

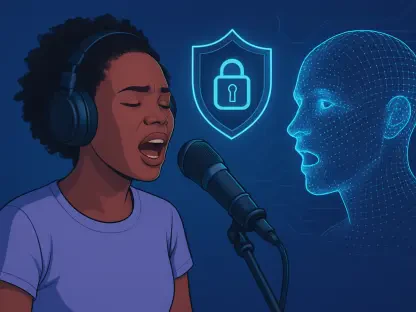

Furthermore, AI’s capability to generate synthetic or fake content is seen as a significant future threat. The potential for AI to produce misleading information, disrupt economies, and even create social divisions cannot be ignored. This adds another layer of complexity to the already intricate risk landscape, emphasizing the need for continuous commitment to managing AI-related risks effectively.

Striking a Balance Between Innovation and Safety

The Imperative of Integrating Risk Oversight

PwC underscores that managing AI risks requires an ongoing commitment to integrating risk oversight throughout the development and application stages. This involves not just initial evaluations but continuous monitoring and updating of risk management frameworks as AI technologies evolve. By institutionalizing a culture of risk awareness and management, enterprises can better prepare themselves to navigate the uncertainties brought about by AI innovations.

Such diligence is particularly crucial as organizations strive to balance the drive for innovation with the need to mitigate associated risks. The pressure to harness the capabilities of AI while ensuring security is mounting, compelling businesses to adopt more stringent risk management practices. This equilibrium will be essential for companies aiming to remain competitive while safeguarding their operations and reputations.

Future Projections and Current Practices

The PwC finding that only 58% of executives have conducted preliminary evaluations of AI-related risks points to a potentially hazardous gap in oversight, one that persists even as generative AI spreads across sectors such as healthcare, technology, and financial services and companies eager to deploy it take inconsistent approaches to risk management.

Generative AI, which can create new content, offers vast benefits but also introduces unique challenges. These challenges include data privacy issues, ethical considerations, and the potential for misuse. As firms rush to harness AI’s capabilities, many overlook the importance of a rigorous risk assessment process. This neglect could lead to unintended consequences, such as security breaches or flawed decision-making processes due to AI errors.

Additionally, the regulatory landscape for AI is still evolving, adding another layer of complexity. Companies must navigate these uncertainties while ensuring that their AI systems are robust, ethical, and secure. The PwC survey serves as a wake-up call, emphasizing the need for comprehensive risk management strategies as AI continues to reshape industries.