The advent of quantum computing poses a significant threat to current encryption protocols, prompting the National Institute of Standards and Technology (NIST) to develop new post-quantum cryptography (PQC) standards. These standards aim to protect sensitive information from the potential capabilities of quantum computers. As institutions worldwide prepare for this quantum future, the question arises: Are NIST’s new encryption standards truly ready for quantum computing?

The Quantum Threat Landscape

Understanding Quantum Computing

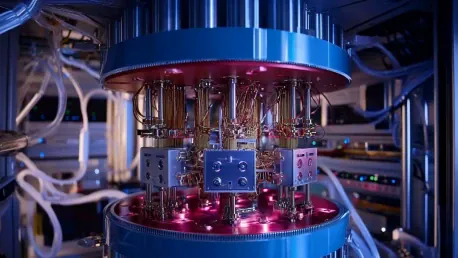

Quantum computing represents a paradigm shift in computation, using the principles of quantum mechanics to attack certain problems that are intractable for classical computers. Classical computers process bits that are always either 0 or 1; quantum computers use qubits, which can exist in a superposition of both states. A register of n qubits is described by 2^n amplitudes, but measuring it yields only a single outcome, so quantum algorithms gain their advantage by arranging for interference to amplify correct answers rather than by simply trying every possibility in parallel. For specific problems, such as factoring, this yields dramatic speedups over the best known classical methods; for many others, it offers little or no known advantage.

Quantum entanglement, another cornerstone of quantum mechanics, further extends these capabilities. When qubits are entangled, their measurement outcomes are correlated in ways that no classical system can reproduce, regardless of the distance between them, although these correlations cannot be used to send signals faster than light. Together, superposition, interference, and entanglement let quantum algorithms tackle certain structured problems, including some at the heart of cryptography. The same properties that empower quantum computing therefore pose a severe threat to cryptographic systems designed under classical computational assumptions.
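To make superposition and entanglement concrete, here is a minimal state-vector sketch in Python (a language choice of ours; the article itself contains no code). It stores all 2^n amplitudes explicitly, which is also why classical simulation becomes infeasible as n grows. The helper names `apply_hadamard`, `apply_cnot`, and `measure` are hypothetical and belong to no quantum SDK; qubit 0 is assumed to be the least significant bit of the basis-state index.

```python
import math
import random

def apply_hadamard(state, qubit, n):
    """Apply a Hadamard gate to `qubit` of an n-qubit state vector."""
    h = 1 / math.sqrt(2)
    new = list(state)
    for i in range(2 ** n):
        if not (i >> qubit) & 1:          # visit each (bit=0, bit=1) pair once
            j = i | (1 << qubit)
            new[i] = h * (state[i] + state[j])
            new[j] = h * (state[i] - state[j])
    return new

def apply_cnot(state, control, target, n):
    """Flip `target` in every basis state where `control` is 1."""
    new = list(state)
    for i in range(2 ** n):
        if (i >> control) & 1 and not (i >> target) & 1:
            j = i | (1 << target)
            new[i], new[j] = state[j], state[i]
    return new

def measure(state, rng):
    """Sample one basis-state index with probability |amplitude|^2."""
    probs = [abs(a) ** 2 for a in state]
    return rng.choices(range(len(state)), weights=probs)[0]

# Prepare the Bell state (|00> + |11>)/sqrt(2): Hadamard, then CNOT.
n = 2
bell = [1.0, 0.0, 0.0, 0.0]               # start in |00>
bell = apply_hadamard(bell, 0, n)
bell = apply_cnot(bell, 0, 1, n)

rng = random.Random(7)
outcomes = {format(measure(bell, rng), "02b") for _ in range(1000)}
print(bell)       # amplitudes of |00>, |01>, |10>, |11>
print(outcomes)   # only '00' and '11' ever appear
```

Each individual outcome is random, yet the two bits always agree; that correlation, not any message between the qubits, is what entanglement provides.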

Shor’s Algorithm and Its Implications

In 1994, mathematician Peter Shor introduced a quantum algorithm that factors large integers and computes discrete logarithms in polynomial time, superpolynomially faster than the best-known classical algorithms. This breakthrough exposed the vulnerability of widely used public-key systems: RSA relies on the difficulty of factoring large numbers, while elliptic-curve cryptography (ECC) relies on the difficulty of the elliptic-curve discrete logarithm problem, and Shor’s algorithm solves both. The prospect of quantum computers capable of running Shor’s algorithm made the development of quantum-resistant encryption methods necessary.
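The dependence of RSA on factoring is visible in a textbook-sized example. The Python sketch below uses the classic toy primes 61 and 53 and omits padding, so it is insecure and purely illustrative; `make_keypair` and `recover_private_exponent` are hypothetical helper names. The point is that the private exponent is computed from the prime factors, so anyone who can factor the modulus can recompute it.

```python
def make_keypair(p, q, e=17):
    """Textbook RSA key generation from two primes (no padding, toy sizes)."""
    n = p * q
    phi = (p - 1) * (q - 1)      # computable only by someone who knows p and q
    d = pow(e, -1, phi)          # modular inverse (Python 3.8+)
    return (n, e), d

def recover_private_exponent(n, e, factor):
    """What an attacker can do after learning one prime factor of n."""
    p, q = factor, n // factor
    return pow(e, -1, (p - 1) * (q - 1))

(n, e), d = make_keypair(61, 53)
message = 65
ciphertext = pow(message, e, n)              # encrypt with the public key
assert pow(ciphertext, d, n) == message      # the key owner decrypts with d

d_attacker = recover_private_exponent(n, e, 53)  # factor found by the attacker
print(n, d, ciphertext, pow(ciphertext, d_attacker, n))  # 3233 2753 2790 65
```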

Shor’s algorithm disrupts these schemes by reducing factoring to the problem of finding the period (order) of modular exponentiation, a task a quantum computer can perform efficiently using the quantum Fourier transform. RSA, widely used to secure communications, e-commerce, and digital signatures, relies on the hardness of factoring large composite numbers. A sufficiently powerful quantum computer running Shor’s algorithm could factor these numbers, recover RSA private keys, and expose previously secure communications. As large-scale quantum computers move closer to feasibility, the need for post-quantum cryptographic solutions becomes more pressing, requiring prompt action from institutions across the globe.
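The classical part of Shor’s algorithm can be sketched directly. In the Python below, `find_order` stands in for the quantum step by brute force, which takes exponential time classically; on a quantum computer this step is done efficiently by period finding. The function names are hypothetical, the sketch assumes n is odd with two distinct prime factors, and it scans bases a = 2, 3, ... deterministically for reproducibility, whereas Shor’s algorithm picks a at random.

```python
import math

def find_order(a, n):
    """Smallest r > 0 with a**r == 1 (mod n); requires gcd(a, n) == 1.
    Brute force here: this is the part a quantum computer speeds up."""
    r, x = 1, a % n
    while x != 1:
        x = x * a % n
        r += 1
    return r

def shor_factor(n):
    """Classical skeleton of Shor's algorithm: reduce factoring to order finding."""
    for a in range(2, n):
        g = math.gcd(a, n)
        if g > 1:
            return g, n // g          # a already shares a factor with n
        r = find_order(a, n)
        if r % 2 == 0:
            y = pow(a, r // 2, n)     # y*y == 1 (mod n) and y != 1 by minimality of r
            if y != n - 1:            # a root of 1 other than +-1 exposes a factor
                p = math.gcd(y - 1, n)
                return p, n // p
    return None

print(shor_factor(15))    # (3, 5)
print(shor_factor(3233))  # the toy RSA modulus 61 * 53
```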

NIST’s Post-Quantum Cryptography Standards

The Development Process

NIST’s effort to establish PQC standards began with a global call for proposals in 2016, inviting cryptographers to submit algorithms resistant to quantum attacks. Candidates then passed through multiple rounds of public scrutiny and testing before the final algorithms were selected. Academia, industry, and government agencies all contributed, and the breadth of participation reflects both the global recognition of the quantum threat and the collective effort required to develop robust solutions.

In the first round, dozens of proposals were evaluated on their security, performance, and implementation characteristics, with extensive cryptanalysis aimed at uncovering weaknesses. Over several years, the field was narrowed through successive rounds, each more demanding than the last. NIST announced its first selections in 2022, and in August 2024 it published the first three standards: FIPS 203 (ML-KEM), FIPS 204 (ML-DSA), and FIPS 205 (SLH-DSA). These algorithms, designed to resist attacks by quantum as well as classical computers and refined through extensive peer review, are expected to form the foundation of future cryptographic practice.

Lattice-Based Algorithms

Among the selected algorithms, lattice-based cryptography stands out because it is widely believed to resist quantum attacks. ML-KEM (a key-encapsulation mechanism derived from CRYSTALS-Kyber) and ML-DSA (a digital signature scheme derived from CRYSTALS-Dilithium) both build on lattice problems that have withstood decades of cryptanalysis. Their security rests on the difficulty of solving certain problems in high-dimensional lattices, which are believed to be extremely hard even for quantum computers.
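Low-dimensional lattices are easy; the hardness assumption concerns high dimensions. As an illustration, the Lagrange-Gauss algorithm sketched below in Python (helper names are hypothetical) finds a shortest nonzero vector of any two-dimensional lattice in a handful of steps. No comparably efficient method is known at the sizes used in practice: ML-KEM’s parameter sets work with module lattices of dimension 512 to 1024, where the best known classical and quantum algorithms for short-vector problems run in time exponential in the dimension.

```python
from fractions import Fraction

def dot(u, v):
    return u[0] * v[0] + u[1] * v[1]

def gauss_reduce(b1, b2):
    """Lagrange-Gauss reduction of a 2-D lattice basis (b1, b2).

    Returns a basis of the same lattice whose first vector is a shortest
    nonzero lattice vector."""
    if dot(b1, b1) > dot(b2, b2):
        b1, b2 = b2, b1
    while True:
        # Subtract the integer multiple of b1 that makes b2 as short as possible.
        mu = round(Fraction(dot(b1, b2), dot(b1, b1)))
        b2 = (b2[0] - mu * b1[0], b2[1] - mu * b1[1])
        if dot(b2, b2) >= dot(b1, b1):
            return b1, b2
        b1, b2 = b2, b1

# A deliberately skewed basis of the integer lattice Z^2 (determinant 1).
short1, short2 = gauss_reduce((5, 8), (3, 5))
print(short1, short2)  # (0, -1) (-1, 0): unit vectors, the shortest possible
```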

The lattice problems behind NIST’s standards are structured variants of Learning With Errors (LWE) and related problems. LWE asks an attacker to recover a secret vector from noisy linear equations modulo q, a task closely related to finding short vectors in high-dimensional lattices. The holder of the private key can strip away the noise easily, but no known classical or quantum algorithm solves these problems efficiently at the parameter sizes used, so an attacker would face prohibitive computational costs. Lattice-based schemes therefore offer a plausible path toward quantum-resistant security, which is driving momentum toward their global adoption.
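A toy version of Regev’s encryption scheme based on Learning With Errors (LWE) shows how the private key removes the noise while an attacker sees only noisy linear equations modulo q. This is plain LWE encrypting a single bit, not ML-KEM, which uses structured module lattices, compression, and a transform for chosen-ciphertext security. The parameters are illustrative assumptions and far too small to be secure; the modulus 3329 is borrowed from ML-KEM only for familiarity. Python’s `random` module is not a secure randomness source and is used here only so the example is reproducible.

```python
import random

# Toy parameters: illustrative assumptions, far too small to be secure.
N_DIM = 16       # length of the secret vector s
M_SAMPLES = 64   # number of noisy equations in the public key
Q = 3329         # modulus (borrowed from ML-KEM only for familiarity)
ETA = 2          # each error term is drawn uniformly from [-ETA, ETA]

def inner(u, v):
    return sum(a * b for a, b in zip(u, v))

def keygen(rng):
    """Public key (A, b = A*s + e mod Q); private key s."""
    s = [rng.randrange(Q) for _ in range(N_DIM)]
    A = [[rng.randrange(Q) for _ in range(N_DIM)] for _ in range(M_SAMPLES)]
    e = [rng.randint(-ETA, ETA) for _ in range(M_SAMPLES)]
    b = [(inner(row, s) + err) % Q for row, err in zip(A, e)]
    return (A, b), s

def encrypt(pk, bit, rng):
    """Sum a random subset of the public equations and hide the bit at Q/2."""
    A, b = pk
    r = [rng.randint(0, 1) for _ in range(M_SAMPLES)]
    u = [sum(r_i * row[j] for r_i, row in zip(r, A)) % Q for j in range(N_DIM)]
    v = (inner(r, b) + bit * (Q // 2)) % Q
    return u, v

def decrypt(s, ciphertext):
    """v - <u, s> = <r, e> + bit*(Q//2) mod Q, and <r, e> is small."""
    u, v = ciphertext
    d = (v - inner(u, s)) % Q
    return 1 if Q // 4 < d < 3 * Q // 4 else 0   # closer to Q/2 than to 0?

rng = random.Random(2024)
public_key, secret_key = keygen(rng)
bits = [1, 0, 1, 1, 0]
recovered = [decrypt(secret_key, encrypt(public_key, bit, rng)) for bit in bits]
print(recovered == bits)  # True
```

Decryption always succeeds here because the accumulated noise is at most 64 * 2 = 128, well inside the Q/4 (about 832) decoding margin; real schemes tune parameters so that decryption failures are negligibly rare while the noise still hides the secret.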

Security Assumptions and Proofs

Proof by Reduction

The security of NIST’s PQC standards is supported by theoretical arguments known as proofs by reduction. Such a proof shows that breaking a PQC algorithm is at least as hard as solving an underlying problem, such as a lattice problem, that is believed to be hard. Concretely, it shows that any efficient algorithm that compromises the cryptographic scheme can be transformed into an efficient algorithm that solves a recognized hard problem. These proofs are central to establishing confidence in the new standards.

A proof by reduction therefore gives a formal, structured assurance: if the underlying mathematical problem (e.g., a lattice problem) is computationally hard for quantum adversaries, then so is breaking the scheme built on it. If an attacker devised a method to break the encryption, that same method could be repurposed to solve the original lattice problem, which is currently deemed intractable. The guarantee is only as strong as the hardness assumption and the model in which the proof is carried out, but this chain of reasoning underpins confidence in lattice-based PQC algorithms and encourages their adoption in sectors handling critical and sensitive information.
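The mechanics of a reduction can be shown with a classic small example that is not lattice-based: Rabin’s argument that computing square roots modulo n = pq is at least as hard as factoring n. In the Python sketch below, `sqrt_oracle` is a hypothetical stand-in for an attacker (brute force, so it only works for tiny n), and `factor_with_oracle` is the reduction that turns any such attacker into a factoring algorithm. Reductions for lattice-based schemes follow the same pattern but are considerably more involved.

```python
import math
import random

def sqrt_oracle(a, n):
    """Hypothetical attacker: returns some square root of a modulo n.
    Brute force stands in for an efficient attack and only works for tiny n."""
    for y in range(n):
        if y * y % n == a:
            return y
    return None

def factor_with_oracle(n, oracle, rng, max_tries=100):
    """Reduction: turn any square-root algorithm for n = p*q into a factoring algorithm."""
    for _ in range(max_tries):
        x = rng.randrange(1, n)
        g = math.gcd(x, n)
        if g > 1:
            return g, n // g              # x happens to share a factor with n
        y = oracle(x * x % n, n)          # the oracle sees x^2 mod n, never x
        # x^2 and y^2 agree mod n; half the time y is not +x or -x, and then
        # n divides (x - y)(x + y) without dividing either factor.
        if y not in (x, n - x):
            p = math.gcd(x - y, n)
            return p, n // p
    return None

print(factor_with_oracle(3233, sqrt_oracle, random.Random(1)))
```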

The Role of Hash Functions

Hash functions play a crucial role in many cryptographic schemes, including PQC algorithms, whose security proofs rely on collision-resistant hash functions and often on the random oracle model (ROM). Collision resistance means that, although collisions must exist because a hash maps arbitrarily long inputs to fixed-length outputs, it is computationally infeasible to find two different inputs that produce the same output; this property is integral to data integrity and security. The ROM goes further and treats the hash function as a truly random function. Practical hash functions like SHA3-256 are deterministic and do not produce truly random outputs, but the ROM provides a useful heuristic for designing security proofs.
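Because collisions always exist, collision resistance is really a statement about the cost of finding them: for an ideal n-bit hash, a generic birthday search needs roughly 2^(n/2) evaluations. The Python sketch below uses the standard-library `hashlib.sha3_256` but truncates its output to an assumed 24 bits so that a collision appears after a few thousand tries; the function names are hypothetical. The full 256-bit output would push the same search to around 2^128 evaluations.

```python
import hashlib
from itertools import count

def truncated_sha3(data: bytes, bits: int) -> int:
    """First `bits` bits of SHA3-256(data), as an integer."""
    digest = hashlib.sha3_256(data).digest()
    return int.from_bytes(digest, "big") >> (256 - bits)

def birthday_collision(bits: int):
    """Hash distinct messages until two share a truncated digest."""
    seen = {}
    for i in count():
        msg = str(i).encode()
        h = truncated_sha3(msg, bits)
        if h in seen:
            return seen[h], msg, i + 1    # two different inputs, same output
        seen[h] = msg

m1, m2, tries = birthday_collision(24)
print(m1, m2, tries)   # tries is typically on the order of 2**12 for 24 bits
```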

In the context of post-quantum cryptography, the strength of the hash functions and the soundness of the models used to analyze them are paramount. The ROM assumes that hash outputs behave like those of a random function, even though real hash functions only approximate this behavior. Despite this idealization, the ROM has remained a valuable framework for proving the security of cryptographic schemes. Critics often note its limitations; in particular, classical ROM proofs assume that the adversary queries the hash function on one classical input at a time, an assumption that does not hold for quantum attackers. Even so, the ROM has contributed significantly to understanding and strengthening PQC standards, and its shortcomings have motivated research into models suited to quantum adversaries.
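In ROM proofs, the random oracle is usually simulated by lazy sampling: each new input receives a fresh random output, stored so that repeated queries are answered consistently. The class below is a minimal Python sketch of that bookkeeping; the name `LazyRandomOracle` and its `program` method are hypothetical. Because the simulator sees and controls every entry in the table, a reduction can observe the adversary’s queries or fix chosen answers in advance, and this is precisely the step that breaks down when a quantum adversary queries in superposition.

```python
import os

class LazyRandomOracle:
    """Simulated random oracle: fresh uniform output per new input,
    the same output for repeated inputs."""

    def __init__(self, out_len=32):
        self.out_len = out_len
        self.table = {}          # every input the adversary has queried so far

    def query(self, x: bytes) -> bytes:
        if x not in self.table:
            self.table[x] = os.urandom(self.out_len)
        return self.table[x]

    def program(self, x: bytes, y: bytes) -> None:
        """Fix H(x) = y in advance, as some reductions do."""
        if x in self.table:
            raise ValueError("input already queried; cannot reprogram")
        self.table[x] = y

oracle = LazyRandomOracle()
assert oracle.query(b"hello") == oracle.query(b"hello")   # consistent answers
oracle.program(b"target", bytes(32))
print(oracle.query(b"target").hex())   # 64 zeros: the programmed value
print(sorted(oracle.table))            # the queries the reduction observed
```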

Addressing Quantum Adversaries

Quantum Random Oracle Model (QROM)

To better address quantum threats, researchers developed the Quantum Random Oracle Model (QROM). Unlike the conventional ROM, the QROM allows the adversary to query the random oracle in superposition, reflecting the fact that a quantum attacker who knows a real hash function’s code can evaluate it on a quantum computer. This enables attacks with no classical counterpart; for example, Grover’s algorithm finds a preimage of an n-bit hash with roughly 2^(n/2) queries instead of about 2^n. Although the QROM is a theoretical model, it provides a more realistic basis for assessing the security of PQC algorithms against quantum adversaries.
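Grover’s algorithm is the standard example of why superposition access to a hash function matters. The pure-Python sketch below simulates it (the function name is hypothetical; this is a classical simulation, not a quantum program) over N = 2^k inputs with one marked preimage. Each iteration makes one oracle query, a sign flip on the marked amplitude, followed by a reflection about the mean; after about (π/4)√N queries the marked input is found with high probability, compared with about N/2 queries on average for classical search.

```python
import math

def grover_search(num_qubits, marked):
    """Simulate Grover's algorithm over N = 2**num_qubits inputs.

    Returns (number of oracle queries, probability of measuring `marked`)."""
    n = 2 ** num_qubits
    amps = [1 / math.sqrt(n)] * n                 # uniform superposition
    queries = math.floor(math.pi / 4 * math.sqrt(n))
    for _ in range(queries):
        amps[marked] = -amps[marked]              # oracle: phase-flip the preimage
        mean = sum(amps) / n
        amps = [2 * mean - a for a in amps]       # diffusion: reflect about the mean
    return queries, amps[marked] ** 2

for k in (6, 10):
    queries, p = grover_search(k, marked=3)
    print(f"N = {2 ** k}: {queries} queries, success probability {p:.3f}")
```

The speedup is quadratic rather than exponential, which is why doubling a hash or key length is generally regarded as sufficient to offset it.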

The QROM thus models the interactions between cryptographic protocols and quantum adversaries more precisely than the classical ROM. Security proofs in the QROM are generally harder to obtain, but a proof in this model gives stronger evidence that a scheme resists quantum attackers. By exposing gaps in classical-model arguments, QROM analysis guides cryptographers in designing and refining algorithms, helping the transition to post-quantum cryptography keep pace with technological advances and supporting the long-term trustworthiness of encryption protocols.

Enhancements and Ongoing Research

Post-quantum cryptography continues to evolve as researchers search for potential weaknesses in lattice-based algorithms and explore alternative cryptographic methods to bolster security. This work underscores the importance of vigilance and adaptability: PQC standards can remain robust only through continuous scrutiny and refinement as quantum capabilities advance.

Current research initiatives include hybrid cryptographic schemes that combine traditional and quantum-resistant algorithms, typically designed so that a connection stays secure as long as either component remains unbroken; these provide a transitional framework while quantum technologies, and confidence in the new algorithms, mature. Researchers are also exploring cryptographic foundations beyond lattices, such as code-based, multivariate, and hash-based methods (NIST has already standardized one hash-based signature scheme, SLH-DSA, in FIPS 205). This diversification mitigates the risk of relying on any single mathematical approach, and together these efforts strengthen the security infrastructure through proactive, adaptive measures.
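One common way to build a hybrid scheme is to run a classical key exchange and a post-quantum KEM side by side and feed both shared secrets into a key-derivation function, so an attacker must break both to learn the session key. The Python sketch below implements HKDF-SHA256 as specified in RFC 5869 using only `hmac` and `hashlib`. The two input secrets are random placeholders standing in for, say, an X25519 result and an ML-KEM result, and `combine_hybrid` and its label are hypothetical; deployed protocols define their own exact concatenation order and labels.

```python
import hashlib
import hmac
import os

def hkdf_sha256(ikm: bytes, salt: bytes, info: bytes, length: int = 32) -> bytes:
    """HKDF with SHA-256 (RFC 5869): extract a pseudorandom key, then expand."""
    prk = hmac.new(salt or bytes(32), ikm, hashlib.sha256).digest()  # extract
    okm, block = b"", b""
    for counter in range(1, -(-length // 32) + 1):   # ceil(length / 32) blocks
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
    return okm[:length]

def combine_hybrid(classical_secret: bytes, pq_secret: bytes, context: bytes) -> bytes:
    """Derive one session key that depends on both shared secrets."""
    return hkdf_sha256(classical_secret + pq_secret, salt=b"",
                       info=b"hybrid-demo v1|" + context)

# Placeholders: in a real protocol these come from the two key exchanges.
classical_secret = os.urandom(32)
pq_secret = os.urandom(32)
session_key = combine_hybrid(classical_secret, pq_secret, b"handshake transcript hash")
print(session_key.hex())
```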

Practical Implications for Cybersecurity

Adoption of PQC Standards

Institutions handling highly sensitive information are encouraged to adopt NIST’s PQC standards as a proactive measure against quantum threats. Doing so raises practical implementation considerations and potential challenges, but early adoption also brings clear benefits. A transition to PQC typically proceeds in stages: evaluating existing systems, integrating the new algorithms, and testing thoroughly to confirm performance and reliability.

Organizations should first inventory their current cryptographic practices, identify vulnerable systems, and prioritize those requiring immediate post-quantum upgrades. Working with cryptographic experts and following recommended protocols make the transition more effective. Challenges include managing compatibility issues, training personnel in new cryptographic techniques, and ensuring that deployed PQC mechanisms satisfy sector-specific regulatory requirements. Early adoption builds resilience against quantum threats, safeguarding critical data and maintaining stakeholder trust.
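A first inventory pass can be scripted. The Python sketch below applies Mosca’s widely cited rule of thumb: if the number of years data must stay confidential (x) plus the years a migration will take (y) exceeds the years until a cryptographically relevant quantum computer exists (z), that system is already at risk. The asset records, the set of algorithm labels, and especially the value of z are hypothetical inputs for illustration, not forecasts or recommendations.

```python
from dataclasses import dataclass

# Public-key algorithm families that Shor's algorithm breaks (not exhaustive).
QUANTUM_VULNERABLE = {"RSA", "DSA", "DH", "ECDH", "ECDSA", "EdDSA"}

@dataclass
class Asset:
    name: str
    algorithm: str
    shelf_life_years: float   # x: how long the protected data must stay secret
    migration_years: float    # y: estimated time to move this system to PQC

def prioritize(assets, years_to_crqc):
    """Return quantum-vulnerable assets with x + y > z, most urgent first.

    years_to_crqc (z) is an assumption supplied by the caller, not a forecast."""
    at_risk = [
        a for a in assets
        if a.algorithm in QUANTUM_VULNERABLE
        and a.shelf_life_years + a.migration_years > years_to_crqc
    ]
    # A larger overshoot of x + y beyond z means data stays exposed for longer.
    return sorted(at_risk,
                  key=lambda a: a.shelf_life_years + a.migration_years - years_to_crqc,
                  reverse=True)

inventory = [
    Asset("site-to-site VPN", "ECDH", 10, 3),
    Asset("public web TLS", "RSA", 1, 2),
    Asset("encrypted backups", "AES-256", 30, 1),
    Asset("health records archive", "RSA", 25, 5),
]
for asset in prioritize(inventory, years_to_crqc=10):
    print(asset.name)   # health records archive, then site-to-site VPN
```

Symmetric ciphers such as AES-256 fall outside this check because quantum attacks on them rely on Grover’s quadratic speedup rather than Shor’s algorithm.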

Confidence Amid Uncertainty

While the cryptographic community has considerable confidence in the hardness of lattice-based problems, there is no proof that these problems will remain out of reach of future quantum (or classical) algorithms. Balancing this confidence against the remaining uncertainty calls for ongoing research and preparedness. Because the full potential of quantum computing is still unknown, a cautious yet proactive approach that continually strengthens cryptographic defenses is necessary.

Repeated assessments and iterative improvements help close gaps in existing knowledge and keep cryptographic systems resilient. Cross-disciplinary collaboration and a culture of knowledge sharing between academia and industry are key to addressing these uncertainties. As quantum computing evolves, so too must the cryptographic frameworks that underpin secure communications. By balancing confidence with vigilance, the cybersecurity community can navigate the unpredictable quantum landscape and maintain robust defenses against future digital threats.

Conclusion

Quantum computing could compromise today’s public-key encryption, which is why the National Institute of Standards and Technology (NIST) has developed new post-quantum cryptography (PQC) standards to protect sensitive data against the anticipated power of quantum computers. Are these standards genuinely ready for the quantum era? Their foundations are strong: they emerged from years of open, international evaluation, rest on lattice and hash-based problems with no known efficient quantum attacks, and are supported by security reductions. That confidence, however, rests on hardness assumptions rather than proofs of quantum hardness. Given the rapid pace of quantum research, institutions should assess and implement these standards promptly, while ongoing scrutiny, international collaboration, and continuous testing remain essential for detecting and addressing any vulnerabilities. The readiness of these standards is essential for maintaining data integrity and security in an increasingly quantum-driven future.