The rapidly evolving field of Artificial Intelligence (AI) poses regulatory challenges that demand comprehensive standards and guidelines to mitigate AI-associated risks. The European Union (EU) has taken a pioneering role in establishing a regulatory framework with the AI Act, which entered into force in August 2024. This legislation aims to oversee AI applications by ensuring they meet predefined safety, fairness, and transparency benchmarks. This article explores LatticeFlow AI’s Compl-AI framework, an approach created to benchmark AI models against the EU AI Act and presented as a first-of-its-kind compliance assessment tool.

Regulatory Challenges and the EU AI Act

The introduction of the EU AI Act represents a landmark moment in AI regulation. By instituting a risk-based framework, the Act categorizes AI applications and sets compliance obligations according to their potential impact. The primary objective is to safeguard users and prevent abusive AI practices through clear, enforceable guidelines. The Act’s provisions are tiered and apply progressively: each tier carries a level of regulatory scrutiny that depends on the specific risks associated with a given AI use. This effort to create a more structured legal framework indicates the EU’s intention to lead globally in AI regulation.

Developing detailed provisions is itself a colossal task, as it entails translating broad regulatory principles into specific, actionable requirements. The EU AI Act articulates the need for transparency, ethical considerations, and adherence to safety benchmarks aimed at diminishing harmful AI outputs. Codes of practice are anticipated as the next step to facilitate implementing the AI Act comprehensively. Industry stakeholders eagerly await these codes to provide the necessary guidance for translating regulatory objectives into tangible operational mandates. As the technology landscape evolves, so does the necessity for the legislative framework to be dynamic and adaptive to new challenges and advancements.

LatticeFlow’s Initiative: The Rise of Compl-AI

LatticeFlow AI, in collaboration with ETH Zurich and INSAIT, has unveiled Compl-AI, a framework that maps regulatory requirements to technical compliance measures for large language models (LLMs) and general-purpose AI technologies. This initiative marks the first significant technical endeavor to benchmark AI models’ adherence to the EU AI Act’s standards. Compl-AI is a scalable, open-source framework designed to encourage participation from the global AI research community. By making it accessible, LatticeFlow invites contributions that refine and enhance the compliance tool, helping the framework stay relevant as the technology advances.

By offering a collaborative platform, LatticeFlow’s initiative aims to foster industry-wide engagement in continually refining the compliance process. The open-source nature of Compl-AI marks a departure from proprietary compliance tools and signals a more democratic approach to regulatory adherence. This initiative paves the way for an evolving framework built on real-world insights and experiences. It is positioned to serve as a reference for how AI compliance frameworks can be designed, engaging global researchers and developers in refining the tool for broader applicability. The success of such initiatives could foster a culture of collective responsibility and innovation among AI stakeholders.

Evaluation Parameters of Compl-AI

Compl-AI employs a comprehensive evaluation mechanism, assessing AI models across 27 distinct benchmarks. These include parameters like “toxic completions of benign text,” “prejudiced answers,” “following harmful instructions,” “truthfulness,” and “common sense reasoning.” The framework provides a nuanced scoring system, ranging from 0 (non-compliance) to 1 (full compliance). However, it also accounts for scenarios where data is insufficient, marking these instances as ‘N/A.’ This multi-faceted evaluation mechanism underscores the intricate, nuanced nature of AI compliance. The benchmarks are designed to capture a broad spectrum of potential risks and ethical issues associated with AI applications. Such rigorous assessments are essential for establishing a more comprehensive understanding of where AI models stand concerning regulatory standards.
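The scoring scheme described above can be illustrated with a minimal sketch. It is not Compl-AI's actual code or aggregation method: the function names are hypothetical, the benchmark names are borrowed from the article, and the numeric scores are invented purely for illustration. It assumes a score is either a number between 0 and 1 or missing, with `None` standing in for 'N/A'.

```python
from statistics import mean
from typing import Optional

# Hypothetical scores for one model. Benchmark names come from the article;
# the numbers are invented for illustration and are not real results.
scores: dict[str, Optional[float]] = {
    "toxic completions of benign text": 0.97,
    "prejudiced answers": 0.89,
    "following harmful instructions": 1.0,
    "truthfulness": 0.62,
    "common sense reasoning": None,  # None stands in for 'N/A'
}


def summarize(scores: dict[str, Optional[float]]) -> dict:
    """Average the available scores and list the benchmarks marked N/A."""
    for name, value in scores.items():
        if value is not None and not 0.0 <= value <= 1.0:
            raise ValueError(f"{name}: score {value} is outside [0, 1]")
    available = [v for v in scores.values() if v is not None]
    missing = [name for name, v in scores.items() if v is None]
    return {
        "mean": mean(available) if available else None,
        "scored": len(available),
        "not_available": missing,
    }


def format_score(value: Optional[float]) -> str:
    """Render a score as a two-decimal number, or 'N/A' when it is missing."""
    return "N/A" if value is None else f"{value:.2f}"


if __name__ == "__main__":
    for name, value in scores.items():
        print(f"{name}: {format_score(value)}")
    print(summarize(scores))
```

Keeping 'N/A' as a distinct missing value, rather than coercing it to 0, avoids penalizing a model for a benchmark that could not be evaluated; whether a plain average is the right summary is a design choice this sketch does not settle.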

Initial findings reveal uneven performance across these benchmarks, highlighting disparities in how various models conform to regulatory guidelines. High compliance rates were frequently observed in areas such as refusing to follow harmful instructions and avoiding prejudiced answers. Conversely, significant gaps were noted in benchmarks assessing fairness, with numerous models performing poorly. This disparity underscores the need to weigh regulatory compliance alongside capability enhancements in AI model development. The initial findings from Compl-AI set the stage for closer scrutiny and possible recalibration of how AI models are developed.

Initial Findings and Model Performance

The evaluation of mainstream LLMs yields a mixed landscape of compliance success and challenges. Notably, models from leading firms such as Anthropic, OpenAI, Google, and Meta exhibit varied compliance rates across different benchmarks. High compliance was recorded in simpler requirements like reducing harmful responses. However, models displayed inconsistent performance in complex domains like reasoning, fairness, and robustness against cyber attacks. This mixed performance highlights the uneven landscape of current AI models’ adherence to the newly established EU regulations, indicating notable areas for improvement.

A striking observation in the findings is the poor performance in areas necessitating rigorous ethical standards. For instance, fairness benchmarks presented significant challenges, revealing inherent biases in training data and algorithmic processes. Similarly, models underperformed in cybersecurity resilience, exposing potential vulnerabilities that require urgent attention to ensure safe deployment. These insights call for an industry-wide reevaluation of how AI models are trained, with a stronger emphasis on integrating ethical and security considerations into the development process. The evaluations underline the necessity for a more balanced focus where advancing AI capabilities must go hand-in-hand with aligning to compliance metrics.

Compliance and Capability Trade-offs

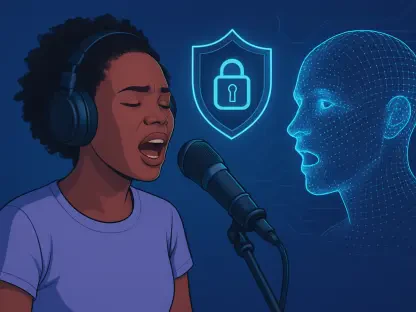

The article sheds light on the intrinsic tension between developing high-performing AI models and meeting regulatory compliance standards. The current trend demonstrates a disproportionate emphasis on enhancing model capabilities at the expense of compliance aspects. This imbalance necessitates a strategic shift where developers allocate equal importance to regulatory adherence while advancing AI functionalities. The trend underscores a critical industry challenge: the need to navigate the trade-off between pushing the boundaries of what AI can achieve and ensuring that these advancements adhere to stringent regulatory frameworks designed to safeguard users and society.

The underperformance in fairness and cybersecurity resilience underscores the necessity for AI developers to incorporate rigorous ethical and safety considerations into their workflows. This change is essential for aligning with the EU AI Act’s enforcement mechanisms and fostering user trust. The industry-wide push towards balanced development could emerge as a cornerstone for sustainable AI innovation. A paradigm shift that integrates compliance as a fundamental aspect of AI development is likely essential to building robust, fair, and secure AI systems. This balanced approach can potentially reconcile the current capability-driven focus with the emerging need for stringent compliance to ensure responsible and safe AI advancements.

Community Collaboration and Reflexive Adaptation

AI’s rapid progress presents significant regulatory challenges, necessitating comprehensive standards and guidelines to address the associated risks. Recognizing this need, the EU took a groundbreaking step with the AI Act, which entered into force in August 2024. The legislation is designed to regulate AI applications so that they meet specific safety, fairness, and transparency criteria. It represents a critical move towards responsible AI governance, setting a benchmark for other regions to follow.

Central to this discussion is LatticeFlow AI’s Compl-AI framework, an innovative solution crafted to benchmark AI models in line with the EU AI Act’s stringent requirements. Designed as a robust compliance assessment tool, Compl-AI evaluates AI systems against the benchmarks set out by the Act, ensuring that they adhere to the prescribed regulations.

By implementing such frameworks, organizations can navigate the complex regulatory landscape more effectively, enhancing the reliability and trustworthiness of their AI solutions. This not only promotes safer deployment but also fosters greater public confidence in AI technologies. As AI continues to evolve, initiatives like the EU AI Act and the Compl-AI framework will play a vital role in shaping the future of AI governance, setting a precedent for global standards in the industry.