AI tools and open-source software have each democratized coding and raised productivity, making complex coding tasks more accessible and efficient for developers of varying skill levels. That democratization has also introduced new security challenges that organizations must navigate carefully. Comparing the adoption of AI-assisted development with the earlier embrace of open-source software shows how both affect security practices and points to strategies for addressing those challenges.

The Rise of AI Tools in Software Development

AI tools have transformed software development by automating intricate coding tasks, reducing human error, and accelerating productivity. They use machine learning to help developers write, debug, and optimize code, which has driven widespread adoption: over 90% of organizations have integrated AI tools into their development processes, mirroring the adoption trend seen when open-source software first reshaped the industry. This shift has made software development more inclusive and collaborative.

Despite these efficiencies, AI tools carry significant drawbacks, particularly for security. The primary concern is that AI-generated code can introduce vulnerabilities: studies have found that developers who use AI assistants are more prone to producing code with security flaws. This risk makes rigorous security review and established best practices a necessity for AI-generated code. If it is not properly managed, the automation that promises to streamline development can inadvertently raise the risk of a security breach.

Security Challenges of AI-Generated Code

AI tools have accelerated the coding process, but they have also introduced security challenges that require careful attention. Human-written code typically passes through multiple layers of scrutiny, whereas AI-generated code may bypass traditional review mechanisms. This gap in review increases the risk of introducing vulnerabilities directly into the development pipeline, so a robust security review framework is critical for identifying and addressing them promptly.

Another prevalent issue is the potential for developers to overlook licensing obligations when employing AI tools. The use of open-source components in AI-generated code necessitates careful management of intellectual property (IP) rights, complicating the security landscape further. Failure to comply with licensing requirements can lead to legal complications, putting organizations at risk. Therefore, it is imperative for organizations to enforce stringent license compliance protocols and educate developers about their responsibilities when integrating AI tools in their workflows. Establishing clear policies around the use and licensing of AI-generated code is essential to mitigate these risks.
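
A license compliance protocol of this kind can be partly automated. The following is a minimal sketch, not a definitive implementation: it assumes each component already carries a declared SPDX-style license identifier, and the `ALLOWED_LICENSES` set, the `Dependency` type, and the `find_license_violations` function are hypothetical names introduced only for illustration.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical allowlist (assumption): a real policy would be defined
# by the organization's legal or compliance team.
ALLOWED_LICENSES = {"MIT", "Apache-2.0", "BSD-3-Clause"}


@dataclass(frozen=True)
class Dependency:
    name: str
    license_id: Optional[str]  # SPDX-style identifier, or None if unknown


def find_license_violations(deps, allowed=ALLOWED_LICENSES):
    """Return dependencies whose license is unknown or not on the allowlist."""
    return [d for d in deps if d.license_id is None or d.license_id not in allowed]


if __name__ == "__main__":
    # Illustrative data only; the package names are made up.
    deps = [
        Dependency("http-helper", "MIT"),
        Dependency("fast-parser", "GPL-3.0-only"),
        Dependency("pasted-snippet", None),
    ]
    for dep in find_license_violations(deps):
        print(f"needs review: {dep.name} (license: {dep.license_id})")
```

Treating an unknown license as a violation is a deliberate choice in this sketch: code whose origin cannot be established is routed to a human reviewer rather than silently accepted.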

The Proliferation of Security Testing Tools

As organizations strive to bolster their software security, the proliferation of security testing tools has emerged as a double-edged sword. While these tools are indispensable for identifying vulnerabilities, their sheer number can overwhelm development teams, creating an excess of “noise” or irrelevant results. It is not uncommon for organizations to employ between 6 and 20 different security testing tools, a practice that can significantly bog down security teams. The influx of unnecessary data makes it challenging to isolate genuine threats among the flood of inconsequential findings.

This issue is exacerbated by the fact that 60% of respondents report that over 20% of their security test results are noise. The sheer volume of false positives and irrelevant alerts can desensitize teams, causing critical vulnerabilities to be overlooked. To address this, organizations need to streamline their security testing processes by consolidating tools and focusing on those that provide the highest value. By minimizing reliance on numerous disparate tools, organizations can improve efficiency, reduce the burden on their security teams, and ensure that critical vulnerabilities are promptly addressed.
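
One way to reduce this noise when several tools must stay in place is to merge their output before anyone reviews it. The sketch below is illustrative only: it assumes findings from different tools have already been normalized to a shared shape, and the `Finding` type, the `SEVERITY_RANK` table, and the `consolidate` function are hypothetical names, not part of any particular product.

```python
from dataclasses import dataclass

# Assumed severity scale; real tools use differing scales that would
# need to be mapped onto a common one first.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


@dataclass(frozen=True)
class Finding:
    tool: str
    file: str
    line: int
    rule: str       # normalized weakness category, e.g. a CWE identifier
    severity: str   # one of the keys in SEVERITY_RANK


def consolidate(findings):
    """Collapse findings that share (file, line, rule), keeping the most severe."""
    merged = {}
    for f in findings:
        key = (f.file, f.line, f.rule)
        current = merged.get(key)
        if current is None or SEVERITY_RANK[f.severity] > SEVERITY_RANK[current.severity]:
            merged[key] = f
    return list(merged.values())


if __name__ == "__main__":
    raw = [
        Finding("scanner-a", "app.py", 10, "CWE-89", "medium"),
        Finding("scanner-b", "app.py", 10, "CWE-89", "high"),
        Finding("scanner-a", "util.py", 3, "CWE-79", "low"),
    ]
    unique = consolidate(raw)
    print(f"{len(raw)} raw findings reduced to {len(unique)}")
```

Deduplication addresses only one source of noise, the same issue reported by more than one tool; false positives from a single tool still need tuning or suppression.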

Balancing Security and Development Speed

One of the most pressing challenges in contemporary software development is balancing rigorous security testing against development speed. More than 86% of respondents say that security testing slows down development. The tension is especially pronounced in organizations that rely heavily on manual processes to manage security testing queues, which can significantly delay development timelines.

To alleviate this bottleneck, many organizations are turning to automation. Automated security testing solutions can streamline the process of managing and analyzing test results, reducing the burden on security teams and enabling quicker identification of vulnerabilities. By automating these tasks, organizations can maintain a balance between thorough security testing and rapid development cycles. Embracing automation allows for faster iteration and deployment while ensuring that security is not compromised, ultimately facilitating a more efficient development process.
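
As a rough illustration of what automating a test-result queue can look like, the sketch below splits findings into those that should block a release and those that can be deferred. It is a simplified example under stated assumptions: findings are plain dictionaries with hypothetical `id` and `severity` keys, and the `triage` function, the `block_at` threshold, and the suppression list are inventions for this example.

```python
# Assumed severity scale shared by all findings.
SEVERITY_RANK = {"low": 0, "medium": 1, "high": 2, "critical": 3}


def triage(findings, suppressed_ids, block_at="high"):
    """Split findings into (blocking, deferred).

    Findings whose id is in suppressed_ids (for example, reviewed false
    positives) are dropped. Findings at or above block_at are blocking.
    """
    threshold = SEVERITY_RANK[block_at]
    blocking, deferred = [], []
    for finding in findings:
        if finding["id"] in suppressed_ids:
            continue
        if SEVERITY_RANK[finding["severity"]] >= threshold:
            blocking.append(finding)
        else:
            deferred.append(finding)
    return blocking, deferred


if __name__ == "__main__":
    # Illustrative data only.
    results = [
        {"id": "F-1", "severity": "critical"},
        {"id": "F-2", "severity": "low"},
        {"id": "F-3", "severity": "high"},
    ]
    blocking, deferred = triage(results, suppressed_ids={"F-3"})
    print(f"blocking: {len(blocking)}, deferred: {len(deferred)}")
```

The design keeps the release gate narrow: only unsuppressed findings at or above the threshold stop a build, while lower-severity items are queued rather than ignored.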

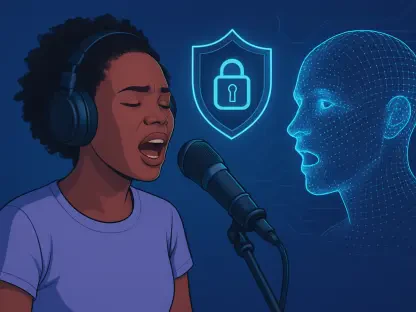

Establishing Governance for AI Tools

The rapid rise in AI tool usage necessitates the establishment of comprehensive governance frameworks to ensure their effective and secure deployment. Developing clear policies and procedures for the use of AI tools is paramount. This includes creating guidelines that address licensing requirements, security review processes for AI-generated code, and the potential IP challenges associated with these tools. Establishing such a governance framework provides developers with the necessary resources and protocols to navigate the complexities of using AI in coding responsibly.

Investment in tools specifically designed to vet and secure AI-generated code is another critical aspect of governance. These specialized tools can help identify potential vulnerabilities early in the development process, ensuring that AI-generated code adheres to security standards. By implementing a robust governance framework, organizations can mitigate the risks associated with AI tools while leveraging their benefits effectively. This strategic approach not only enhances security but also fosters a culture of responsible AI use within the development community.

Conclusion

AI tools and open-source software have transformed software development, democratizing coding and boosting productivity for developers across a wide range of skill levels. That same democratization brings security challenges that organizations need to manage carefully. Comparing today's adoption of AI-assisted development with the historical adoption of open-source software clarifies their impact on security practices and surfaces strategies for the emerging issues. Both technologies require security measures that evolve alongside them. Balancing innovation with security is crucial for realizing these technologies’ full potential while safeguarding the integrity of development environments.