To explore the future of virtual and augmented reality, we spoke with Oscar Vail, a technology expert known for his work in emerging fields such as quantum computing and robotics, as well as on open-source projects. Today, we’ll explore his insights on HandProxy, a voice-controlled digital hand designed to change how users interact with immersive environments. Our conversation touches on the inspiration behind the tool, its practical applications, the role of AI in enhancing its capabilities, and its potential to make VR and AR more accessible and convenient for diverse users.

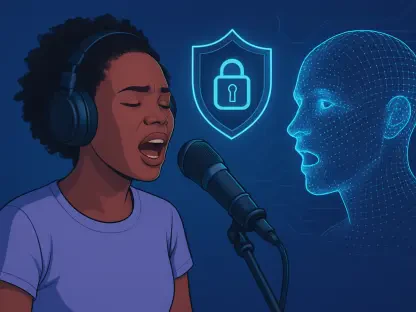

How did the concept of HandProxy come about, and what gap in virtual and augmented reality did you aim to address with a voice-controlled digital hand?

The idea for HandProxy stemmed from a growing need to make VR and AR more inclusive and versatile. We noticed that traditional hand-tracking methods, like gloves or cameras, are immersive but often exclude certain users, whether because of physical limitations, cramped spaces, or the need to multitask. We wanted to create a solution that didn’t rely solely on physical gestures. By integrating voice control, we could offer a hands-free alternative that lets users interact with digital spaces without physical effort, opening up these technologies to a broader audience.

Can you walk us through how HandProxy functions in a virtual environment and what sets it apart from other interaction methods?

HandProxy operates as a disembodied virtual hand that responds to voice commands, mimicking the actions a user’s real hand might perform. You can tell it to grab objects, resize windows, or even gesture—like giving a thumbs up. What makes it unique is its flexibility compared to traditional methods. Unlike gloves or cameras that require physical movement, HandProxy is entirely voice-driven, and it integrates seamlessly with existing VR and AR apps by sending the same digital signals as a tracked hand. This means developers don’t need to build special features just for our system—it just works.
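To make the "same digital signals as a tracked hand" idea concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not HandProxy’s actual code: `HandFrame`, `frames_for_grab`, and `handle_command` are hypothetical names, and the command grammar is reduced to a literal "grab <object>".

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]

@dataclass
class HandFrame:
    """One frame of hand input, shaped like what a tracker might emit (hypothetical)."""
    position: Vec3
    pinch: bool  # True while thumb and index are pinched, i.e. a "grab"

def frames_for_grab(start: Vec3, target: Vec3, steps: int = 3) -> List[HandFrame]:
    """Move in a straight line from start to target, then pinch at the target."""
    frames = []
    for i in range(1, steps + 1):
        t = i / steps
        pos = tuple(s + (e - s) * t for s, e in zip(start, target))
        frames.append(HandFrame(pos, pinch=False))
    frames.append(HandFrame(tuple(target), pinch=True))
    return frames

def handle_command(text: str, hand_pos: Vec3, objects: Dict[str, Vec3]) -> List[HandFrame]:
    """Map a spoken 'grab <name>' command to hand frames; anything else yields none."""
    words = text.lower().split()
    if len(words) == 2 and words[0] == "grab" and words[1] in objects:
        return frames_for_grab(hand_pos, objects[words[1]])
    return []

# Usage: the app would consume these frames exactly as it consumes tracker output.
frames = handle_command("grab cup", (0.0, 0.0, 0.0), {"cup": (3.0, 0.0, 0.0)})
```

Because the output has the same shape as tracked-hand input, the receiving app in this sketch needs no voice-specific code path, which is the property described above.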

What are some of the most exciting tasks HandProxy can handle in a virtual space?

I’m really excited about the range of tasks HandProxy can tackle. For simpler actions, users can say things like “move that box to the left” or “enlarge this window,” and the hand executes it instantly. But what’s truly impressive is its ability to handle more complex, high-level instructions. For instance, saying “clear the table” prompts the hand to figure out the sequence of actions needed without step-by-step guidance. It can interpret the context and act autonomously, which is a game-changer for usability in immersive environments.
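A high-level instruction such as “clear the table” can be thought of as a plan made of simpler hand actions. The Python sketch below shows that expansion with a fixed rule standing in for the AI planner; the scene format, the `shelf` destination, and the action names are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple

Step = Tuple[str, str]

def plan_clear_table(scene: Dict[str, str], destination: str = "shelf") -> List[Step]:
    """Expand 'clear the table' into grab/move/release steps for each item on the table.

    `scene` maps object name -> current location (hypothetical format).
    """
    steps: List[Step] = []
    for name, location in scene.items():
        if location == "table":
            steps += [("grab", name), ("move_to", destination), ("release", name)]
    return steps

# Usage: only objects on the table are moved; the lamp on the floor is left alone.
scene = {"cup": "table", "lamp": "floor", "book": "table"}
plan = plan_clear_table(scene)
```

In a system like the one described, a language model would produce the plan from the scene and the spoken request; the hard-coded rule here only illustrates the shape of the result.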

How does AI play a role in making HandProxy so adaptable to different user commands?

AI is at the heart of HandProxy’s adaptability, particularly through advanced models that power its language understanding. This technology allows the hand to interpret not just rigid, predefined commands but also more natural, conversational instructions. It can parse intent even when a user’s wording isn’t exact, making interactions feel intuitive. This level of flexibility sets it apart from earlier VR voice systems, which were often limited to basic menu navigation. With AI, HandProxy feels more like a helpful agent than a rigid tool.

Who do you see as the primary beneficiaries of this technology in VR and AR settings?

HandProxy has the potential to benefit a wide range of users. For individuals with motor impairments or disabilities like muscular dystrophy, it offers a way to engage with virtual spaces without the physical strain of gestures. It’s also a boon for people in small spaces who can’t make broad movements, or for multitaskers—think someone cooking while navigating an AR display. The hands-free aspect removes barriers, making these technologies more approachable and comfortable for anyone who finds traditional controls cumbersome.

What kind of feedback did you receive from users who tested HandProxy, and did anything in their reactions surprise you?

The feedback from our test participants was incredibly encouraging. Many loved how intuitive it felt to just speak naturally to the virtual hand, almost like chatting with a friend. What caught us off guard was how some users envisioned using it for abstract, non-physical tasks. They suggested ideas like having the hand “organize my workspace” by sorting digital windows autonomously. That opened our eyes to the potential of HandProxy evolving into more of a virtual assistant beyond just mimicking hand actions.

What were some of the biggest hurdles in developing HandProxy, particularly around interpreting voice commands?

One of the toughest challenges was ensuring HandProxy accurately understands user intent, especially with ambiguous language. There were instances where it misinterpreted commands. In one, a user mentioned a “brown object” and the system didn’t connect it to the intended item. In another, it struggled with the phrase “like the photo,” which was meant as an instruction to press a button. We’re actively refining the system to better handle unclear speech, exploring ways for it to ask clarifying questions without overstepping or assuming too much. It’s a delicate balance, but we’re making progress.
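One way to picture the clarifying-question behavior is a resolver that acts only when a description matches exactly one object and asks otherwise. The Python sketch below is a simplified assumption, not how HandProxy resolves references: the attribute sets, the `GENERIC` filler words, and the question wording are hypothetical.

```python
from typing import Dict, Set, Tuple

# Words that carry no identifying information (hypothetical list).
GENERIC = {"the", "a", "an", "object", "thing", "item"}

def resolve_reference(description: str, objects: Dict[str, Set[str]]) -> Tuple[str, str]:
    """Return ('act', name) for a unique match, otherwise ('ask', question)."""
    wanted = set(description.lower().split()) - GENERIC
    # An object matches when it has every remaining descriptive word.
    matches = sorted(
        name for name, attrs in objects.items() if wanted and wanted <= attrs
    )
    if len(matches) == 1:
        return ("act", matches[0])
    if matches:
        return ("ask", "Did you mean " + " or ".join(matches) + "?")
    return ("ask", "I could not find that. Can you describe it another way?")

# Usage: "brown" fits two objects, so the resolver asks instead of guessing.
objects = {
    "box": {"brown", "box"},
    "chair": {"brown", "chair"},
    "mug": {"white", "mug"},
}
result = resolve_reference("the brown object", objects)
```

Asking only when there are zero or several candidates is one possible way to keep questions rare while avoiding wrong guesses.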

Looking ahead, what is your forecast for the future of voice-controlled interactions like HandProxy in immersive technologies?

I believe voice-controlled interactions will become a cornerstone of VR and AR as we strive for more natural and accessible user experiences. With advancements in AI, these systems will get even better at understanding context and nuance, making interactions seamless. I foresee a future where tools like HandProxy not only assist with specific tasks but also anticipate user needs, acting as intelligent companions in virtual spaces. The potential to democratize access to these technologies is huge, and I’m excited to see how this field evolves over the next decade.