The silent language of a glance, a nod, or a simple wink is now being translated into digital action, thanks to a pioneering artificial intelligence that promises to restore independence to those with severe motor impairments. This breakthrough software, developed at Quinnipiac University, transforms a standard webcam into a sophisticated controller, allowing users to navigate computers, communicate with loved ones, and even operate motorized wheelchairs using only their facial movements. This is not a futuristic concept from a distant lab; it is a tangible solution, born from a moment of profound empathy, designed to bridge the gap between human intent and digital command, making technology accessible to all.

The New Hands-Free World: Beyond Science Fiction

What if the subtle movements of your face—a nod, a glance, a wink—could navigate not just a conversation, but the digital world itself? A new technology is turning this concept into a practical reality, offering a lifeline of independence to those who need it most. This hands-free input system, named AccessiMove, represents a significant leap forward in assistive technology, moving beyond cumbersome or specialized hardware. It aims to democratize control by leveraging the devices people already own, such as laptops and tablets, to create a seamless and intuitive user experience.

The core premise is to empower individuals by turning their own expressions into a powerful interface. For someone with a significant motor impairment, this can mean the difference between isolation and connection. The ability to browse the internet, send an email, or participate in a video call without assistance restores a fundamental sense of agency. This technology is not merely about convenience; it is about rebuilding pathways to communication, education, and personal freedom that were previously blocked by physical limitations.

A Spark of Empathy: The Human-Centered Mission Behind the Tech

The project’s origin was not in a corporate boardroom but at an occupational therapy conference three years ago, where Chetan Jaiswal, an associate professor of computer science, witnessed firsthand the communication barriers faced by individuals with motor impairments. Seeing a young man struggle to interact with the world around him ignited a core philosophy that became the project’s guiding principle: technology’s greatest purpose is to serve humanity. This initiative stands in contrast to the profit-first narrative of the tech industry, focusing instead on developing accessible, life-changing tools that bridge the gap between ability and technology.

This human-centered mission catalyzed the formation of an interdisciplinary team. Jaiswal joined forces with occupational therapy expert Karen Majeski and computer scientist Brian O’Neill, alongside dedicated students. Their collaboration culminated in the university’s first patented software of its kind, a testament to what can be achieved when academic expertise is driven by a compassionate goal. The project’s development was rooted in understanding the user’s lived experience, ensuring the final product would be a practical tool for daily life rather than a mere technological curiosity.

How It Works: Translating Facial Expressions into Digital Commands

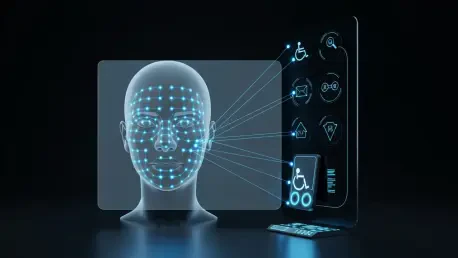

At its heart, the software transforms a standard webcam into a sophisticated control interface without needing any specialized, expensive hardware. It operates on a foundation of three key AI-driven techniques that work in concert to interpret a user’s intent. The system is designed for immediacy and precision, translating subtle facial cues into concrete digital actions in real time, making interaction feel natural and responsive.

The first technique is facial-landmark tracking. The AI identifies and tracks specific points on the user’s face, such as the bridge of the nose. By following the movement of this central point, the system lets a user guide a mouse cursor across the screen with simple, intuitive head movements. Complementing this is head-tilt detection, where deliberate tilts (up, down, left, and right) are mapped to specific, customizable commands. A user could, for example, tilt their head up to launch a web browser or tilt right to restart the computer. Finally, gesture recognition translates simple actions like an eye blink or a wink into discrete inputs, such as a mouse click or a keyboard command, enabling users to type on a virtual keyboard or select icons with ease.
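To make the three techniques concrete, here is a minimal Python sketch of how each could work once facial landmarks are available. It is not AccessiMove's code, which the article does not describe. It assumes a separate landmark detector (not shown) that supplies points normalized to the 0 to 1 range with the origin at the top-left of the frame. The function names, the thresholds, and the use of the common eye-aspect-ratio measure for blinks are all illustrative assumptions.

```python
import math

# Assumption: landmarks arrive as (x, y) tuples normalized to 0..1,
# origin at the top-left of the camera frame. "left"/"right" are in
# image coordinates; a mirrored webcam feed would swap them.

def nose_to_cursor(nose, screen_w, screen_h):
    """Landmark tracking: map the nose-bridge point to a pixel position."""
    x = min(max(nose[0], 0.0), 1.0)  # clamp so the cursor stays on screen
    y = min(max(nose[1], 0.0), 1.0)
    return int(x * (screen_w - 1)), int(y * (screen_h - 1))

def classify_tilt(nose, neutral, threshold=0.12):
    """Head-tilt detection: compare the nose to a resting position.

    Returns "up", "down", "left", "right", or None when the movement
    is smaller than the (hypothetical) threshold.
    """
    dx = nose[0] - neutral[0]
    dy = nose[1] - neutral[1]
    if max(abs(dx), abs(dy)) < threshold:
        return None
    if abs(dx) >= abs(dy):
        return "right" if dx > 0 else "left"
    return "down" if dy > 0 else "up"  # y grows downward in image space

def eye_aspect_ratio(eye):
    """Ratio of eye height to eye width from six points around the eye.

    Points are ordered p1..p6: outer corner, two upper-lid points,
    inner corner, two lower-lid points. The ratio drops toward zero
    as the eye closes.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = 2.0 * math.dist(p1, p4)
    return vertical / horizontal

def classify_eyes(left_eye, right_eye, closed_below=0.2):
    """Gesture recognition: both eyes closed is a blink, one is a wink."""
    left_closed = eye_aspect_ratio(left_eye) < closed_below
    right_closed = eye_aspect_ratio(right_eye) < closed_below
    if left_closed and right_closed:
        return "blink"
    if left_closed or right_closed:
        return "wink"
    return None

# Hypothetical command tables using the article's own examples.
TILT_COMMANDS = {"up": "launch_browser", "right": "restart_computer"}
GESTURE_COMMANDS = {"blink": "mouse_click", "wink": "key_press"}
```

A real system would also need to smooth the landmark positions over several frames and to tell a deliberate blink from an involuntary one, for example by requiring the eyes to stay closed for a minimum duration.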

The Minds Behind the Movement: Expert Insights on Development and Design

The interdisciplinary team from Quinnipiac University stresses that artificial intelligence is the “indispensable engine” that makes this real-time facial tracking and command translation possible. According to the developers, a key design principle was to leverage the “ubiquitous webcams already built into most laptops, tablets, and phones” to ensure maximum accessibility and eliminate the need for costly equipment. This approach democratizes the technology, making it available to anyone with a standard computer.

Rigorous trials confirmed the system’s robustness: it proved effective even for users who wear glasses or have a limited range of motion. A critical feature, highlighted by the occupational therapy experts on the team, is that the software can be calibrated to an individual’s unique physical capabilities, so the technology adapts to the user, not the other way around. Professor Majeski confirmed that early tests demonstrated its effectiveness even with physical limitations like a stiff neck, underscoring the software’s adaptability and focus on individual user needs.

Unlocking Potential: Diverse Applications Across Health, Education, and Gaming

The software’s impact extends far beyond basic computer navigation, offering transformative solutions for a wide range of users. Its most immediate application is in providing personal independence, empowering individuals with significant motor impairments to browse the internet, communicate with family, and regain control over their digital lives. In the realm of mobility, the system can be configured to allow users to control motorized wheelchairs with intuitive facial gestures, such as looking up to move forward and down to reverse, providing newfound autonomy in physical spaces.

The applications continue into clinical and educational settings. For patients in hospitals or long-term care facilities who are unable to speak or use their hands, AccessiMove offers a vital method to communicate their needs. In education, it creates opportunities for “gaming literacy for learning,” enabling children with mobility challenges to engage with educational software and even adapt toys for facial control. Furthermore, the technology introduces novel hands-free interaction for the mainstream gaming industry, making strategy, simulation, and narrative-driven games more inclusive for all players.

From University Lab to Public Launch: The Strategy for a Wider Reach

The team is now focused on the crucial transition from a patented university project to a widely available tool. Their strategy involves actively seeking collaborators and investors, with a particular focus on the robust healthcare industry and major hospitals like Yale Hospital and Hartford Hospital. Securing funding is the critical next step to refine the software into a polished, user-friendly product ready for market. The developers are driven by a desire to partner with organizations committed to making a tangible difference in the lives of patients.

The ultimate vision is twofold and reflects the project’s foundational ethos. First, the goal is to provide a powerful, potentially open-source tool for those who rely on assistive technology, ensuring that cost is not a barrier to access. Second, the team envisions it becoming a convenient, everyday option for anyone seeking a hands-free way to interact with their devices, from professionals multitasking in an office to technicians in the field. This dual approach aims to meet both a critical human need and a broader demand for convenience.