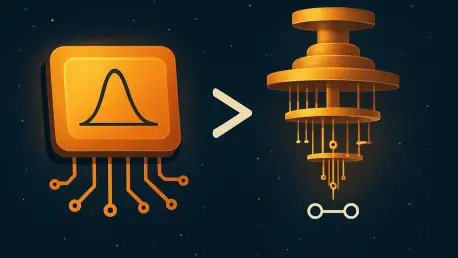

In the race to build a computer that can solve problems beyond the reach of conventional machines, a study from the University of California, Santa Barbara has introduced a new contender. While much of the industry’s focus has been on developing fault-tolerant quantum computers, this research indicates that an alternative approach, the probabilistic computer (p-computer), can outperform a leading quantum system on certain classes of notoriously difficult problems. The work, led by a team of electrical and computer engineering researchers, argues that the p-computer, built from components known as probabilistic bits (p-bits), is a practical near-term technology that is reshaping the competitive landscape and forcing a re-evaluation of the timeline for achieving so-called “quantum advantage.”

A New Contender in High-Performance Computing

The central message of the UCSB research is that p-computing is a promising alternative for hard combinatorial optimization tasks, a class of problems with applications in fields like logistics, drug discovery, and financial modeling. The study underscores an often overlooked trend in high-performance computing: while the quest for a universal quantum computer continues, specialized classical hardware is evolving quickly, continuously raising the performance bar that emerging quantum systems must clear. The researchers argue that the prevailing narrative of imminent quantum supremacy should be tempered by the demonstrated and advancing power of these classical architectures. For a significant category of problems, they contend, the classical pathway is not only viable but, with currently available technology, superior.

This challenge to the quantum status quo builds on architectural and hardware improvements detailed in a preceding study, in which the researchers reached two milestones. First, they demonstrated that voltage-controlled magnetism can be used to create highly efficient p-bits, an important step for reducing the energy consumption and improving the scalability of the hardware. Second, the team compared two p-computer architectures: a synchronous design, in which all p-bits update in parallel, much like “dancers moving in lockstep,” and the original asynchronous architecture, in which each p-bit updates independently and stochastically. The pivotal finding was that a carefully implemented synchronous architecture could match the performance of the established asynchronous designs, a result that opens the way to massively parallel and highly scalable chip designs.
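One way to picture the two update schemes is the sketch below. It is an illustration under stated assumptions, not the UCSB design: it uses the update rule commonly given in the p-bit literature (a p-bit outputs +1 with probability (1 + tanh(βI))/2, where I is the weighted sum of its neighbours), a toy ferromagnetic ring as the network, and a two-colour grouping so that p-bits updated in the same lockstep phase are never directly coupled. All function names are hypothetical.

```python
import math
import random


def pbit_sample(field, beta, rng):
    """Return +1 with probability (1 + tanh(beta * field)) / 2, else -1."""
    return 1 if rng.uniform(-1.0, 1.0) < math.tanh(beta * field) else -1


def local_field(state, couplings, i):
    """Weighted sum of the neighbours of p-bit i."""
    return sum(weight * state[j] for j, weight in couplings[i])


def async_sweep(state, couplings, beta, rng):
    """Asynchronous scheme: p-bits update one at a time, in random order."""
    n = len(state)
    for _ in range(n):
        i = rng.randrange(n)
        state[i] = pbit_sample(local_field(state, couplings, i), beta, rng)


def sync_sweep(state, couplings, beta, rng, color_groups):
    """Lockstep scheme (assumed here): every p-bit in a colour group updates
    from the same snapshot; groups contain no directly coupled pairs."""
    for group in color_groups:
        new_values = [
            pbit_sample(local_field(state, couplings, i), beta, rng)
            for i in group
        ]
        for i, value in zip(group, new_values):
            state[i] = value


def ring_couplings(n, weight=1.0):
    """Toy network: each p-bit is coupled to its two ring neighbours."""
    return [[((i - 1) % n, weight), ((i + 1) % n, weight)] for i in range(n)]


def energy(state, couplings):
    """Ising energy; the factor 0.5 corrects for counting each bond twice."""
    return -0.5 * sum(
        weight * state[i] * state[j]
        for i in range(len(state))
        for j, weight in couplings[i]
    )


if __name__ == "__main__":
    rng = random.Random(1)
    n = 16
    couplings = ring_couplings(n)
    groups = [list(range(0, n, 2)), list(range(1, n, 2))]  # even / odd sites
    a = [rng.choice((-1, 1)) for _ in range(n)]
    s = list(a)
    for _ in range(2000):
        async_sweep(a, couplings, 3.0, rng)
        sync_sweep(s, couplings, 3.0, rng, groups)
    print("async energy:", energy(a, couplings))
    print("sync energy: ", energy(s, couplings))
```

Both sweeps sample the same distribution in this toy setting. The difference is that the lockstep version touches half of the p-bits at once, which is the property that makes a parallel hardware layout attractive.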

Challenging the Quantum Advantage Narrative

A more recent paper, published in Nature Communications, builds directly on this foundational work to challenge a claim from the quantum computing field. Led by postdoctoral researcher Shuvro Chowdhury, the team of 14 co-authors set out to evaluate their advanced p-computer design against a leading quantum annealer. The research addressed a claim from a private entity that its quantum computer had achieved a significant performance breakthrough on spin-glass problems, a standard class of benchmarks used to test hardware on hard optimization tasks. The UCSB-led team’s findings indicate that this claim was premature. Their simulations showed that a p-computer, when scaled to a sufficiently large number of p-bits, could hold a decisive edge in both computational speed and energy efficiency on the very same problems.

The project’s core discovery was serendipitous. The initial insight that massive parallelism could yield unexpectedly strong results came from two visiting Ph.D. students who, while running simulations with a very large number of p-bits, observed non-intuitive and highly favorable behavior. According to Chowdhury, this accidental finding spurred a year-long effort by the team to develop a coherent theory explaining why this extreme level of parallelism improved performance so dramatically. Once that theory was in place, the project shifted from a scientific inquiry to a practical engineering question: could such a massively parallel chip actually be built? After assembling a diverse team of experts, the group concluded with a confident “yes,” leading to a two-year project that culminated in the publication.

Redefining the Classical Baseline

Taken together, the findings make a clear case. The researchers showed that by co-designing a p-computer with hardware tailored to powerful Monte Carlo algorithms (specifically, discrete-time simulated quantum annealing and adaptive parallel tempering), it is possible to create a scalable classical system for solving hard optimization problems. Focusing on 3D spin-glass benchmarks, their simulations showed that the p-computer outperformed the leading quantum annealer on the same problems. The team designed and simulated a specific chip architecture housing three million p-bits, a scale made possible by modern semiconductor technology. Professor Kerem Çamsarı expressed “100% trust” in the simulations, noting that a leading manufacturer could produce this chip today. By running these large-scale problems, the research put the quantum-advantage claim for this benchmark to a direct test, and the simulated p-computer came out ahead.
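To give a feel for the kind of algorithm involved, here is a minimal sketch of parallel tempering on a small 3D spin glass. It makes several assumptions that are not taken from the paper: random ±1 couplings on a 4×4×4 periodic lattice, a fixed temperature ladder (the study uses an adaptive variant, which is not reproduced here), heat-bath updates, and hypothetical function names throughout.

```python
import math
import random


def make_spin_glass(L, rng):
    """Random +/-1 couplings on an L x L x L periodic cubic lattice (L >= 3)."""
    def index(x, y, z):
        return (x % L) * L * L + (y % L) * L + (z % L)

    neighbors = [[] for _ in range(L ** 3)]
    for x in range(L):
        for y in range(L):
            for z in range(L):
                i = index(x, y, z)
                for j in (index(x + 1, y, z), index(x, y + 1, z), index(x, y, z + 1)):
                    coupling = rng.choice((-1, 1))
                    neighbors[i].append((j, coupling))
                    neighbors[j].append((i, coupling))
    return neighbors


def energy(state, neighbors):
    """Spin-glass energy; the factor 0.5 corrects for counting each bond twice."""
    return -0.5 * sum(
        c * state[i] * state[j]
        for i in range(len(state))
        for j, c in neighbors[i]
    )


def heat_bath_sweep(state, neighbors, beta, rng):
    """One pass over all spins; each is set to +1 with probability
    (1 + tanh(beta * field)) / 2, where field is its local field."""
    for i in range(len(state)):
        field = sum(c * state[j] for j, c in neighbors[i])
        state[i] = 1 if rng.uniform(-1.0, 1.0) < math.tanh(beta * field) else -1


def parallel_tempering(neighbors, betas, sweeps, rng):
    """Run one replica per inverse temperature and propose swaps between
    adjacent temperatures after every sweep. Returns the lowest energy seen."""
    n = len(neighbors)
    replicas = [[rng.choice((-1, 1)) for _ in range(n)] for _ in betas]
    energies = [energy(s, neighbors) for s in replicas]
    best = min(energies)
    for _ in range(sweeps):
        for k, beta in enumerate(betas):
            heat_bath_sweep(replicas[k], neighbors, beta, rng)
            energies[k] = energy(replicas[k], neighbors)
        for k in range(len(betas) - 1):
            # Swap acceptance: min(1, exp((beta_k - beta_k+1) * (E_k - E_k+1)))
            delta = (betas[k] - betas[k + 1]) * (energies[k] - energies[k + 1])
            if delta >= 0 or rng.random() < math.exp(delta):
                replicas[k], replicas[k + 1] = replicas[k + 1], replicas[k]
                energies[k], energies[k + 1] = energies[k + 1], energies[k]
        best = min(best, min(energies))
    return best, replicas, energies


if __name__ == "__main__":
    rng = random.Random(7)
    lattice = make_spin_glass(4, rng)
    ladder = [0.2, 0.4, 0.6, 0.8, 1.0, 1.3, 1.6, 2.0]  # fixed, not adaptive
    best, _, _ = parallel_tempering(lattice, ladder, 200, rng)
    print("lowest energy found:", best)
```

The hot replicas move freely across the energy landscape while the cold ones refine good configurations, and the swaps let a promising configuration migrate toward low temperature. Every spin update inside a sweep is the same simple probabilistic operation, which is why this family of algorithms maps naturally onto arrays of p-bits.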

This “truly remarkable result,” as ECE department chair Luke Theogarajan called it, establishes a new, rigorous, and significantly higher classical baseline. It clarifies how practical quantum advantage should be assessed: claims of quantum superiority must be measured against the best available classical alternatives, which are themselves a rapidly moving target. The work also shows the potential of p-bit based computing and suggests that the narrative of a simple quantum-versus-classical race is an oversimplification. The picture that emerges is more complex: specialized, co-designed architectures, both classical and quantum, competing to solve the world’s most challenging computational problems.