With a deep-seated expertise in emerging technologies like quantum computing and open-source projects, Oscar Vail stands at the intersection of innovation and critical infrastructure. He has consistently championed the modernization of foundational systems, making him a leading voice on the digital transformation sweeping through the energy sector. As utilities grapple with the immense challenge of building a resilient, decarbonized grid, Vail’s insights offer a clear-eyed perspective on the path forward. Today, we delve into the core principles of this transformation, exploring the shift from rigid legacy systems to intelligent, software-defined operations. Our conversation will touch upon the critical breaking points of today’s operational technology, the practical application of AI and digital twins in predictive maintenance, the urgent need for next-generation cybersecurity, and the often-overlooked human element—the leadership and workforce development essential for success. We will also look ahead, examining the collaborative actions required to realize the ambitious vision for the grid of 2035.

Your work often contrasts legacy OT environments with new software-defined architectures. From your perspective, what are the top operational and cybersecurity breaking points of these monolithic systems today, and how does adopting modular components with API-driven interoperability directly solve those specific challenges for a utility?

That’s the central question we’re facing. For decades, traditional OT systems were the bedrock of reliability, and for good reason: they were stable, predictable, and isolated. But that very isolation has become their greatest weakness. Operationally, the biggest breaking point is their inability to adapt to a decentralized energy world. A monolithic system is designed for a one-way flow of power from a central plant. It simply cannot handle the dynamic, two-way flow from thousands of solar panels and wind turbines. Innovation grinds to a halt because even a single software update can be a monumental, risky task. On the cybersecurity front, their monolithic nature means a single breach can have an enormous blast radius. Once a threat actor is inside that hardened perimeter, they often have free rein because the internal systems implicitly trust each other. Adopting modular, API-driven components dismantles this old model. Instead of one giant, rigid block, you have a collection of specialized, interoperable services. Operationally, this means a utility can innovate faster, swapping in a new analytics module or a better control algorithm without having to re-engineer the entire system. From a security standpoint, it allows for micro-segmentation and containment, fundamentally changing the game. An attacker might compromise one module, but authenticated APIs and zero-trust principles make it far harder for them to move laterally across the rest of the network.
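To make the "swap in a new module" idea concrete, here is a minimal Python sketch. It assumes a hypothetical `ForecastModule` interface and two toy forecasters; none of these names come from the interview or from any real utility platform. The point is only that the caller depends on the interface, so one module can replace another without changes elsewhere.

```python
from typing import Protocol


class ForecastModule(Protocol):
    """Hypothetical contract that any load-forecasting module must satisfy."""

    def forecast_load_mw(self, recent_load_mw: list[float]) -> float: ...


class LastValueForecast:
    """Toy module: assume the next load equals the most recent reading."""

    def forecast_load_mw(self, recent_load_mw: list[float]) -> float:
        return recent_load_mw[-1]


class MovingAverageForecast:
    """Toy replacement module: average the last `window` readings."""

    def __init__(self, window: int = 3) -> None:
        self.window = window

    def forecast_load_mw(self, recent_load_mw: list[float]) -> float:
        recent = recent_load_mw[-self.window:]
        return sum(recent) / len(recent)


class Dispatcher:
    """Depends only on the ForecastModule interface, not on a concrete module."""

    def __init__(self, forecaster: ForecastModule) -> None:
        self.forecaster = forecaster

    def planned_generation_mw(self, recent_load_mw: list[float]) -> float:
        return self.forecaster.forecast_load_mw(recent_load_mw)


loads = [100.0, 110.0, 120.0]  # made-up load readings in MW
print(Dispatcher(LastValueForecast()).planned_generation_mw(loads))
print(Dispatcher(MovingAverageForecast()).planned_generation_mw(loads))
```

In a real deployment the interface would more likely be a network API than an in-process class, but the design choice is the same: a stable contract at the boundary and replaceable implementations behind it.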

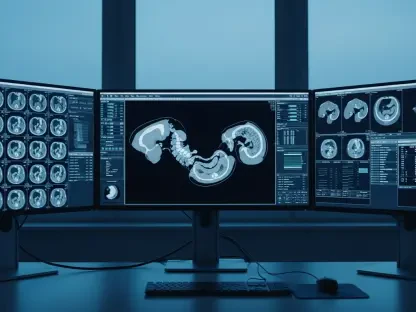

The white paper highlights AI and digital twins as foundational. Could you walk us through a practical example of how a utility would use these tools to enable predictive operations, perhaps sharing a step-by-step process or key metrics they would track to improve grid resilience?

Absolutely. This is where the transformation becomes tangible and incredibly powerful. Imagine a critical substation that serves a large urban area. In the old model, we’d wait for a component to fail during a heatwave, causing an outage, and then scramble to fix it. With AI and digital twins, we move from being reactive to predictive. First, the utility would build a high-fidelity digital twin of that substation, a virtual replica that mirrors its physical counterpart in real time. We’re talking about every transformer, switch, and cable. Second, we feed this twin a constant stream of data from the real world: IoT sensor readings from the equipment, local weather forecasts, and grid load information. Third, an AI engine analyzes this data within the context of the digital twin. It runs thousands of simulations, learning what normal operation looks like under different conditions. It might notice that a transformer’s temperature during peak hours is running just a fraction of a degree above its historical baseline, an early warning sign of failure that a human operator would almost certainly miss. The final step is automated action. The system flags the specific component, predicts, say, a 95% chance of failure within 72 hours, and automatically generates a work order for a maintenance crew with a full diagnostic report. The key metrics they would track are things like the System Average Interruption Duration Index (SAIDI), which should fall as outages are avoided, and asset lifespan, which should increase. It’s a complete shift from fixing what’s broken to preventing it from breaking in the first place, which is the very definition of resilience.
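The baseline comparison and the SAIDI metric mentioned above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the z-score threshold of 3.0, and all sample readings are made up, and a real predictive-maintenance model would be far richer than a simple z-score. The SAIDI calculation follows the standard definition: total customer-minutes of interruption divided by total customers served.

```python
from statistics import mean, stdev


def is_anomalous(history, reading, z_threshold=3.0):
    """Return True if `reading` is more than `z_threshold` sample standard
    deviations above the mean of `history` (a hypothetical baseline rule)."""
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Perfectly flat history: any reading above it counts as a deviation.
        return reading > baseline
    return (reading - baseline) / spread > z_threshold


def saidi(outages, customers_served):
    """SAIDI = sum(customers interrupted * outage minutes) / customers served."""
    customer_minutes = sum(customers * minutes for customers, minutes in outages)
    return customer_minutes / customers_served


# Made-up peak-hour transformer temperatures in degrees Celsius.
peak_hour_temps = [71.8, 72.1, 72.0, 71.9, 72.2, 72.0, 71.9, 72.1]

print(is_anomalous(peak_hour_temps, 72.1))  # typical reading
print(is_anomalous(peak_hour_temps, 73.0))  # about one degree above baseline

# Made-up outages as (customers interrupted, duration in minutes).
print(saidi([(1000, 90), (500, 30)], customers_served=10000))
```

With this sample data, a reading roughly one degree above the baseline is flagged while a typical reading is not, which mirrors the "fraction of a degree" scenario described in the answer.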

You mention the critical need for zero-trust cybersecurity and post-quantum readiness. What are the first three tangible steps a utility C-suite should take to implement a zero-trust model, and what are the biggest cultural or technical hurdles they should anticipate during this transition?

This is a top priority, and it has to be driven from the C-suite because it’s a fundamental philosophical shift. The first tangible step is to achieve complete visibility. You cannot secure what you cannot see. The leadership team must sponsor an initiative to map every single device, user, and data flow across their entire OT and IT network. It’s a daunting task, but it’s non-negotiable. The second step is to enforce strict identity and access controls. This means moving away from shared or legacy passwords and implementing multi-factor authentication for every user and machine, granting only the minimum level of access—or ‘least privilege’—needed for a specific task. The third step is network micro-segmentation. Once you have visibility, you can start creating isolated zones within the network, so that even if one part is compromised, the breach is contained and cannot spread to critical control systems. The biggest hurdle isn’t technical; it’s cultural. OT engineers have spent their careers building systems based on implicit trust within a secure perimeter. Introducing a model of “never trust, always verify” can feel like it’s adding friction to their workflow. There’s a real fear of disrupting stable, critical processes. Technically, the challenge is retrofitting these modern security principles onto legacy equipment that was never designed for them, which requires careful planning and phased implementation to ensure operational continuity.

This transformation is described as more than a technical upgrade. What specific leadership structures and continuous workforce development programs are essential for building the trust and operational excellence needed to shift from static control systems to adaptive, software-defined operations?

This is probably the most overlooked but most important aspect. You can have the best technology in the world, but if your people and processes aren’t aligned, the transformation will fail. On the leadership front, utilities need to break down the historic silos between IT and OT. This often means creating new governance structures and even new roles, like a Chief Digital Officer, who has authority and accountability across both domains. Leadership must clearly and consistently communicate the “why” behind the change—not just the technical “what”—to build buy-in at every level. For the workforce, it requires a commitment to continuous development. We’re asking grid operators, who were trained to follow rigid, static procedures, to become data-savvy analysts who can collaborate with AI-driven recommendations. This demands new training programs that go beyond button-pushing. We need to create hands-on learning environments, perhaps using the digital twins as safe testbeds, where operators can simulate different scenarios and learn to trust the new automated systems. Building that trust is everything. It’s an iterative process of training, application, and seeing the positive results firsthand, which in turn fosters the operational excellence needed to manage a truly adaptive grid.

What is your forecast for the adoption of these software-defined operations? Are we on track for the 2035 vision, or do you see significant hurdles that could slow us down?

My forecast is one of cautious optimism. The 2035 vision is ambitious, but the technological building blocks—AI, edge computing, open standards—are maturing at an incredible pace. We are seeing pockets of brilliant innovation, with forward-thinking utilities already deploying these concepts and proving their value. However, the path to widespread adoption is not a straight line. The most significant hurdle that could slow us down is not the technology itself, but the inertia of regulation and organizational culture. Utility regulation often incentivizes traditional capital investments over more agile, software-based solutions. If regulators and industry leaders don’t collaborate to create new frameworks that encourage this digital transition, progress will be fragmented. The pace of this transformation will ultimately be determined by our ability to match technological advancements with parallel advancements in policy, governance, and workforce skills. If we can align those forces, particularly in the next two to three years, then I believe the 2035 vision is not only achievable but will become the foundation for an even more resilient and sustainable energy future.